We are trying to train a Vowpal Wabbit (VW) model in an online fashion. However, due to our system architecture and the need to scale, we have to train batch-wise and cannot do an online update each time we predict.

My question is: what is the best way to approach this with regard to convergence? Is there perhaps a special mode to run VW in for this?

What we currently do:

Init Model:

    --cb_explore_adf -q UA -q UU --quiet --save_resume --bag 10 --epsilon 0.1

Inference (separately):

- Load vw model

- Predict -> action-distribution

- Sample randomly from the action distribution and play out the sampled action
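The sampling step above can be sketched as follows. This is a minimal sketch, not our production code: `sample_action` is a hypothetical helper, and the action distribution is assumed to be a plain list of probabilities, one per action, in the same order as the action lines. It returns the probability of the sampled action together with its index, so that the value can be logged with the event.

```python
import random


def sample_action(pmf, rng=random):
    """Sample an action index from a probability mass function.

    Returns (index, probability of that index). `pmf` is assumed to be a
    list of non-negative floats; it is normalized defensively.
    """
    total = sum(pmf)
    if total <= 0:
        raise ValueError("pmf must contain at least one positive probability")
    draw = rng.random() * total
    cumulative = 0.0
    for index, prob in enumerate(pmf):
        cumulative += prob
        if draw < cumulative:
            return index, prob / total
    # Guard against floating-point rounding on the last bucket.
    last = len(pmf) - 1
    return last, pmf[last] / total
```

Logging the returned probability next to the chosen action is what makes it possible to train later with either the predicted probability or a constant 1.0.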

Training (separately, triggered periodically):

- Load model

- Load training data (action, context, reward) (reward=1.0 if article_clicked else 0) (from-time=last-train-time, end-time=current-time)

- Update the model one instance at a time (always using probability 1.0). Example training instance:

      shared |User device=device-1
      |Action ad=article-1
      1:0:1 |Action ad=article-2
      |Action ad=article-3
      |Action ad=article-4

- Store vw model
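The periodic training step above could be sketched like this. It is only an illustration under stated assumptions: the event fields (`timestamp`, `example`) and the `learn` callback are hypothetical stand-ins for our event store and for the per-instance VW update, and no VW API is called here.

```python
from typing import Callable, Iterable, NamedTuple


class LoggedEvent(NamedTuple):
    timestamp: float  # when the action was played out (assumed field)
    example: str      # VW-formatted training example (assumed field)


def train_batch(
    events: Iterable[LoggedEvent],
    last_train_time: float,
    current_time: float,
    learn: Callable[[str], None],
) -> int:
    """Replay events in [last_train_time, current_time) in time order.

    Returns the number of events that were passed to `learn`.
    """
    window = [e for e in events if last_train_time <= e.timestamp < current_time]
    # Replay in chronological order, one update per logged event.
    window.sort(key=lambda e: e.timestamp)
    for event in window:
        learn(event.example)
    return len(window)
```

The half-open time window means that an event lying exactly on a boundary is picked up by only one of two consecutive training runs.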

Is there something wrong with this approach? I suspect that feeding the training data in batches and then doing a per-instance update on each batch is not what the exploration algorithm expects. In offline experiments, batch-wise training often seems slightly worse than non-batch training.

EDIT: Regarding the question of which probability to use when learning, we compared using the probability generated by the model during prediction against always using 1.0 (on a toy game, batch_size=500):

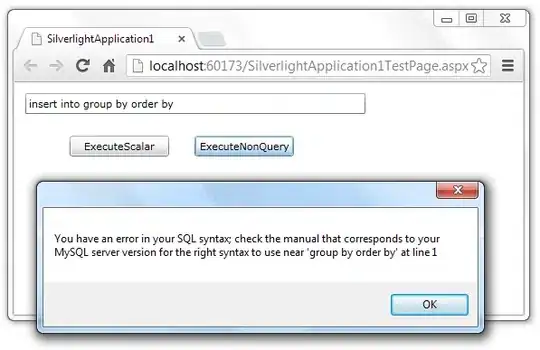

Using probability of prediction for training:

EDIT 2

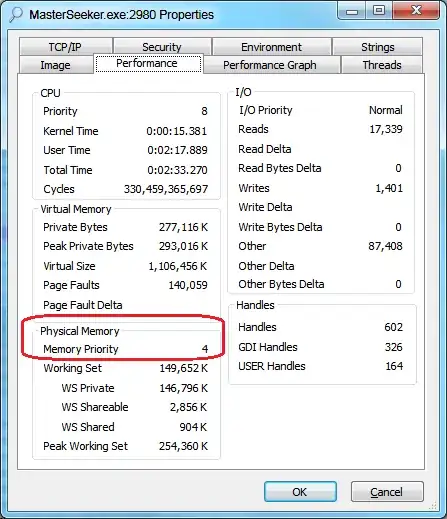

Adding another graphic that compares Vowpal Wabbit models trained with the probability from prediction against models trained with a constant probability of 1.0. Top graph: the x-axis is the number of samples, the y-axis is the total CTR (click-through rate, i.e. the overall average reward). Bottom graph: histogram of the actions (1-3) chosen by the different algorithms.

The chart compares 8 Vowpal Wabbit models: 4 that always use 1.0 as the training probability and 4 that use the probability from prediction. Always using 1.0 seems to perform consistently better.

Additional explanation for the graphics:

The models compete on a toy problem for contextual bandit algorithms: a context is presented (one of 6 pseudo device types) together with three possible actions (also called variants). The CTRs are as follows:

    # Trailing comment on each row: number of the variant with the highest CTR.
    problem_definition = {
        "device-1": {"variant-1": 0.05, "variant-2": 0.06, "variant-3": 0.04},  # 2
        "device-2": {"variant-1": 0.08, "variant-2": 0.07, "variant-3": 0.05},  # 1
        "device-3": {"variant-1": 0.01, "variant-2": 0.04, "variant-3": 0.09},  # 3
        "device-4": {"variant-1": 0.04, "variant-2": 0.04, "variant-3": 0.045},  # 3
        "device-5": {"variant-1": 0.09, "variant-2": 0.01, "variant-3": 0.07},  # 1
        "device-6": {"variant-1": 0.03, "variant-2": 0.09, "variant-3": 0.04},  # 2
    }

The toy game's simulation logic:

    def simulate(self, device, variant):
        assert variant in self.action_space()
        ctr = self.problem_definition[device][variant]
        # Bernoulli draw: reward 1.0 with probability ctr, else 0.0.
        if random.random() < ctr:
            reward = 1.0
        else:
            reward = 0.0
        return reward

The goal of the toy game is to choose the action with the highest CTR given the device type.
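To make that goal concrete, here is a small sketch that derives the best variant per device from the CTR table and compares the expected CTR of an always-optimal policy with that of a uniformly random one. The helper names are hypothetical, and the sketch assumes that all six devices are equally likely, which is not stated above.

```python
problem_definition = {
    "device-1": {"variant-1": 0.05, "variant-2": 0.06, "variant-3": 0.04},
    "device-2": {"variant-1": 0.08, "variant-2": 0.07, "variant-3": 0.05},
    "device-3": {"variant-1": 0.01, "variant-2": 0.04, "variant-3": 0.09},
    "device-4": {"variant-1": 0.04, "variant-2": 0.04, "variant-3": 0.045},
    "device-5": {"variant-1": 0.09, "variant-2": 0.01, "variant-3": 0.07},
    "device-6": {"variant-1": 0.03, "variant-2": 0.09, "variant-3": 0.04},
}


def best_variant(ctrs):
    """Return the variant name with the highest CTR for one device."""
    return max(ctrs, key=ctrs.get)


def optimal_policy_ctr(problem):
    """Expected CTR when the best variant is always chosen.

    Assumes every device is equally likely (an assumption of this sketch).
    """
    return sum(max(ctrs.values()) for ctrs in problem.values()) / len(problem)


def uniform_policy_ctr(problem):
    """Expected CTR when variants are chosen uniformly at random.

    Same equal-likelihood assumption for devices as above.
    """
    per_device = [sum(ctrs.values()) / len(ctrs) for ctrs in problem.values()]
    return sum(per_device) / len(per_device)


best = {device: best_variant(ctrs) for device, ctrs in problem_definition.items()}
```

Under the equal-likelihood assumption, the optimal policy reaches a CTR of roughly 0.076 and a uniformly random policy 0.0525, which gives a rough reference range for the CTR curves.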

Example of how a training example for Vowpal Wabbit is formatted:

    shared |User device=device-4
    |Action ad=variant-1
    |Action ad=variant-2
    0:0.0:1.00 |Action ad=variant-3

And another one, using the probability from prediction instead of a static 1.0:

    shared |User device=device-1
    |Action ad=variant-1
    0:0.0:0.93 |Action ad=variant-2
    |Action ad=variant-3
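For reference, examples like the two above can be produced with a small formatter along these lines. This is a sketch with a hypothetical helper name (`to_vw_example`); it only builds the text and does not call VW. The cost and probability are passed through unchanged, so the same function covers both the static 1.0 variant and the predicted-probability variant.

```python
def to_vw_example(device, variants, chosen, cost, probability):
    """Build one multi-line cb_explore_adf example as text.

    Only the line of the chosen variant carries a label, written as
    action:cost:probability. Mapping a reward to a cost is left to the caller.
    """
    if chosen not in variants:
        raise ValueError(f"chosen variant {chosen!r} is not in {variants!r}")
    lines = [f"shared |User device={device}"]
    for variant in variants:
        features = f"|Action ad={variant}"
        if variant == chosen:
            # Labeled line for the action that was actually played out.
            lines.append(f"0:{cost}:{probability} {features}")
        else:
            lines.append(features)
    return "\n".join(lines)


variants = ["variant-1", "variant-2", "variant-3"]
static_example = to_vw_example("device-4", variants, "variant-3", 0.0, 1.0)
logged_example = to_vw_example("device-1", variants, "variant-2", 0.0, 0.93)
```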

All models were initialized with the same arguments:

    --cb_explore_adf -q UA -q UU --quiet --save_resume --epsilon 0.100