With a uint8, 3-channel image and a uint8 binary mask, I have done the following in OpenCV and Python to change an object on a black background into an object on a transparent background:

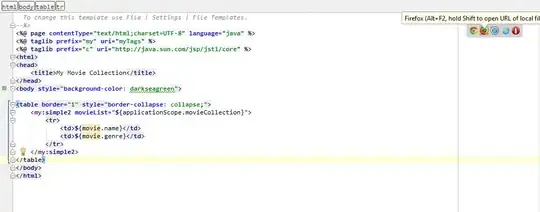

```python
import cv2

# Separate the image into its 3 channels
b, g, r = cv2.split(img)

# Merge the channels back with the mask (resulting in a 4-channel image)
imgBGRA = cv2.merge((b, g, r, mask))
```
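For reference, `cv2.merge` on four single-channel 2-D arrays of the same dtype produces an H×W×4 array, which NumPy's `np.dstack` reproduces. A minimal NumPy-only sketch of the same uint8 step, using a small made-up image and mask (not the real data), shows that in 8 bits an alpha of 255 means fully opaque and 0 means fully transparent:

```python
import numpy as np

# Hypothetical 4x4 uint8 BGR image and a 0/255 binary mask (object in the centre)
img = np.full((4, 4, 3), 120, dtype=np.uint8)
mask = np.zeros((4, 4), dtype=np.uint8)
mask[1:3, 1:3] = 255

# Same idea as cv2.split followed by cv2.merge((b, g, r, mask))
b, g, r = img[..., 0], img[..., 1], img[..., 2]
imgBGRA = np.dstack((b, g, r, mask))

print(imgBGRA.shape, imgBGRA.dtype)   # (4, 4, 4) uint8
print(imgBGRA[..., 3].max())          # 255 -> fully opaque in 8-bit
```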

However, when I try doing this with a uint16, 3-channel image and uint16 binary mask, the saved result is 4-channel, but the background is still black. (I saved it as a .tiff file and viewed it in Photoshop.)

How can I make the background transparent, keeping the output image uint16?

UPDATE

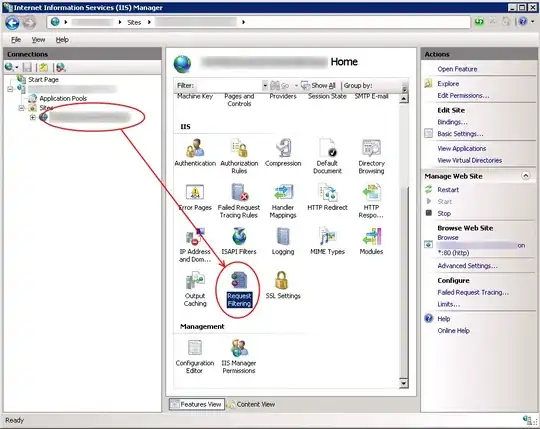

After seeing the comments from @Shamshirsaz.Navid and @fmw42, I tried `imgBGRA = cv2.cvtColor(imgBGR, cv2.COLOR_BGR2BGRA)` and then used NumPy to copy the mask into the alpha channel: `imgBGRA[:, :, 3] = mask`. (I hadn't tried this before, because I thought `cvtColor` operations required an 8-bit image.) Nonetheless, my results are the same.
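One way to express the "copy the mask into the alpha channel" step so that it behaves the same at either bit depth is to scale the mask to the largest value the image's dtype can hold (255 for uint8, 65535 for uint16) before writing it. A minimal NumPy sketch, assuming `mask` is nonzero on the object and zero on the background; `add_alpha` is a hypothetical helper name, not an OpenCV function. This only puts the alpha values into the conventional range; it does not by itself guarantee that a particular viewer will render a saved TIFF as transparent.

```python
import numpy as np

def add_alpha(bgr, mask):
    """Hypothetical helper: stack a BGR image with an alpha channel built from mask.

    Nonzero mask pixels become fully opaque (the dtype's maximum value) and
    zero pixels become fully transparent, for uint8 or uint16 input.
    """
    opaque = np.iinfo(bgr.dtype).max            # 255 for uint8, 65535 for uint16
    bgra = np.empty(bgr.shape[:2] + (4,), dtype=bgr.dtype)
    bgra[..., :3] = bgr                         # copy B, G, R unchanged
    bgra[..., 3] = np.where(mask > 0, opaque, 0)
    return bgra

# Example with a uint16 image and a 0/1 mask like the one described in this question
img16 = np.full((4, 4, 3), 30000, dtype=np.uint16)
mask01 = np.zeros((4, 4), dtype=np.uint16)
mask01[1:3, 1:3] = 1

out = add_alpha(img16, mask01)
print(out.dtype, out[..., 3].max())   # uint16 65535
```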

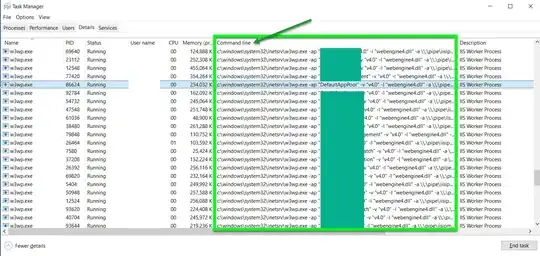

I think the problem is my mask. When I run `numpy.amin(mask)`, I get 0, and for `numpy.amax(mask)`, I get 1. What should they be? I tried multiplying the mask by 255 before using the split/merge technique, but the background was still black. Then I tried `mask * 65535`, but again the background was black.
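For reference, alpha is read relative to the maximum value of its bit depth, so for a straight (non-premultiplied) 16-bit alpha channel the opacity is `alpha / 65535`. A quick calculation of what the mask values mentioned above would mean if used as uint16 alpha:

```python
import numpy as np

max16 = np.iinfo(np.uint16).max   # 65535

# Opacity implied by each candidate mask value when used as 16-bit alpha
for value in (1, 255, 65535):
    print(value, round(value / max16, 6))
# 1 1.5e-05     -> object almost fully transparent
# 255 0.003891  -> still nearly transparent
# 65535 1.0     -> fully opaque
```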

I had tried to keep the scope of my initial post narrow, but it seems that my problem lies somewhere in the larger context of what I'm doing and how this uint16 mask gets created.

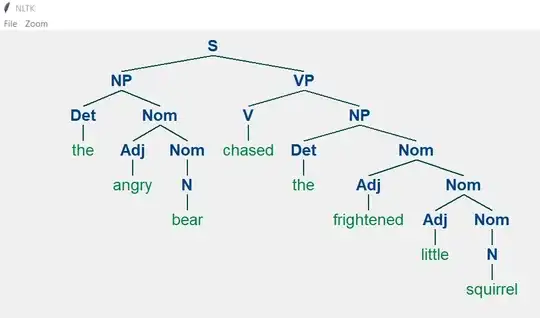

I'm using `connectedComponentsWithStats` (CC) to cut out the components of a uint16 image. CC requires an 8-bit mask, so that is what I pass in, but the cutouts need to come from my uint16 original. This has required some changes to the way I learned to use CC on uint8 images. Note that the per-component mask (which I eventually use to try to make the background transparent) is created as uint16. Here is a whittled-down version:

```python
import cv2
import numpy as np

# img is the original image, dtype=uint16
# bin is the binary mask, dtype=uint8
cc = cv2.connectedComponentsWithStats(bin, connectivity, cv2.CV_32S)
num_labels = cc[0]
labels = cc[1]

for i in range(1, num_labels):
    maskg = (labels == i).astype(np.uint16)  # with uint8: maskg = (labels == i).astype(np.uint8) * 255
    # NOTE: I don't understand why removing the `* 255` works, but after hours of
    # experimenting it's the only way I could get the original to appear correctly
    # when saving 'glyph'. With every other method I tried, the colors were off in
    # some significant way: either grayish blue (whereas the object in my original
    # is variations of brown) or a pixelated rainbow of colors.
    glyph = img * maskg[..., np.newaxis]  # with uint8: glyph = cv2.bitwise_and(img, img, mask=maskg)
    b, g, r = cv2.split(glyph)
    glyphBGRA = cv2.merge((b, g, r, maskg))
```
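A possible explanation for the `* 255` NOTE in the loop (my reading, not something established in this question) is integer overflow: NumPy arithmetic on uint16 arrays wraps around modulo 65536 instead of saturating, so multiplying the uint16 image by a 0/255 mask scales every object pixel by 255 and wraps, which would scramble the colors, while a 0/1 mask leaves them unchanged. A small sketch of the effect on one made-up pixel, plus a boolean-selection alternative that avoids multiplication altogether:

```python
import numpy as np

# One hypothetical uint16 BGR pixel
pixel = np.array([1000, 20000, 40000], dtype=np.uint16)

# uint16 * uint16 stays uint16, so large products wrap around modulo 65536
wrapped = pixel * np.uint16(255)
exact = pixel.astype(np.int64) * 255          # the true product, without wrap-around
assert (wrapped == exact % 65536).all()       # stored values are the true products mod 65536
print(wrapped)                                # no longer proportional to the original B, G, R

# A 0/1 mask multiplies by 1 or 0, so object pixels come through unchanged
kept = pixel * np.uint16(1)
print(kept)

# Selecting with a boolean mask copies pixel values without any arithmetic
img = np.tile(pixel, (2, 2, 1))               # 2x2 uint16 image filled with that pixel
mask = np.array([[1, 0], [0, 1]], dtype=np.uint16)
glyph = np.where(mask[..., np.newaxis] > 0, img, 0).astype(np.uint16)
```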

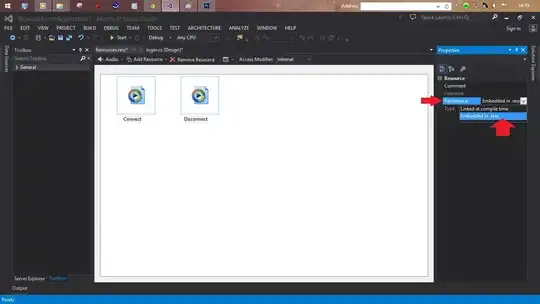

Example (my real original image is huge, and I'm also not able to share it, so I put together this example):

img (original uint16 image)

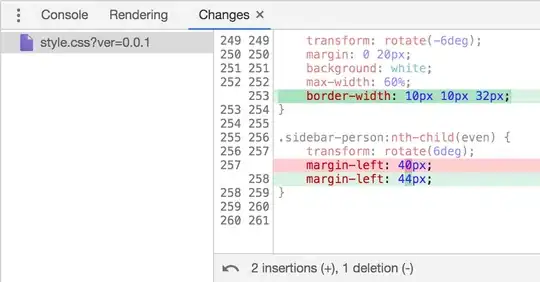

bin (input uint8 mask)

maskg (uint16 component mask created within the loop; this is a screenshot, because it shows up all black when uploaded directly)

glyph (img with maskg applied)

glyphBGRA (result of the split/merge method to add transparency; this is also a screenshot, because it showed up all white/blank when added directly)

I hope this added info provides sufficient context for my problem.