I am trying to split large JSON files into smaller chunks using an Azure Data Factory data flow. The file is split, but columns of type boolean are changed to string in the output files. The same data flow will be used for different JSON files with different schemas, so I can't define a fixed schema mapping and have to use the auto mapping option. How can I solve this issue of automatic data type conversion? Or is there another approach to splitting the file in Azure Data Factory?

2

Akhilesh Jaiswal

Is it possible to add a derived column before the sink and modify the types of the original source properties there, so that you can still use Auto Mapping? – Sarang Kulkarni Aug 23 '21 at 05:28

2 Answers

1

In your ADF Data Flow Source transformation, click on the Projection Tab and click "Define default format". Set explicit values for Boolean True/False so that ADF can use that hint for proper data type inference for your data.
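To make the idea of a "hint" concrete, here is a minimal conceptual sketch in Python. It is not ADF code and does not claim to reflect how ADF implements inference; the function name `infer_boolean_column` and its parameters are hypothetical. It only illustrates why declaring explicit true/false tokens lets a reader decide that a column of strings is really boolean.

```python
from typing import Any, List, Optional


def infer_boolean_column(
    values: List[Optional[Any]],
    true_token: str = "true",
    false_token: str = "false",
) -> List[Optional[Any]]:
    """Conceptual illustration only (hypothetical helper, not an ADF API).

    If every non-null value is already a bool or matches one of the two
    declared tokens, return the column converted to bools. Otherwise
    return the values unchanged, i.e. the column stays as it was read.
    """
    tokens = {true_token: True, false_token: False}
    converted: List[Optional[Any]] = []
    for value in values:
        if value is None or isinstance(value, bool):
            converted.append(value)
        elif isinstance(value, str) and value in tokens:
            converted.append(tokens[value])
        else:
            # One value that matches neither token means the column
            # cannot safely be treated as boolean.
            return list(values)
    return converted
```

For example, `infer_boolean_column(["true", "false", None])` yields booleans, while a column containing any other string is left untouched.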

Mark Kromer MSFT

0

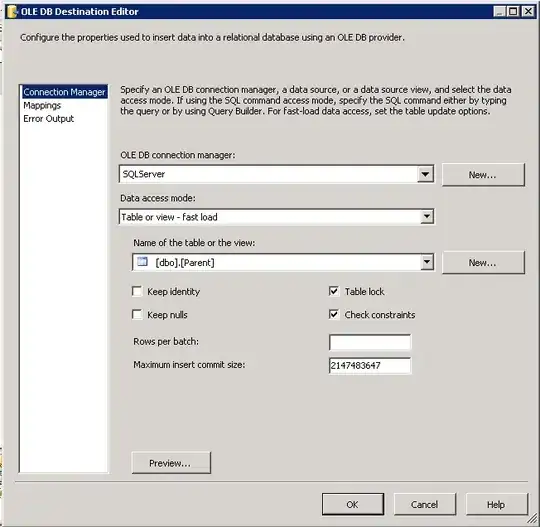

Here, with my dataset, I tried a JSON file as the source and JSON as the sink. If you have a fixed schema and import it, the data flow works fine and returns a boolean value after the pipeline runs.

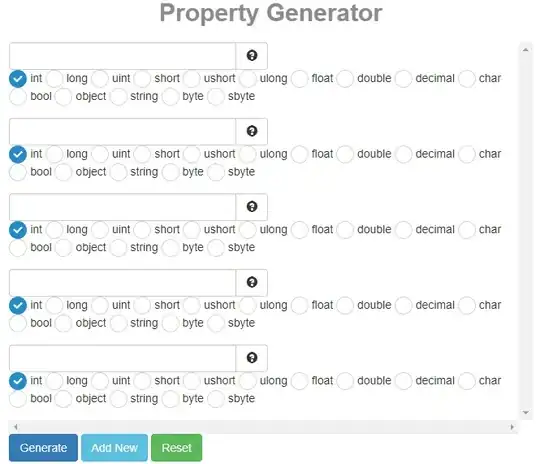

But as you stated, the "same data flow will be used for different json files with different schemas therefore can't have any fixed schema mapping defined". Hence, you need a Derived Column to explicitly convert the affected columns to boolean values.
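On the question's other point (another way to split the file), one option outside the data flow is a small script. The sketch below is only an illustration under stated assumptions: the input is a single top-level JSON array that fits in memory, and the function name `split_json_array` and the `chunk_0000.json` naming scheme are hypothetical. Where such a script would run (for example, a custom activity) is also an assumption and is not covered here. Because Python's `json` module parses `true`/`false` as real booleans and writes them back the same way, no schema mapping is needed and the types survive the split.

```python
import json
from pathlib import Path
from typing import List


def split_json_array(source_path: str, output_dir: str, chunk_size: int) -> List[Path]:
    """Split a top-level JSON array into files of at most chunk_size records.

    Hypothetical helper: loads the whole file into memory, so a truly
    large file would need a streaming parser instead.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be a positive integer")

    records = json.loads(Path(source_path).read_text(encoding="utf-8"))
    if not isinstance(records, list):
        raise ValueError("expected a top-level JSON array")

    out_dir = Path(output_dir)
    out_dir.mkdir(parents=True, exist_ok=True)

    written: List[Path] = []
    for index, start in enumerate(range(0, len(records), chunk_size)):
        chunk_path = out_dir / f"chunk_{index:04d}.json"
        # json.dumps writes Python True/False back as JSON true/false,
        # so boolean fields are not turned into strings.
        chunk_path.write_text(
            json.dumps(records[start:start + chunk_size]), encoding="utf-8"
        )
        written.append(chunk_path)
    return written
```

A call such as `split_json_array("input.json", "out", 1000)` would write `out/chunk_0000.json`, `out/chunk_0001.json`, and so on, each holding up to 1000 records with their original types.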

Import Schema:

In the sink you could inspect:

Data Preview:

IpsitaDash-MT
