We have a Spring Boot application on GKE with autoscaling (HPA) enabled. During startup, the HPA kicks in and starts scaling the pods even though there is no traffic. The output of 'kubectl get hpa' shows a high current average CPU utilization, while the CPU utilization of the nodes and pods is quite low. The same behavior occurs during scale-up: multiple pods are created, which eventually leads to node scaling.

Application deployment YAML:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: myapp-api
      labels:
        app: myapp
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: myapp
      template:
        metadata:
          labels:
            app: myapp
        spec:
          serviceAccount: myapp-ksa
          containers:
          - name: myapp
            image: gcr.io/project/myapp:126
            env:
            - name: DB_USER
              valueFrom:
                secretKeyRef:
                  name: my-db-credentials
                  key: username
            - name: DB_PASS
              valueFrom:
                secretKeyRef:
                  name: my-db-credentials
                  key: password
            - name: DB_NAME
              valueFrom:
                secretKeyRef:
                  name: my-db-credentials
                  key: database
            - name: INSTANCE_CONNECTION
              valueFrom:
                configMapKeyRef:
                  name: connectionname
                  key: connectionname
            resources:
              requests:
                cpu: "200m"
                memory: "256Mi"
            ports:
            - containerPort: 8080
            livenessProbe:
              httpGet:
                path: /actuator/health
                port: 8080
              initialDelaySeconds: 90
              periodSeconds: 5
            readinessProbe:
              httpGet:
                path: /actuator/health
                port: 8080
              initialDelaySeconds: 60
              periodSeconds: 10
          - name: cloudsql-proxy
            image: gcr.io/cloudsql-docker/gce-proxy:1.17
            env:
            - name: INSTANCE_CONNECTION
              valueFrom:
                configMapKeyRef:
                  name: connectionname
                  key: connectionname
            command: ["/cloud_sql_proxy",
                      "-ip_address_types=PRIVATE",
                      "-instances=$(INSTANCE_CONNECTION)=tcp:5432"]
            securityContext:
              runAsNonRoot: true
              runAsUser: 2
              allowPrivilegeEscalation: false
            resources:
              requests:
                memory: "128Mi"
                cpu: "100m"

YAML for HPA:

    apiVersion: autoscaling/v2beta2
    kind: HorizontalPodAutoscaler
    metadata:
      name: myapp-api
      labels:
        app: myapp
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: myapp-api
      minReplicas: 1
      maxReplicas: 10
      metrics:
      - type: Resource
        resource:
          name: cpu
          target:
            type: Utilization
            averageUtilization: 80
      - type: Resource
        resource:
          name: memory
          target:
            type: Utilization
            averageUtilization: 80
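
For context, here is a rough sketch of why the HPA figure can look high while the nodes look idle. With `type: Utilization`, the HPA measures usage as a percentage of the pod's resource *requests* (summed over its containers), not of node capacity, and then applies the documented rule `desiredReplicas = ceil(currentReplicas * currentUtilization / targetUtilization)`. The request totals below come from the manifests above; the usage figures (`cpu_usage_m`, `mem_usage_mi`) are hypothetical startup values chosen only for illustration, and the helper names are my own, not part of any Kubernetes API.

```python
import math


def utilization_percent(usage, request):
    """Usage as a percentage of the requested amount (same units for both)."""
    return 100.0 * usage / request


def desired_replicas(current_replicas, current_util, target_util, tolerance=0.1):
    """Apply the HPA scaling rule, skipping changes within the default 10% tolerance."""
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# Requests summed over both containers in the pod (from the Deployment above).
cpu_request_m = 200 + 100      # myapp + cloudsql-proxy, in millicores
mem_request_mi = 256 + 128     # myapp + cloudsql-proxy, in Mi

# Hypothetical per-pod usage during JVM startup (assumed, not measured).
cpu_usage_m = 450
mem_usage_mi = 350

cpu_util = utilization_percent(cpu_usage_m, cpu_request_m)
mem_util = utilization_percent(mem_usage_mi, mem_request_mi)

# The HPA evaluates each metric separately and uses the largest proposal.
proposals = [
    desired_replicas(3, cpu_util, 80),
    desired_replicas(3, mem_util, 80),
]
print(f"cpu={cpu_util:.0f}% mem={mem_util:.0f}% -> desired replicas: {max(proposals)}")
```

Under these assumed numbers, 450m of CPU is a small fraction of a node yet counts as 150% of the 300m request, which is enough to push the replica count up. The real values can be checked with 'kubectl top pods' against the requests in the Deployment.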

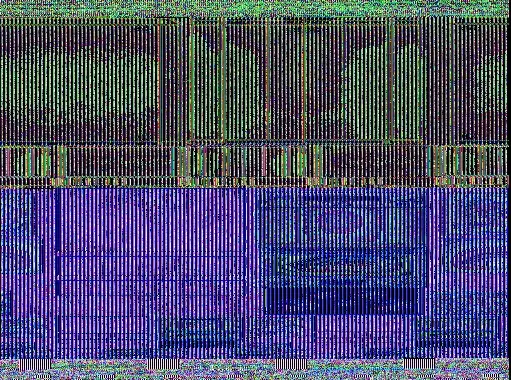

Results of various commands:

$ kubectl get pods

$ kubectl get hpa

$ kubectl top nodes

$ kubectl top pods --all-namespaces