Create an Azure Data Factory pipeline to transfer files between an on-premises machine and Azure Blob Storage

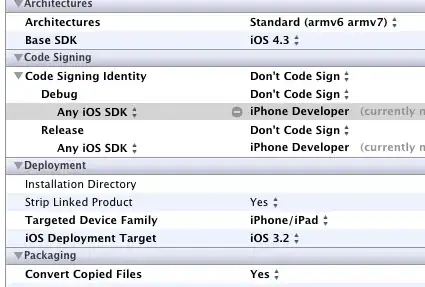

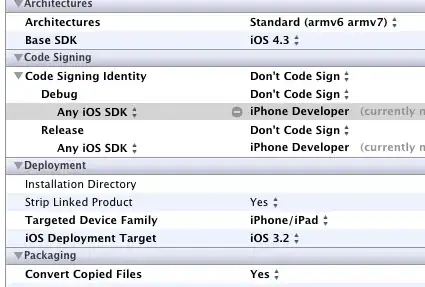

1) To add a source linked service, go to the ADF authoring screen, open the 'Connections' tab on the 'Factory Resources' panel, add a new connection, and select the 'File System' type.

2) Assign a name to the linked service, select 'OnPremIR' from the integration runtime drop-down list, and enter your domain user name in the format 'USERNAME@DOMAINNAME' along with its password. Finally, hit the 'Test connection' button to ensure ADF can connect to your local folder.
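The two steps above produce a JSON definition that you can inspect in the linked service's code view. As a rough sketch, the same definition can be assembled in Python. The linked service name 'LocalFS_LS' and the folder 'C:\Data' are hypothetical placeholders of mine, and the exact JSON your factory generates may differ.

```python
import json


def file_system_linked_service(name, host, user_id, password, integration_runtime):
    """Build a File System ('FileServer') linked service definition as a dict."""
    return {
        "name": name,
        "properties": {
            "type": "FileServer",
            "typeProperties": {
                "host": host,  # root folder the integration runtime can reach
                "userId": user_id,  # e.g. 'USERNAME@DOMAINNAME'
                "password": {"type": "SecureString", "value": password},
            },
            # Routes the connection through the integration runtime chosen in step 2.
            "connectVia": {
                "referenceName": integration_runtime,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


if __name__ == "__main__":
    definition = file_system_linked_service(
        name="LocalFS_LS",  # hypothetical name
        host="C:\\Data",  # hypothetical local folder
        user_id="USERNAME@DOMAINNAME",
        password="<password>",
        integration_runtime="OnPremIR",
    )
    print(json.dumps(definition, indent=2))
```

Keeping the password out of source control (for example by reading it from an environment variable) is advisable if you script this for real.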

3) To add a destination linked service, add a new connection and select the 'Azure Blob Storage' type.

4) Assign a name to the linked service, select 'Use account key' as the authentication method, and select the Azure subscription and storage account name from the respective drop-down lists.

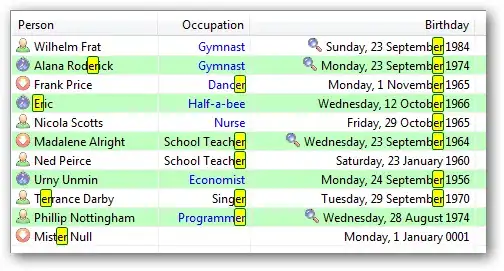
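For reference, the destination linked service boils down to a connection string built from the storage account name and key. The sketch below assumes the public Azure cloud endpoint suffix. The linked service name and account values are hypothetical placeholders.

```python
import json


def blob_storage_linked_service(name, account_name, account_key):
    """Build an Azure Blob Storage linked service definition (account key auth)."""
    connection_string = (
        "DefaultEndpointsProtocol=https;"
        f"AccountName={account_name};"
        f"AccountKey={account_key};"
        "EndpointSuffix=core.windows.net"  # assumption: public Azure cloud
    )
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"connectionString": connection_string},
        },
    }


if __name__ == "__main__":
    definition = blob_storage_linked_service(
        name="BlobSTG_LS",  # hypothetical name
        account_name="<storage-account-name>",
        account_key="<account-key>",
    )
    print(json.dumps(definition, indent=2))
```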

5) Now that we have the linked services in place, we can add the source and destination datasets. Here are the required steps:

- To add the source dataset, press '+' on the 'Factory Resources' panel and select 'Dataset'.

- Open the 'File' tab, select the 'File System' type, and confirm.

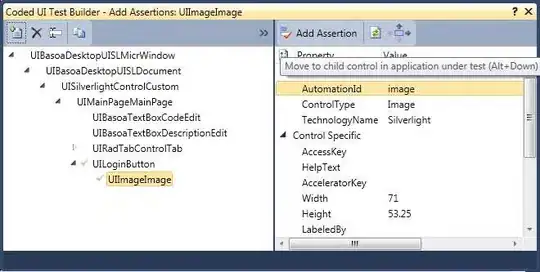

6) Assign a name to the newly created dataset (I named it 'LocalFS_DS') and switch to the 'Connection' tab.

7) Finally, open the 'Schema' tab and hit the 'Import Schema' button to import the schema of the source files.

- To add the destination dataset, hit '+' on the 'Factory Resources' panel and select 'Dataset', just as for the source dataset.

- Select the 'Azure Blob Storage' type and confirm.

- Enter the dataset name (I named it 'BlobSTG_DS') and open the 'Connection' tab.

- Select the blob storage linked service created earlier.

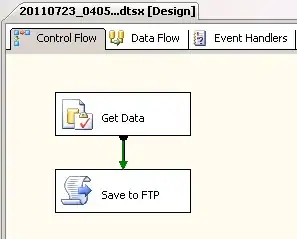

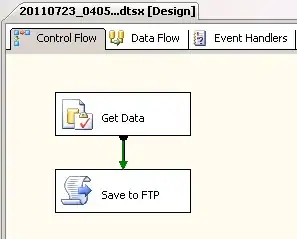
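Both datasets are thin wrappers around a linked service reference plus a location. The sketch below uses the older 'FileShare' and 'AzureBlob' dataset types, which is my assumption about what this UI flow generates. Newer factories tend to use format-based types instead. The linked service names and folder paths are hypothetical.

```python
import json


def dataset_definition(name, dataset_type, linked_service, folder_path):
    """Build a dataset definition that points at a folder via a linked service."""
    return {
        "name": name,
        "properties": {
            "type": dataset_type,
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "typeProperties": {"folderPath": folder_path},
        },
    }


if __name__ == "__main__":
    # Source: a folder under the File System linked service root (hypothetical path).
    source = dataset_definition("LocalFS_DS", "FileShare", "LocalFS_LS", "export")
    # Sink: a blob container (hypothetical container name).
    sink = dataset_definition("BlobSTG_DS", "AzureBlob", "BlobSTG_LS", "mycontainer")
    print(json.dumps([source, sink], indent=2))
```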

8) The last step in this process is adding the pipeline and activity.

- Press '+' on the 'Factory Resources' panel and select 'Pipeline'.

- Assign a name to the pipeline (I named it 'OnPremToBlob_PL').

- Expand the 'Move & Transform' category on the 'Activities' panel and drag and drop the 'Copy Data' activity onto the central panel.

- Select the newly added activity and assign it a name (I named it 'FactInternetSales_FS_BS_AC').

- Switch to the 'Source' tab and select the 'LocalFS_DS' dataset we created earlier.

- Switch to the 'Sink' tab and select the 'BlobSTG_DS' dataset we created earlier.
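Put together, the pipeline is a single Copy activity that reads from one dataset and writes to the other. Here is a minimal sketch of that definition. The 'FileSystemSource' and 'BlobSink' type names are my assumption for the dataset types used in this walkthrough, so compare against the pipeline's code view before relying on them.

```python
import json


def copy_pipeline(pipeline_name, activity_name, source_dataset, sink_dataset):
    """Build a pipeline definition containing one Copy activity."""
    activity = {
        "name": activity_name,
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {"type": "FileSystemSource"},  # assumed source type
            "sink": {"type": "BlobSink"},  # assumed sink type
        },
    }
    return {"name": pipeline_name, "properties": {"activities": [activity]}}


if __name__ == "__main__":
    pipeline = copy_pipeline(
        "OnPremToBlob_PL",
        "FactInternetSales_FS_BS_AC",
        "LocalFS_DS",
        "BlobSTG_DS",
    )
    print(json.dumps(pipeline, indent=2))
```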

9) Publish these changes: hit 'Publish All' and check the notifications area for the deployment status. Then start the pipeline using the 'Trigger Now' command under the 'Trigger' menu.

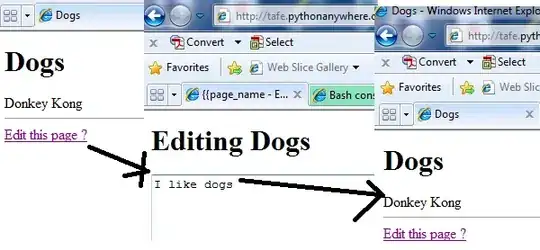
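'Trigger Now' has a scripted counterpart: the Data Factory REST API exposes a createRun operation for pipelines. The sketch below only builds the request URL. Sending it requires a POST with an Azure AD bearer token, which I leave out. The subscription, resource group, and factory names are hypothetical placeholders.

```python
from urllib.parse import quote

API_VERSION = "2018-06-01"


def create_run_url(subscription_id, resource_group, factory_name, pipeline_name):
    """Build the management endpoint URL that starts a pipeline run."""
    parts = [
        quote(value, safe="")
        for value in (subscription_id, resource_group, factory_name, pipeline_name)
    ]
    return (
        "https://management.azure.com/subscriptions/{}/resourceGroups/{}"
        "/providers/Microsoft.DataFactory/factories/{}/pipelines/{}/createRun"
        "?api-version={}"
    ).format(*parts, API_VERSION)


if __name__ == "__main__":
    print(
        create_run_url(
            "00000000-0000-0000-0000-000000000000",  # hypothetical subscription
            "my-resource-group",  # hypothetical resource group
            "my-data-factory",  # hypothetical factory name
            "OnPremToBlob_PL",
        )
    )
```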

To check the execution results, open the ADF Monitoring page and ensure that the pipeline's execution was successful.
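If you would rather check the result from a script than from the Monitoring page, the idea is to poll the run status until it reaches a terminal state. The helper below is a sketch: `get_status` is a hypothetical callable that you would wire to whatever client you use, and the status strings are the ones I expect ADF to report for pipeline runs.

```python
import time

# Statuses after which a pipeline run will not change any more.
TERMINAL_STATUSES = {"Succeeded", "Failed", "Cancelled"}


def wait_for_run(get_status, poll_seconds=15.0, max_polls=40, sleep=time.sleep):
    """Poll get_status() until the run finishes, then return the final status."""
    for _ in range(max_polls):
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        sleep(poll_seconds)  # still 'Queued' or 'InProgress', so wait and retry
    raise TimeoutError(f"run did not finish after {max_polls} polls")


if __name__ == "__main__":
    # Simulated run that finishes on the third poll.
    simulated = iter(["Queued", "InProgress", "Succeeded"])
    final = wait_for_run(lambda: next(simulated), poll_seconds=0)
    print("Pipeline run finished with status:", final)
```

Passing `sleep` as a parameter keeps the helper easy to test without real delays.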

To verify that the files have been transferred successfully, switch to your blob storage screen and open your container.
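The same check can be automated by comparing the file names in the local folder with the blob names in the container. The sketch below takes the blob names as a plain list, so how you obtain them is up to you. One option is the `azure-storage-blob` package, whose `ContainerClient.list_blobs()` yields items with a `name` attribute. It also assumes a flat folder, with no subfolders copied.

```python
from pathlib import Path


def missing_blobs(local_folder, blob_names):
    """Return names of local files that have no blob with the same name."""
    local_files = {p.name for p in Path(local_folder).iterdir() if p.is_file()}
    return sorted(local_files - set(blob_names))


if __name__ == "__main__":
    # Hypothetical blob names; replace with the names listed from your container.
    blob_names = ["FactInternetSales.csv"]
    missing = missing_blobs(".", blob_names)
    if missing:
        print("Not yet in blob storage:", missing)
    else:
        print("All local files are present in the container.")
```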

For more details, refer to this document.