I'm working on extending the existing Flutter camera plugin for iOS. What I basically need to do:

- Catch a live-preview frame.

- Process it (in my case, apply some OpenCV logic) and get back a UIImage.

- Convert it into a CVPixelBuffer and return it to Flutter.

Here I found a great explanation of how Flutter communicates with the Objective-C camera.

Step 1: inside captureOutput I catch a frame, apply some interaction logic that returns an edited UIImage, and convert that image to a CVPixelBuffer, but unfortunately it doesn't work. The returned image can be nil; in that case I return the default preview frame (which works fine).

    UIImage* image = [self imageFromSampleBuffer: sampleBuffer];
    InteractingResultContainer* resultContainer = [_cameraPreviewInteractor operateOnImageFrame: image];

    if (resultContainer.image != nil) {
        CVPixelBufferRef editedBuffer = [self pixelBufferFromCGImage: resultContainer.image.CGImage];
        CFRetain(editedBuffer);
        CVPixelBufferRef old = _latestPixelBuffer;
        while (!OSAtomicCompareAndSwapPtrBarrier(old, editedBuffer, (void **)&_latestPixelBuffer)) {
            old = _latestPixelBuffer;
        }
        if (old != nil) {
            CFRelease(old);
        }
    } else {
        CVPixelBufferRef newBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
        CFRetain(newBuffer);
        CVPixelBufferRef old = _latestPixelBuffer;
        while (!OSAtomicCompareAndSwapPtrBarrier(old, newBuffer, (void **)&_latestPixelBuffer)) {
            old = _latestPixelBuffer;
        }
        if (old != nil) {
            CFRelease(old);
        }
    }

    if (_onFrameAvailable) {
        _onFrameAvailable();
    }
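For reference, the compare-and-swap loop used in both branches amounts to an atomic exchange: store the new buffer, get back the old one, release the old one. Below is a minimal plain-C sketch of that ownership pattern using C11 atomics. `Buffer`, `buffer_retain`, `buffer_release`, `latest_buffer` and `publish` are hypothetical stand-ins for `CVPixelBufferRef`, `CFRetain`, `CFRelease`, `_latestPixelBuffer` and the swap code; none of them come from the plugin.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>

/* Hypothetical stand-in for a reference-counted CVPixelBufferRef.
   The counter is a plain int because this sketch is single-threaded. */
typedef struct {
    int refcount;
} Buffer;

static void buffer_retain(Buffer *b)  { b->refcount++; }  /* role of CFRetain  */
static void buffer_release(Buffer *b) { b->refcount--; }  /* role of CFRelease */

/* Shared slot, playing the role of _latestPixelBuffer. */
static _Atomic(Buffer *) latest_buffer = NULL;

/* Publish `fresh`: the slot takes its own reference to it and gives up
   its reference to whatever it held before. The caller keeps its own. */
static void publish(Buffer *fresh) {
    assert(fresh != NULL);
    buffer_retain(fresh);
    /* One atomic step replaces the retry loop around the CAS call. */
    Buffer *old = atomic_exchange(&latest_buffer, fresh);
    if (old != NULL) {
        buffer_release(old);
    }
}
```

Each published buffer ends up with exactly one reference owned by the slot on top of whatever the caller holds. If the hypothetical `pixelBufferFromCGImage:` already returns an owned buffer (as code built on `CVPixelBufferCreate` usually does), the extra `CFRetain` in the edited branch would leave the caller's reference unreleased; that is an assumption about a helper not shown here.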

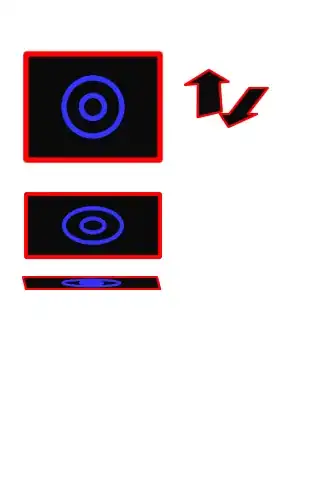

However, debug breakpoints show that the right (edited, with a red circle drawn) CVPixelBuffers are being returned to Flutter:

Original Flutter camera plugin source, with the working captureOutput method (line 592)
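Since the buffers look right in the debugger, one thing that may be worth comparing between the edited buffer and the camera's own buffer is the byte layout: channel order and row padding. The sketch below is plain C and only illustrates the idea. It assumes the preview expects BGRA bytes and that the destination rows may be wider than `width * 4`, which is what `CVPixelBufferGetBytesPerRow` would report for a real buffer. `copy_rgba_to_bgra` is a hypothetical helper, not part of the plugin or of Core Video.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Copy a tightly packed RGBA image into a BGRA destination whose rows
   may carry trailing padding. Padding bytes are left untouched. */
static void copy_rgba_to_bgra(const uint8_t *src, size_t width, size_t height,
                              uint8_t *dst, size_t dst_bytes_per_row) {
    assert(dst_bytes_per_row >= width * 4);
    for (size_t y = 0; y < height; y++) {
        const uint8_t *s = src + y * width * 4;        /* source rows are packed */
        uint8_t *d = dst + y * dst_bytes_per_row;      /* destination rows use the stride */
        for (size_t x = 0; x < width; x++) {
            d[4 * x + 0] = s[4 * x + 2];  /* B */
            d[4 * x + 1] = s[4 * x + 1];  /* G */
            d[4 * x + 2] = s[4 * x + 0];  /* R */
            d[4 * x + 3] = s[4 * x + 3];  /* A */
        }
    }
}
```

With a real `CVPixelBufferRef`, `dst` would be the base address obtained while the buffer is locked, and `dst_bytes_per_row` the value the buffer itself reports rather than one computed from the width.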