I have a collection of grayscale images in a NumPy array. I want to convert the images to RGB before feeding them into a CNN (I am using transfer learning). But when I try to convert the images to RGB, almost all information in the image is lost! I have tried several libraries, but all of them lead to (almost) the same result:

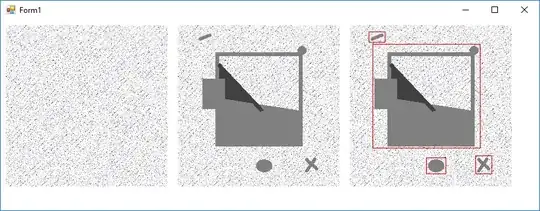

Original image:

```python
import matplotlib.pyplot as plt

plt.imshow(X[0])
```
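Each library below handles `X[0]` differently depending on its dtype and value range, so those two details matter for the attempts that follow. Here is a minimal sketch for reporting them. The `describe` helper and the sample array are hypothetical stand-ins, not part of the original code:

```python
import numpy as np

def describe(arr):
    """Summarize the properties that decide how cv2, PIL and plt.imshow
    interpret an array: dtype, shape and value range."""
    return {
        "dtype": str(arr.dtype),
        "shape": arr.shape,
        "min": float(arr.min()),
        "max": float(arr.max()),
    }

# Hypothetical stand-in for X[0]
sample = np.array([[0.1, 0.4], [0.7, 0.9]])
result = describe(sample)
print(result)
```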

Using cv2:

```python
import cv2
import matplotlib.pyplot as plt

# Attempt 1: float32
img = X[0].astype('float32')  # cv2 needs float32 or uint8 for handling images
rgb_image = cv2.cvtColor(img, cv2.COLOR_GRAY2RGB)
plt.imshow(rgb_image)

# Attempt 2: uint8 (new figure so it doesn't replace the first result)
plt.figure()
img = X[0].astype('uint8')  # cv2 needs float32 or uint8 for handling images
rgb_image = cv2.cvtColor(img, cv2.COLOR_GRAY2RGB)
plt.imshow(rgb_image)
```
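The `uint8` attempt deserves a closer look. `astype('uint8')` does not rescale or clip. It just drops the fractional part. The sketch below assumes `X` holds floats in `[0, 1]`, which the question does not state, and compares a direct cast with scaling to 0 to 255 first:

```python
import numpy as np

# Hypothetical stand-in for X[0]: a float image with values in [0, 1] (assumed range)
img = np.array([[0.0, 0.25], [0.5, 1.0]])

# Direct cast: fractions are truncated toward zero, so almost every pixel becomes 0
naive = img.astype('uint8')

# Scale into 0-255 first, round, then cast
scaled = (img * 255).round().astype('uint8')

print(naive.tolist())   # [[0, 0], [0, 1]]
print(scaled.tolist())  # [[0, 64], [128, 255]]
```

Under that assumption, `cv2.cvtColor(scaled, cv2.COLOR_GRAY2RGB)` would receive an image that still carries its gray levels.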

Using PIL:

```python
from PIL import Image

img = Image.fromarray(X[0])
img_rgb = img.convert("RGB")
plt.imshow(img_rgb)
```
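To check whether PIL itself discards anything, you can convert a small `uint8` array and compare the channels. The sketch below uses a hypothetical array in place of `X[0]`. A 2-D `uint8` array becomes a mode `"L"` image, and converting `"L"` to `"RGB"` copies each gray value into R, G and B:

```python
import numpy as np
from PIL import Image

# Hypothetical uint8 grayscale image standing in for X[0]
gray = np.array([[0, 100], [200, 255]], dtype=np.uint8)

img = Image.fromarray(gray)   # 2-D uint8 array -> mode "L"
img_rgb = img.convert("RGB")  # "L" -> "RGB" replicates the gray value in all channels

rgb = np.asarray(img_rgb)
print(img.mode, img_rgb.mode, rgb.shape)  # L RGB (2, 2, 3)

# Each channel matches the original gray values
same = all(np.array_equal(rgb[..., c], gray) for c in range(3))
```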

Using NumPy (taken from https://stackoverflow.com/a/40119878/14420572):

```python
import numpy as np

stacked_img = np.stack((X[0],) * 3, axis=-1)
plt.imshow(stacked_img)
```
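You can check whether the data or only the display changes by comparing the stacked channels with the source array. The sketch below uses a hypothetical array in place of `X[0]` with an assumed value range. It relies on one documented Matplotlib behavior: `plt.imshow` clips float RGB data to `[0, 1]` (and integer RGB data to `[0, 255]`), whereas a 2-D array is normalized and drawn through a colormap.

```python
import numpy as np

# Hypothetical stand-in for X[0]; the real dtype and range are not stated in the question
img = np.array([[10.0, 50.0], [90.0, 130.0]])

# Same call as above: copy the single channel into three identical channels
stacked = np.stack((img,) * 3, axis=-1)

# Nothing is lost: each channel equals the original image exactly
channels_equal = all(np.array_equal(stacked[..., c], img) for c in range(3))

# For display only: min-max scale a copy into [0, 1] so imshow does not clip it
lo, hi = stacked.min(), stacked.max()
scale = (hi - lo) if hi > lo else 1.0  # avoid dividing by zero on a constant image
display = (stacked - lo) / scale

print(stacked.shape, channels_equal)  # (2, 2, 3) True
```

`plt.imshow(display)` should then look like `plt.imshow(X[0], cmap='gray')`. Without a `cmap`, a 2-D array is drawn with Matplotlib's default colormap (viridis), so it will not match any 3-channel version visually. The scaled copy is only for viewing. What the CNN should receive depends on the preprocessing the pretrained model expects.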

What should I do to make sure the images are converted without quality loss?