We have created a Hudi dataset with a two-level partition layout, like this:

s3://somes3bucket/partition1=value/partition2=value

where partition1 and partition2 are both of type string.

A simple count query using the Hudi format in spark-shell takes almost 3 minutes to complete:

```scala
spark.read.format("hudi").load("s3://somes3bucket").
  where("partition1 = 'somevalue' and partition2 = 'somevalue'").
  count()

res1: Long = ####
```

- Attempt 1: 3.2 minutes
- Attempt 2: 2.5 minutes

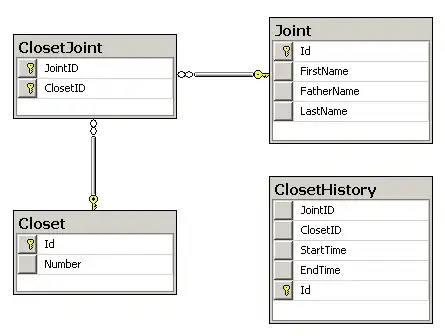

Here are the metrics in the Spark UI: ~9000 tasks are used for the computation, which is roughly the total number of files in the ENTIRE dataset s3://somes3bucket. It looks like Spark reads the entire dataset instead of pruning partitions, and only then filters the rows by the where clause.
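To make the suspected behavior concrete: with Hive-style directories like the ones above, partition pruning means selecting files from their directory names before opening any of them, whereas the Hudi read appears to open every file and apply the where clause afterwards. Below is a minimal plain-Scala sketch of that difference (no Spark involved). The file paths and partition values are hypothetical, and this only illustrates the idea; it is not how Spark or Hudi implement pruning internally.

```scala
// Minimal, self-contained sketch of Hive-style partition pruning.
// HYPOTHETICAL file paths that only mimic the layout
// s3://somes3bucket/partition1=value/partition2=value from this post.

// Extract the "key=value" directory segments of a path into a Map.
def partitionValues(path: String): Map[String, String] =
  path.split("/").iterator
    .filter(_.contains("="))
    .map { segment =>
      val i = segment.indexOf('=')
      segment.substring(0, i) -> segment.substring(i + 1)
    }
    .toMap

// With pruning, only files whose partition directories satisfy every
// equality predicate are selected; the other files are never opened.
def prunedFiles(files: Seq[String], predicate: Map[String, String]): Seq[String] =
  files.filter { file =>
    val values = partitionValues(file)
    predicate.forall { case (k, v) => values.get(k).contains(v) }
  }

val allFiles = Seq(
  "s3://somes3bucket/partition1=a/partition2=x/f1.parquet",
  "s3://somes3bucket/partition1=a/partition2=x/f2.parquet",
  "s3://somes3bucket/partition1=a/partition2=y/f3.parquet",
  "s3://somes3bucket/partition1=b/partition2=x/f4.parquet"
)
val predicate = Map("partition1" -> "a", "partition2" -> "x")

// Without pruning every file is scanned and rows are filtered afterwards;
// with pruning only the matching partition's files are scanned.
println(s"files scanned without pruning: ${allFiles.size}")
println(s"files scanned with pruning:    ${prunedFiles(allFiles, predicate).size}")
```

This prints 4 files without pruning and 2 with pruning. Scaled up, that is the same shape as the ~9000 files scanned through Hudi versus the 1361 files scanned through the Parquet reader.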

By contrast, if I read the same dataset with the Parquet format, the query takes only ~30 seconds (vs. ~3 minutes with the Hudi format):

```scala
spark.read.parquet("s3://somes3bucket").
  where("partition1 = 'somevalue' and partition2 = 'somevalue'").
  count()

res2: Long = ####
```

Time: ~30 seconds

Here is the Spark UI, where only 1361 files are scanned (vs. ~9000 files with Hudi), taking only 15 seconds.

Any idea why partition pruning does not work with the Hudi format? Am I missing some configuration when creating the dataset?

PS: I ran this query on emr-6.3.0, which ships with Hudi 0.7.0.