I am using OpenCV (`cv2`) to build an auto-block function for a game. The idea is simple: a red indicator shows up in a specific region of the screen, and if template matching against that region returns a `max_val` higher than a threshold, the script presses the key that blocks that attack.

I have a template of the indicator with a transparent background. It works on a few screenshots, but it fails on most of the others.
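
To make the intended behaviour concrete, here is a minimal sketch of the decision step only. The names `maybe_block` and `press`, the threshold `0.8`, and the key `"a"` are placeholders I made up for illustration; in the real script `max_val` would come from `cv2.minMaxLoc` and `press` would be something like `pyautogui.press`.

```python
from typing import Callable


def maybe_block(max_val: float, threshold: float, key: str,
                press: Callable[[str], None]) -> bool:
    """Call press(key) when the match score exceeds the threshold."""
    if max_val > threshold:
        press(key)
        return True
    return False


# Dry run with a stand-in for the key press that only records the key.
# The scores, the 0.8 threshold, and the "a" key are hypothetical values.
pressed = []
maybe_block(0.91, 0.8, "a", pressed.append)  # above threshold: key is recorded
maybe_block(0.42, 0.8, "a", pressed.append)  # below threshold: nothing happens
print(pressed)
```

Passing the key-press function in as an argument keeps this part testable without touching the keyboard or the screen.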

Here is the data I'm using:

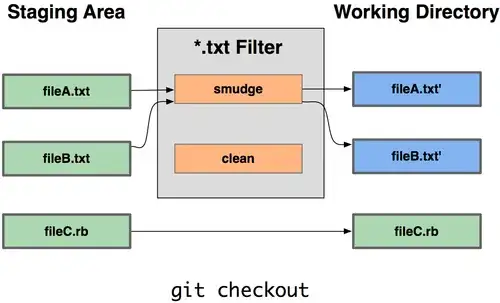

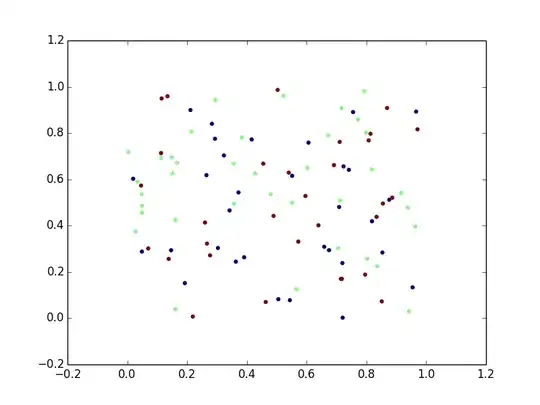

Template:

Screenshot where it successfully detects:

Screenshot where it fails to detect:

Code to detect:

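    import time

    import cv2
    import numpy as np
    import pyautogui


    def block_left():
        # Live-capture variant (disabled while testing on saved screenshots).
        # np.array(screenshot) is already an image array, so it is converted
        # with cvtColor rather than passed to cv2.imread, which expects a path.
        # while True:
        #     screenshot = pyautogui.screenshot(region=(960, 455, 300, 260))
        #     region = cv2.cvtColor(np.array(screenshot), cv2.COLOR_RGB2BGR)

        # Load the saved screenshot and the template, keeping any alpha channel.
        region = cv2.imread('Screenshots/Left S 1.png', cv2.IMREAD_UNCHANGED)
        block = cv2.imread(r'Block Images/Left Block.png', cv2.IMREAD_UNCHANGED)

        # Match the template against the region and take the best score.
        matched = cv2.matchTemplate(region, block, cv2.TM_CCOEFF_NORMED)
        min_val, max_val, min_loc, max_loc = cv2.minMaxLoc(matched)
        print(max_val)

        # Draw a rectangle around the best match for visual inspection.
        w = block.shape[1]
        h = block.shape[0]
        cv2.rectangle(region, max_loc, (max_loc[0] + w, max_loc[1] + h), (255, 0, 0), 2)

        cv2.imshow('Region', region)
        cv2.waitKey()


    block_left()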

I have tried several other methods, but all of them were less successful than this one. Are there any filters or preprocessing steps I can add to make the detection reliable? Thank you.

Note: the images uploaded above are 8-bit. The images I actually use are 32-bit, but they were too large to upload here, so I have put them at: https://ibb.co/r7B7G6B https://ibb.co/r0r9w5T https://ibb.co/KXP3wWc