I'm tuning a model with GridSearchCV using three scoring metrics: precision, recall, and accuracy. However, when I inspect cv_results_, all three metrics have identical values for every parameter combination.

Here is my code.

    from sklearn.ensemble import RandomForestClassifier
    from sklearn.metrics import accuracy_score, make_scorer, precision_score, recall_score
    from sklearn.model_selection import GridSearchCV

    model = RandomForestClassifier(random_state=0)

    # Hyperparameter grid to search over
    param_grid = {
        'max_depth': range(5, 11, 1),
        'max_features': range(10, 21, 1),
        'n_estimators': [10, 100],
        'min_samples_leaf': [0.1, 0.2, 0.3]
    }

    # Three metrics; precision and recall are micro-averaged
    scorers = {
        'precision_score': make_scorer(precision_score, average='micro'),
        'recall_score': make_scorer(recall_score, average='micro'),
        'accuracy_score': make_scorer(accuracy_score)
    }

    grid_search = GridSearchCV(model, param_grid=param_grid,
                               scoring=scorers, refit='precision_score',
                               return_train_score=True,
                               cv=3, n_jobs=-1, verbose=3)

    grid_search.fit(X, y)

The scores returned by cv_results:

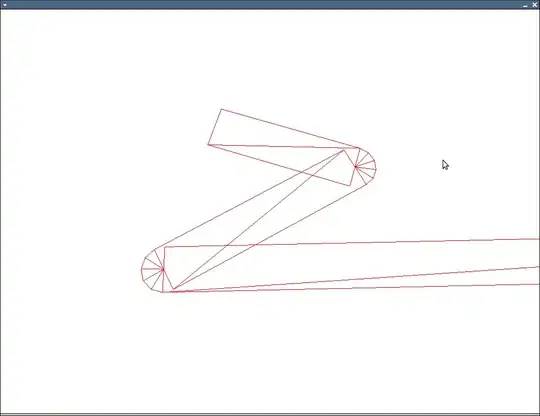

pd.DataFrame(grid_search.cv_results_)[['mean_test_precision_score', 'mean_test_recall_score', 'mean_test_accuracy_score']].head(10)
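To check whether this is specific to my data, here is a small hand computation on toy labels. This is only a sketch: the labels are made up, `micro_metrics` is a hypothetical helper (not a scikit-learn function), and it assumes each sample has exactly one label, as in my `y`. It pools true positives, false positives, and false negatives across classes, which is my understanding of what `average='micro'` does.

```python
from collections import Counter

# Toy labels, made up for illustration (not my real data).
y_true = [0, 0, 1, 1, 2, 2, 2, 1]
y_pred = [0, 1, 1, 1, 2, 0, 2, 2]


def micro_metrics(y_true, y_pred):
    """Return (precision, recall, accuracy), micro-averaged by hand."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # class p was predicted, but wrongly
            fn[t] += 1  # true class t was missed
    total_tp = sum(tp.values())
    # Micro-averaging pools the counts over all classes before dividing.
    precision = total_tp / (total_tp + sum(fp.values()))
    recall = total_tp / (total_tp + sum(fn.values()))
    accuracy = total_tp / len(y_true)
    return precision, recall, accuracy


precision, recall, accuracy = micro_metrics(y_true, y_pred)
print(precision, recall, accuracy)
```

On this toy example the three numbers also coincide: every misclassified sample adds one false positive (for the predicted class) and one false negative (for the true class), so the pooled denominators are the same. That suggests the identical columns may come from the micro-averaging itself rather than from my data or the grid search.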

The way I made my X and y (if that matters):

    from sklearn import preprocessing

    # data_merge is my DataFrame; 'label' is the target column
    X = data_merge.drop('label', axis=1)
    le = preprocessing.LabelEncoder()
    y = le.fit_transform(data_merge['label'])

I'm running scikit-learn 0.24.2 and Python 3.8.10.