I have an imbalanced two-class classification problem with 12750 samples for class 0 and 2550 samples for class 1. I computed class weights with class_weight.compute_class_weight and passed them to model.fit. I have tried many loss functions and optimizers. The accuracy on the test data is reasonable, but the loss and accuracy curves do not look normal, as shown below. I would appreciate any suggestions on how to smooth the curves and fix this problem.

Thank you
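
For reference, the `'balanced'` mode of `compute_class_weight` is documented as `n_samples / (n_classes * np.bincount(y))`. The sketch below reproduces that arithmetic in plain Python for the full-dataset counts quoted above. The helper name `balanced_class_weights` is made up for illustration, and the weights actually used in the code are computed on `y_train` after the 80/20 split, so they will differ slightly from these.

```python
def balanced_class_weights(counts):
    """Return {class_index: weight} using n_samples / (n_classes * count).

    `counts` is a list of per-class sample counts, indexed by class label.
    This is a hypothetical helper, not part of scikit-learn.
    """
    n_samples = sum(counts)
    n_classes = len(counts)
    return {i: n_samples / (n_classes * c) for i, c in enumerate(counts)}


# Counts quoted in the question: 12750 samples of class 0, 2550 of class 1.
weights = balanced_class_weights([12750, 2550])
print(weights)
```

With these counts the minority class is weighted five times as heavily as the majority class, matching the 5:1 imbalance.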

import tensorflow as tf

import keras

import numpy as np

from keras.models import Sequential

from keras.layers import Dense, Flatten

from keras.layers import Conv2D, MaxPooling2D, UpSampling2D,Dropout, Conv1D

from sklearn.utils import class_weight

import scipy.io

from sklearn.model_selection import train_test_split

from sklearn.utils import shuffle

import sklearn.metrics as metrics

#General Variables

batch_size = 32

epochs = 100

num_classes = 2

#Load Data

# X_p300 = scipy.io.loadmat('D:/P300_challenge/BCI data- code 2005/code2005/p300Cas.mat',variable_names='p300Cas').get('p300Cas')

# X_np300 = scipy.io.loadmat('D:/P300_challenge/BCI data- code 2005/code2005/np300Cas.mat',variable_names='np300Cas').get('np300Cas')

X_p300 = scipy.io.loadmat('/content/drive/MyDrive/p300/p300Cas.mat',variable_names='p300Cas').get('p300Cas')

X_np300 = scipy.io.loadmat('/content/drive/MyDrive/p300/np300Cas.mat',variable_names='np300Cas').get('np300Cas')

X_np300=X_np300[:,:]

X_p300=X_p300[:,:]

X=np.concatenate((X_p300,X_np300))

X = np.expand_dims(X,2)

Y=np.zeros((15300,))

Y[0:2550]=1

#Shuffle data, as it is currently ordered by row/column index

print('Shuffling...')

X, Y = shuffle(X, Y)

#Split data into 80% training and 20% testing

print('Splitting...')

x_train, x_test, y_train, y_test = train_test_split(

X, Y, train_size=.8, test_size=.2, shuffle=True)

# determine the weight of each class

# Keyword arguments are required by recent scikit-learn releases

class_weights = class_weight.compute_class_weight(class_weight='balanced',

classes=np.unique(y_train),

y=y_train)

class_weights = {i:class_weights[i] for i in range(2)}

y_train = tf.keras.utils.to_categorical(y_train, num_classes)

y_test = tf.keras.utils.to_categorical(y_test, num_classes)

model = Sequential()

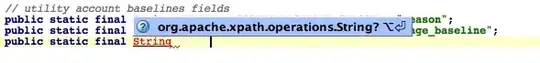

model.add(Conv1D(256,kernel_size=3,activation='relu', input_shape =(1680, 1)))

# model.add(Dropout(.5))

model.add(Flatten())

model.add(Dense(200, activation='relu'))

model.add(Dense(50, activation='relu'))

model.add(Dense(2, activation='softmax'))

model.compile(loss='mse',

optimizer='sgd',

metrics= ['acc'])

# Use this call to apply the class weights during training

history = model.fit(x_train, y_train,class_weight=class_weights, validation_split = 0.3, epochs = epochs, verbose = 1)

#model.fit(x_train, y_train,batch_size=32,validation_split = 0.1, epochs = epochs, verbose = 1)

import matplotlib.pyplot as plt

history_dict = history.history

history_dict.keys()

loss_values = history_dict['loss']

val_loss_values = history_dict['val_loss']

acc = history_dict.get('acc')

epochs = range(1, len(acc) + 1)

plt.plot(epochs, loss_values, 'r--', label = 'Training loss')

plt.plot(epochs, val_loss_values, 'b', label = 'Validation_loss')

plt.title('Training and Validation Loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend()

plt.show()

acc_values = history_dict['acc']

val_acc_values = history_dict['val_acc']

plt.plot(epochs, acc, 'r--', label = 'Training acc')

plt.plot(epochs, val_acc_values, 'b', label = 'Validation acc')

plt.title('Training and Validation accuracy')

plt.xlabel('Epochs')

plt.ylabel('accuracy')

plt.legend()

plt.show()

model.summary()

test_loss, test_acc = model.evaluate(x_test, y_test)

print('test_acc:', test_acc)