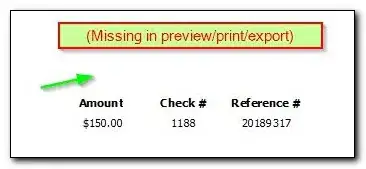

I got the output shown above after stitching the result of the first 24 stitched images with the 25th image. Up to that point, the stitching was good.

Does anyone know why, or under what conditions, stitching produces output like this? What could cause it?

My stitching code follows the standard steps: detecting keypoints, computing descriptors, matching them, estimating a homography, and warping the images. But I don't understand why this output appears.

The core part of the stitching is below:

```python
import copy

import cv2
import numpy as np


def stitch(image1, image2):
    """Warp image2 onto image1 (the running panorama) and return the result.
    The function name is illustrative; the original post shows only the body."""
    detector = cv2.SIFT_create(400)  # keep at most the 400 strongest keypoints

    # find the keypoints and descriptors with SIFT, ignoring pure-black (empty) pixels
    gray1 = cv2.cvtColor(image1, cv2.COLOR_BGR2GRAY)
    ret1, mask1 = cv2.threshold(gray1, 1, 255, cv2.THRESH_BINARY)
    kp1, descriptors1 = detector.detectAndCompute(gray1, mask1)

    gray2 = cv2.cvtColor(image2, cv2.COLOR_BGR2GRAY)
    ret2, mask2 = cv2.threshold(gray2, 1, 255, cv2.THRESH_BINARY)
    kp2, descriptors2 = detector.detectAndCompute(gray2, mask2)

    # outImage is the output buffer (None lets OpenCV allocate it); the flag goes in flags=
    keypoints1Im = cv2.drawKeypoints(image1, kp1, None, color=(0, 0, 255),
                                     flags=cv2.DRAW_MATCHES_FLAGS_DEFAULT)
    keypoints2Im = cv2.drawKeypoints(image2, kp2, None, color=(0, 0, 255),
                                     flags=cv2.DRAW_MATCHES_FLAGS_DEFAULT)

    # BFMatcher with default params (L2 norm, suitable for SIFT)
    matcher = cv2.BFMatcher()
    matches = matcher.knnMatch(descriptors2, descriptors1, k=2)

    # Apply Lowe's ratio test
    good = []
    for pair in matches:
        if len(pair) < 2:  # knnMatch can return fewer than k neighbours
            continue
        m, n = pair
        if m.distance < 0.75 * n.distance:
            good.append(m)
    print(str(len(good)) + " Matches were Found")

    if len(good) <= 10:
        return image1

    matches = copy.copy(good)
    # The original post used its own util.drawMatches helper (not shown);
    # cv2.drawMatches is substituted here so the snippet runs on its own
    matchDrawing = cv2.drawMatches(gray2, kp2, gray1, kp1, matches, None)

    # Aligning the images: homography mapping image2 points onto image1
    src_pts = np.float32([kp2[m.queryIdx].pt for m in matches]).reshape(-1, 1, 2)
    dst_pts = np.float32([kp1[m.trainIdx].pt for m in matches]).reshape(-1, 1, 2)
    H = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, 5.0)[0]
    if H is None:  # RANSAC could not fit a model
        return image1

    h1, w1 = image1.shape[:2]
    h2, w2 = image2.shape[:2]
    pts1 = np.float32([[0, 0], [0, h1], [w1, h1], [w1, 0]]).reshape(-1, 1, 2)
    pts2 = np.float32([[0, 0], [0, h2], [w2, h2], [w2, 0]]).reshape(-1, 1, 2)
    pts2_ = cv2.perspectiveTransform(pts2, H)

    # bounding box of both images in image1's coordinate frame
    pts = np.concatenate((pts1, pts2_), axis=0)
    [xmin, ymin] = np.int32(pts.min(axis=0).ravel() - 0.5)
    [xmax, ymax] = np.int32(pts.max(axis=0).ravel() + 0.5)
    t = [-xmin, -ymin]
    Ht = np.array([[1, 0, t[0]], [0, 1, t[1]], [0, 0, 1]])  # translate into positive coordinates

    result = cv2.warpPerspective(image2, Ht.dot(H), (xmax - xmin, ymax - ymin))
    resizedB = np.zeros((result.shape[0], result.shape[1], 3), np.uint8)
    resizedB[t[1]:t[1] + h1, t[0]:w1 + t[0]] = image1

    # mask of the warped image2 (non-black pixels) and its inverse,
    # eroded to trim the dark interpolation border
    img2gray = cv2.cvtColor(result, cv2.COLOR_BGR2GRAY)
    ret, mask = cv2.threshold(img2gray, 0, 255, cv2.THRESH_BINARY)
    kernel = np.ones((5, 5), np.uint8)
    mask = cv2.erode(mask, kernel, borderType=cv2.BORDER_CONSTANT)
    mask_inv = cv2.bitwise_not(mask)

    # keep image1 where image2 is absent, image2 elsewhere, then combine
    difference = cv2.bitwise_or(resizedB, resizedB, mask=mask_inv)
    result2 = cv2.bitwise_and(result, result, mask=mask)
    result = cv2.add(result2, difference)
    return result
```
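One way to narrow the problem down (a diagnostic sketch, not a confirmed fix) is to reject the `H` returned by `cv2.findHomography` before warping when it maps the new image to an implausible shape. The function `homography_looks_sane` and its `max_area_ratio` threshold below are hypothetical; the checks are common heuristics, written in plain NumPy so they can be tested without images.

```python
import numpy as np


def homography_looks_sane(H, w, h, max_area_ratio=4.0):
    """Heuristic check that H maps a w x h image to a plausible quadrilateral.

    The checks and the max_area_ratio threshold are illustrative assumptions.
    """
    if H is None:  # cv2.findHomography returns None when it cannot fit a model
        return False
    H = np.asarray(H, dtype=np.float64)

    # Homogeneous corners, in the same order as pts2 in the question's code
    corners = np.array([[0, 0, 1], [0, h, 1], [w, h, 1], [w, 0, 1]], dtype=np.float64).T
    mapped = H @ corners

    # A non-positive third coordinate means a corner is sent to infinity
    # or wrapped around, which produces huge or folded warps
    if np.any(mapped[2] <= 0):
        return False

    pts = (mapped[:2] / mapped[2]).T  # shape (4, 2)

    # In image coordinates (y pointing down) this corner order gives negative
    # edge cross products; all four must stay negative. A mix of signs means a
    # folded or non-convex quadrilateral, all positive means a mirrored one.
    cross = []
    for i in range(4):
        a, b, c = pts[i], pts[(i + 1) % 4], pts[(i + 2) % 4]
        ab, bc = b - a, c - b
        cross.append(ab[0] * bc[1] - ab[1] * bc[0])
    if not np.all(np.array(cross) < 0):
        return False

    # Shoelace area of the warped quadrilateral relative to the original image
    x, y = pts[:, 0], pts[:, 1]
    area = 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))
    ratio = area / float(w * h)
    return 1.0 / max_area_ratio <= ratio <= max_area_ratio
```

In the function above this would sit right after the `findHomography` call, for example `if not homography_looks_sane(H, w2, h2): return image1`, so that a bad estimate for one image is skipped and printed instead of silently distorting the whole panorama.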

Edit:

This image shows the match drawing when stitching image 25 onto the result of images 1-24:

And the match drawing from the step before that:

I have 97 images to stitch in total. If I stitch images 24 and 25 on their own, they stitch properly. If I start stitching from the 23rd image onwards, the stitching is also fine. The problem only appears when I start from the 1st image, and I can't understand why.
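Since every pair stitches correctly on its own, one commonly used alternative (not what the code above does) is to estimate homographies only between consecutive original images and chain them to a single reference image, instead of matching each new image against the growing panorama. The sketch below assumes a hypothetical convention: `pairwise[i]` maps points of image `i+1` into image `i`, as `cv2.findHomography(src=image i+1 points, dst=image i points)` would return. The function name `chain_to_reference` is illustrative.

```python
import numpy as np


def chain_to_reference(pairwise, ref):
    """Compose pairwise homographies into homographies to one reference image.

    Assumed convention (hypothetical): pairwise[i] maps image i+1 into image i.
    Returns a list whose entry k maps image k into image `ref`.
    """
    n = len(pairwise) + 1
    to_ref = [None] * n
    to_ref[ref] = np.eye(3)
    # Images after the reference: k -> k-1 -> ... -> ref
    for k in range(ref + 1, n):
        to_ref[k] = to_ref[k - 1] @ pairwise[k - 1]
    # Images before the reference: k -> k+1 -> ... -> ref (inverse direction)
    for k in range(ref - 1, -1, -1):
        to_ref[k] = to_ref[k + 1] @ np.linalg.inv(pairwise[k])
    # Normalise so the bottom-right entry is 1, as findHomography does
    return [H / H[2, 2] for H in to_ref]


# Toy example: each image is 100 px to the right of the previous one
shift = np.array([[1, 0, 100], [0, 1, 0], [0, 0, 1]], dtype=np.float64)
Hs = chain_to_reference([shift] * 4, ref=2)
# Hs[4][:2, 2] is (200, 0) and Hs[0][:2, 2] is (-200, 0)
```

Choosing a reference near the middle (say image 48 of 97) keeps each chain roughly half as long. Each pair is also matched on two original images, so the 400-keypoint cap in `cv2.SIFT_create(400)` is not spread over an ever-larger panorama. Each image would then be warped with its `to_ref` homography into one shared canvas.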

Result after stitching the 23rd image:

Result after stitching the 24th image:

The result after stitching the 25th image is the broken output shown at the top.

Strange observation: if I stitch images 23, 24, and 25 on their own with the same code, they stitch correctly. If I stitch images 23 through 97, they also stitch correctly. But if I start from the 1st image, it breaks when stitching the 25th image. I don't understand why this happens.
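A possible factor, offered as a hypothesis rather than a diagnosis: when each new image is matched against the panorama built so far, the 25th homography is estimated against a canvas that already carries the residual errors of 24 earlier warps, which behaves roughly like chaining 24 homographies. Small perspective errors compound under chaining. The toy below uses made-up numbers (a 100 px shift per frame and a 1e-5 error in the perspective term `H[2, 0]` of every step) to show how a fixed tiny error grows with the chain length.

```python
import numpy as np


def warped_corners(H, w, h):
    """Map the four corners of a w x h image through homography H."""
    corners = np.array([[0, 0, 1], [0, h, 1], [w, h, 1], [w, 0, 1]], dtype=np.float64).T
    mapped = H @ corners
    return (mapped[:2] / mapped[2]).T


# Made-up numbers: every frame is shifted 100 px right of the previous one,
# and each pairwise estimate carries a tiny perspective error of 1e-5.
true_step = np.array([[1, 0, 100], [0, 1, 0], [0, 0, 1]], dtype=np.float64)
noisy_step = true_step.copy()
noisy_step[2, 0] = 1e-5


def corner_drift(n, w=640, h=480):
    """Largest corner displacement (px) after chaining n noisy steps vs n exact ones."""
    ideal = warped_corners(np.linalg.matrix_power(true_step, n), w, h)
    estimated = warped_corners(np.linalg.matrix_power(noisy_step, n), w, h)
    return np.abs(ideal - estimated).max()


for n in (5, 15, 25):
    print(n, round(float(corner_drift(n)), 1))
```

Under these assumptions the drift after 25 steps is far larger than after 5: the error in the third row makes the homogeneous scale grow with every step, so later frames lag horizontally and shrink vertically. Whether this explains this particular panorama would still need checking, for example by printing each estimated `H` along the way.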

I have tried different combinations: other keypoint detectors and descriptor extractors, other matching methods, other ways of computing the homography, and different warping code, but none of them worked. Something is missing or wrong in how the steps are combined, and I can't figure out what.

Sorry for the long question. I am completely new to this, so I may not be explaining things well. Thanks for your help and guidance.

Result of stitching images 23, 24, and 25 on their own with the SAME code:

With a different version of the code (which leaves black lines between the stitched images), stitching all 97 images makes the 25th image shift upward in the panorama, as shown below (right corner point):