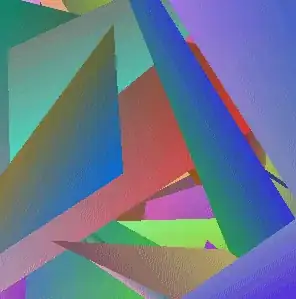

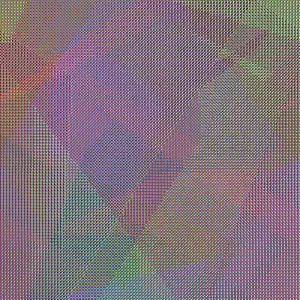

I want to capture an image I rendered in OpenGL. I use glReadPixels and then save the image with CImg. Unfortunately, the result is wrong. See the two images above. The image on the left is correct; I captured it with GadWin PrintScreen. The image on the right is incorrect; I created it with glReadPixels and CImg.

I've done a lot of web research on what might be wrong, but I'm out of avenues to pursue. Here is the code that captures the image:

    void snapshot() {
        int width = glutGet(GLUT_WINDOW_WIDTH);
        int height = glutGet(GLUT_WINDOW_HEIGHT);

        glPixelStorei(GL_PACK_ROW_LENGTH, 0);
        glPixelStorei(GL_PACK_SKIP_PIXELS, 0);
        glPixelStorei(GL_PACK_SKIP_ROWS, 0);
        glPixelStorei(GL_PACK_ALIGNMENT, 1);

        int bytes = width*height*3; // Color space is RGB
        GLubyte *buffer = (GLubyte *)malloc(bytes);

        glFinish();
        glReadPixels(0, 0, width, height, GL_RGB, GL_UNSIGNED_BYTE, buffer);
        glFinish();

        CImg<GLubyte> img(buffer,width,height,1,3,false);
        img.save("ScreenShot.ppm");
        exit(0);
    }

Here is where I call the snapshot method:

    void display(void) {
        glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
        drawIndividual(0);
        snapshot();
        glutSwapBuffers();
    }

Following up on a comment, I queried the bit depths and printed them to the console. Here are the results:

    redbits=8
    greenbits=8
    bluebits=8
    depthbits=24
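
One avenue that might be worth checking is the memory layout of the buffer. glReadPixels with GL_RGB writes interleaved pixels (RGBRGB...) starting from the bottom row of the window, while CImg, as far as I can tell from its documentation, stores each channel as a separate plane (RRR...GGG...BBB...) with the top row first. Below is a minimal sketch of converting between the two layouts. `interleavedToPlanar` is a hypothetical helper made up for illustration, not part of OpenGL or CImg, and it assumes tightly packed 8-bit RGB (GL_PACK_ALIGNMENT of 1, as in snapshot() above).

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of OpenGL or CImg).
// Input:  interleaved RGB, bottom row first, tightly packed
//         (the layout assumed for glReadPixels with GL_RGB / GL_UNSIGNED_BYTE).
// Output: planar RGB, top row first (all R values, then all G, then all B).
std::vector<unsigned char> interleavedToPlanar(const unsigned char* src,
                                               int width, int height) {
    const std::size_t plane = static_cast<std::size_t>(width) * height;
    std::vector<unsigned char> dst(plane * 3);

    for (int y = 0; y < height; ++y) {
        // Flip vertically: output row y comes from input row (height - 1 - y).
        const std::size_t srcRow = static_cast<std::size_t>(height - 1 - y);
        for (int x = 0; x < width; ++x) {
            const std::size_t s = (srcRow * width + x) * 3;                   // interleaved index
            const std::size_t d = static_cast<std::size_t>(y) * width + x;    // index within a plane
            dst[d]             = src[s];      // red plane
            dst[plane + d]     = src[s + 1];  // green plane
            dst[2 * plane + d] = src[s + 2];  // blue plane
        }
    }
    return dst;
}
```

If this is the cause, the converted data could be passed to the same constructor used in snapshot(), for example `CImg<GLubyte> img(planar.data(),width,height,1,3,false);` where `planar` is the vector returned by the helper. I have not confirmed that this accounts for the incorrect image.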