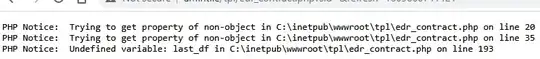

The best time I got was 8 seconds; fast-json-stringify gave me a boost of more than 10 seconds over 300k records:

```js
'use strict'

// run fresh mongo
// docker run --name temp --rm -p 27017:27017 mongo

const fastify = require('fastify')({ logger: true })
const fjs = require('fast-json-stringify')

const toString = fjs({
  type: 'object',
  properties: {
    playerId: { type: 'integer' },
    name: { type: 'string' },
    surname: { type: 'string' },
    shirtNumber: { type: 'integer' },
  }
})

fastify.register(require('fastify-mongodb'), {
  forceClose: true,
  url: 'mongodb://localhost/mydb'
})

fastify.get('/', (request, reply) => {
  const dataStream = fastify.mongo.db.collection('foo')
    .find({}, {
      limit: 300000,
      projection: { playerId: 1, name: 1, surname: 1, shirtNumber: 1, position: 1 }
    })
    .stream({
      transform(doc) {
        return toString(doc) + '\n'
      }
    })

  reply.type('application/jsonl')
  reply.send(dataStream)
})

fastify.get('/insert', async (request, reply) => {
  const collection = fastify.mongo.db.collection('foo')
  const batch = collection.initializeOrderedBulkOp();
  for (let i = 0; i < 300000; i++) {
    const player = {
      playerId: i,
      name: `Name ${i}`,
      surname: `surname ${i}`,
      shirtNumber: i
    }
    batch.insert(player);
  }
  const { result } = await batch.execute()
  return result
})

fastify.listen(8080)
```

In any case, you should consider:

- paginating your output

- or pushing the data into a bucket (like S3) and returning a URL to the client so it can download the file directly; this speeds up the process a lot and spares your Node.js process from streaming all this data
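
For the pagination option, here is a minimal sketch, not a definitive implementation. It assumes `playerId` is unique and indexed, so each page can start after the last id the client received (keyset pagination) instead of using `skip`. The helper names (`buildPageQuery`, `nextCursor`, `registerPagedRoute`), the `/players` route, the `after` and `pageSize` query parameters, and the size limits are all hypothetical and chosen only for illustration.

```javascript
'use strict'

// Hypothetical helper: builds the MongoDB filter and find() options for one page.
// Assumes playerId is unique and indexed (keyset pagination).
function buildPageQuery (query, { defaultSize = 1000, maxSize = 10000 } = {}) {
  // Clamp the requested page size so a client cannot ask for everything at once.
  const requested = Number.parseInt(query.pageSize, 10)
  const limit = Number.isInteger(requested) && requested > 0
    ? Math.min(requested, maxSize)
    : defaultSize

  // `after` is the last playerId of the previous page; without it we start from the beginning.
  const after = Number.parseInt(query.after, 10)
  const filter = Number.isInteger(after) ? { playerId: { $gt: after } } : {}

  return {
    filter,
    options: {
      limit,
      sort: { playerId: 1 },
      projection: { _id: 0, playerId: 1, name: 1, surname: 1, shirtNumber: 1 }
    }
  }
}

// Hypothetical helper: the value the client sends back as `after` for the next page,
// or null when the page came back short and there is probably nothing left.
function nextCursor (docs, limit) {
  return docs.length === limit ? docs[docs.length - 1].playerId : null
}

// Sketch of a route using the helpers; `fastify` is an instance with
// fastify-mongodb registered, as in the example above.
function registerPagedRoute (fastify) {
  fastify.get('/players', async (request) => {
    const { filter, options } = buildPageQuery(request.query)
    const docs = await fastify.mongo.db.collection('foo').find(filter, options).toArray()
    return { data: docs, next: nextCursor(docs, options.limit) }
  })
}
```

A client would then call `/players?pageSize=5000`, read `next` from the response, and request `/players?pageSize=5000&after=<next>` until `next` is `null`. Each response stays small, so serialization time and memory stay bounded per request.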

Note that compression in Node.js is a heavy process, so it slows down the response a lot. An nginx proxy can add it for you (via its `gzip` directive) without the need to implement it in your business logic server.