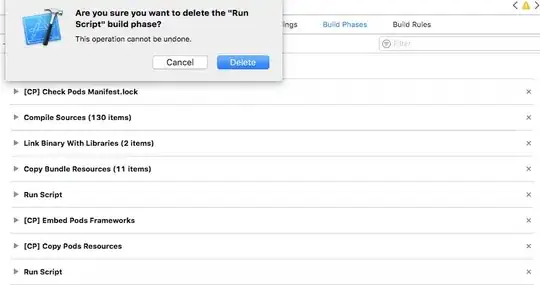

I've got a neural style transfer model. I'm currently trying to use different parts of an image to transfer different pictures. How can I get the model to use only the colours present in the style image? Below is an example:

The picture above is the style image, which I got by applying thresholding to the original image. The transferred picture is below:

It has clearly transferred some of the black parts of the style image, but I only want the non-black colours to be transferred. Below is the code for my model:

    import os

    import torch
    import torch.nn as nn
    import torch.optim as optim
    import torchvision.models as models
    import torchvision.transforms as transforms
    from PIL import Image
    from torchvision.utils import save_image


    class VGG(nn.Module):
        def __init__(self):
            super(VGG, self).__init__()
            # Indices of the conv layers whose activations are used as features
            self.chosen_features = ["0", "5", "10", "19", "28"]
            # Layers after index 28 are not needed.
            # (Newer torchvision versions prefer the weights= argument over pretrained=True.)
            self.model = models.vgg19(pretrained=True).features[:29]

        def forward(self, x):
            # Store relevant features
            features = []
            for layer_num, layer in enumerate(self.model):
                x = layer(x)
                if str(layer_num) in self.chosen_features:
                    features.append(x)
            return features


    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    imsize = 384
    loader = transforms.Compose(
        [
            transforms.Resize((imsize, imsize)),
            transforms.ToTensor(),
            # transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
        ]
    )


    def load_image(image_name):
        # Force 3 channels so grayscale or RGBA files do not break VGG's first conv layer
        image = Image.open(image_name).convert("RGB")
        image = loader(image).unsqueeze(0)
        return image.to(device)


    original_img = load_image("Content Image.jpg")
    style_img = load_image("Adaptive Image 2.jpg")

    # initialized generated as white noise or clone of original image.
    # Clone seemed to work better for me.
    generated = original_img.clone().requires_grad_(True)
    # generated = load_image("20epoctom.png")

    model = VGG().to(device).eval()

    # Hyperparameters
    total_steps = 10000
    learning_rate = 0.001
    alpha = 1
    beta = 0.01
    optimizer = optim.Adam([generated], lr=learning_rate)

    # The content and style images never change, so their features are computed once,
    # without tracking gradients
    with torch.no_grad():
        original_img_features = model(original_img)
        style_features = model(style_img)

    # save_image fails if the output directory does not exist
    os.makedirs("Generated Pictures", exist_ok=True)

    for step in range(total_steps):
        # Obtain the convolution features in specifically chosen layers
        generated_features = model(generated)

        # Loss is 0 initially
        style_loss = original_loss = 0

        # iterate through all the features for the chosen layers
        for gen_feature, orig_feature, style_feature in zip(
            generated_features, original_img_features, style_features
        ):
            # batch_size will just be 1
            batch_size, channel, height, width = gen_feature.shape
            original_loss += torch.mean((gen_feature - orig_feature) ** 2)

            # Compute Gram Matrix of generated
            G = gen_feature.view(channel, height * width).mm(
                gen_feature.view(channel, height * width).t()
            )

            # Compute Gram Matrix of Style
            A = style_feature.view(channel, height * width).mm(
                style_feature.view(channel, height * width).t()
            )

            style_loss += torch.mean((G - A) ** 2)

        total_loss = alpha * original_loss + beta * style_loss
        optimizer.zero_grad()
        total_loss.backward()
        optimizer.step()

        if step % 500 == 0:
            print(total_loss.item())
            save_image(generated, f"Generated Pictures/{step//500} Iterations Generated Picture.png")

Any ideas on where to go from here would also be appreciated!
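One possible direction is to exclude the black pixels from the style statistics by masking the style features before the Gram matrix is computed. What follows is a minimal sketch rather than a tested solution. The helper names `non_black_mask` and `masked_gram` and the `threshold` value are hypothetical and not part of the code above, and the sketch assumes the style image is a `(1, 3, H, W)` tensor in `[0, 1]`, as produced by `transforms.ToTensor()`.

```python
import torch
import torch.nn.functional as F


def non_black_mask(img, threshold=0.05):
    """Return a (1, 1, H, W) float mask: 1 where a pixel is not (near) black.

    `threshold` is an assumed cut-off and would need tuning per image.
    """
    # A pixel counts as non-black if its brightest channel exceeds the threshold
    return (img.max(dim=1, keepdim=True).values > threshold).float()


def masked_gram(feature, mask):
    """Gram matrix of `feature` using only the spatial positions kept by `mask`."""
    _, channel, height, width = feature.shape
    # Resize the image-sized mask to this layer's spatial resolution
    m = F.interpolate(mask, size=(height, width), mode="nearest")
    # Zero out features at masked positions so they add nothing to the Gram matrix
    flat = (feature * m).reshape(channel, height * width)
    # Divide by the number of kept positions so masks of different area are comparable
    return flat.mm(flat.t()) / m.sum().clamp(min=1.0)
```

In the loop above, `A` could then be `masked_gram(style_feature, non_black_mask(style_img))`, and `G` could be `masked_gram(gen_feature, torch.ones_like(generated[:, :1]))` so that both matrices share the same normalisation. Because the Gram matrices are now divided by the number of positions, `beta` would likely need retuning. Features close to the mask boundary still see some black pixels through VGG's receptive field, so this only approximates "non-black colours only".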