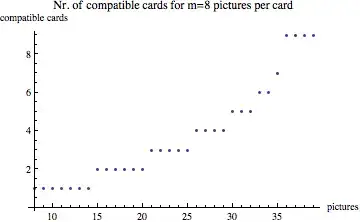

I generated a random curve and tried to fit it with SciPy's `curve_fit`, but the fit fails.

First, I generated an exponentially decaying cosine, where `A`, `w`, and `T2` are drawn at random using NumPy:

    def expDec(t, A, w, T2):
        return A * np.cos(w * t) * (2.718**(-t / T2))

Now I have SciPy guess the best fit curve:

    t = x['Input'].values
    hr = x['Output'].values
    c, cov = curve_fit(bpm, t, hr)

Then I plot the curve:

    for i in range(n):
        y[i] = bpm(x['Input'][i], c[0], c[1], c[2])

    plt.plot(x['Input'], x['Output'])
    plt.plot(x['Input'], y)

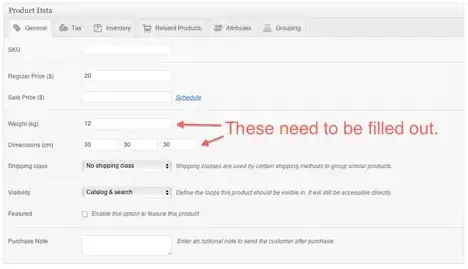

That's it. Here's how bad the fit looks:

If anyone can help, that would be great.

MWE (Also available interactively here)

    import matplotlib.pyplot as plt
    import numpy as np
    import pandas as pd
    from scipy.optimize import curve_fit

    inputs = []
    outputs = []

    # THIS GIVES THE DOMAIN
    dom = np.linspace(-5, 5, 100)

    # FUNCTION & PARAMETERS (RANDOMLY SELECTED)
    A = np.random.uniform(3, 6)
    w = np.random.uniform(3, 6)
    T2 = np.random.uniform(3, 6)
    y = A * np.cos(w * dom) * (2.718**(-dom / T2))

    # DEFINES EXPONENTIAL DECAY FUNCTION
    def expDec(t, A, w, T2):
        return A * np.cos(w * t) * (2.718**(-t / T2))

    # SETS UP FIGURE FOR PLOTTING
    fig = plt.figure()
    ax = fig.add_subplot(1, 1, 1)

    # PLOTS THE FUNCTION
    plt.plot(dom, y, 'r')

    # SHOW THE PLOT
    plt.show()

    # SAMPLE THE FUNCTION AT THE INTEGERS -9..9
    for i in range(-9, 10):
        inputs.append(i)
        outputs.append(expDec(i, A, w, T2))

    # PUT IT DIRECTLY IN A PANDAS DATAFRAME
    points = {'Input': inputs, 'Output': outputs}
    x = pd.DataFrame(points, columns = ['Input', 'Output'])

    # FUNCTION WHOSE PARAMETERS PROGRAM SHOULD BE GUESSING
    def bpm(t, A, w, T2):
        return A * np.cos(w * t) * (2.718**(-t / T2))

    # INPUT & OUTPUTS
    t = x['Input'].values
    hr = x['Output'].values

    # USE SCIPY CURVE FIT TO USE NONLINEAR LEAST SQUARES TO FIND BEST PARAMETERS.
    # ALLOW AT MOST 1000 FUNCTION EVALUATIONS BEFORE STOPPING.
    constants = curve_fit(bpm, t, hr, maxfev=1000)

    # GET CONSTANTS FROM CURVE_FIT
    A_fit = constants[0][0]
    w_fit = constants[0][1]
    T2_fit = constants[0][2]

    # CREATE ARRAY TO HOLD FITTED OUTPUT
    fit = []

    # APPEND OUTPUT TO FIT=[] ARRAY
    for i in range(-9,10):
        fit.append(bpm(i, A_fit, w_fit, T2_fit))

    # PLOTS BEST PARAMETERS
    plt.plot(x['Input'], x['Output'])
    plt.plot(x['Input'], fit, "ro-")
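
For comparison, here is a minimal sketch of the same fit with an explicit starting guess. When `p0` is omitted, `curve_fit` starts every parameter at 1, which is far from values drawn from the 3 to 6 range. The names `model`, `true_params`, and `p0`, the fixed (non-random) parameter values, and the dense 200-point grid are all assumptions made for illustration; they are not part of the MWE above.

```python
import numpy as np
from scipy.optimize import curve_fit

# Same functional form as bpm/expDec, written with np.exp (illustrative name).
def model(t, A, w, T2):
    return A * np.cos(w * t) * np.exp(-t / T2)

# Fixed, hypothetical "true" parameters so the sketch is reproducible.
true_params = (4.0, 4.5, 4.0)

# Assumption: a dense grid instead of the integer samples used in the MWE.
t = np.linspace(-5, 5, 200)
y = model(t, *true_params)

# Assumption: a starting guess reasonably close to the true values,
# in particular for the frequency w.
p0 = (3.5, 4.45, 4.5)

popt, pcov = curve_fit(model, t, y, p0=p0, maxfev=1000)
print(popt)
```

In this sketch the data are noise-free and the guess for `w` is deliberately close to the true value; how close the guess needs to be for the randomly generated, integer-sampled data in the MWE is not something this example establishes.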