-EDIT-

This simple example shows only 3 records, but I need to do this for billions of records, so I need to use a Pandas UDF rather than converting the Spark DF to a Pandas DF and using a simple apply.

Input Data

Desired Output

-END EDIT-

I've been banging my head against a wall trying to solve this, and I'm hoping someone can help. I'm trying to convert latitude/longitude values in a PySpark dataframe to Uber's H3 hex system. This is a pretty straightforward use of the function h3.geo_to_h3(lat=lat, lng=lon, resolution=7). However, I keep having issues with my PySpark cluster.

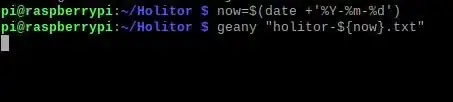

I'm setting up my PySpark cluster as described in the Databricks article here, using the following commands:

    conda create -y -n pyspark_conda_env -c conda-forge pyarrow pandas h3 numpy python=3.7 conda-pack
    conda init --all

then closing and reopening the terminal window, followed by:

    conda activate pyspark_conda_env
    conda pack -f -o pyspark_conda_env.tar.gz

I include the tar.gz file I created when creating my Spark session in my Jupyter notebook, like so:

    spark = SparkSession.builder.master("yarn").appName("test").config("spark.yarn.dist.archives","<path>/pyspark_conda_env.tar.gz#environment").getOrCreate()
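For reference, as I understand the packaging approach, the `#environment` suffix is the directory name the archive is unpacked under on each executor, and the executors' interpreter is then pointed at it through the PYSPARK_PYTHON environment variable. A minimal sketch of deriving that path; `executor_python` is a hypothetical helper name, and the `<path>` placeholder is left exactly as above:

```python
import os


def executor_python(archive_spec: str) -> str:
    """Return the relative interpreter path for an archive given as 'file.tar.gz#alias'."""
    _, _, alias = archive_spec.partition("#")
    if not alias:
        raise ValueError("archive spec needs a '#alias' suffix")
    # Conda-pack archives keep the interpreter under bin/python
    return f"./{alias}/bin/python"


archive = "<path>/pyspark_conda_env.tar.gz#environment"  # placeholder path, not a real location

# Assumption: this is set before the SparkSession is created
os.environ["PYSPARK_PYTHON"] = executor_python(archive)
print(os.environ["PYSPARK_PYTHON"])  # ./environment/bin/python
```

Whether this variable is set correctly on every worker is one thing that can differ between a single-node setup and a multi-node YARN cluster.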

My pandas UDF is set up as shown below. I was able to get it working on a single-node Spark cluster, but I'm now having trouble on a cluster with multiple worker nodes:

    import pandas as pd
    import pyspark.sql.functions as f

    # Convert a single lat/lon pair to an H3 hex id (scalar inputs, not Series)
    def convert_to_h3(lat, lon):
        import h3  # imported inside the function so it resolves on the executors
        # Missing coordinates (None or NaN) have no hex
        if pd.isna(lat) or pd.isna(lon):
            return None
        return h3.geo_to_h3(lat=lat, lng=lon, resolution=7)

    # Pandas UDF: apply the scalar conversion row by row over each batch
    @f.pandas_udf('string', f.PandasUDFType.SCALAR)
    def udf_convert_to_h3(lat: pd.Series, lon: pd.Series) -> pd.Series:
        df = pd.DataFrame({'lat': lat, 'lon': lon})
        df['h3_res7'] = df.apply(lambda x: convert_to_h3(x['lat'], x['lon']), axis=1)
        return df['h3_res7']
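To check the conversion logic without a cluster, the per-batch step can be pulled into a plain pandas helper and run locally. This is only a sketch: `latlon_to_cells` and its `cell_fn` parameter are hypothetical names, not part of h3 or PySpark. By default it falls back to h3.geo_to_h3 (the h3-py 3.x name used above), and a stub can be passed in when h3 is not installed.

```python
import pandas as pd


def latlon_to_cells(lat: pd.Series, lon: pd.Series, cell_fn=None, resolution: int = 7) -> pd.Series:
    """Map paired lat/lon Series to cell ids, leaving missing coordinates as None."""
    if cell_fn is None:
        # Lazy import so h3 is only required where the conversion actually runs
        import h3
        cell_fn = h3.geo_to_h3  # assumption: h3-py 3.x API, as in the question
    cells = [
        None if pd.isna(la) or pd.isna(lo) else cell_fn(la, lo, resolution)
        for la, lo in zip(lat, lon)
    ]
    # Keep the input index so the result lines up with the original batch
    return pd.Series(cells, index=lat.index, dtype="object")


# Local check with a stand-in for the h3 call
stub = lambda la, lo, res: f"{la:.1f}_{lo:.1f}_{res}"
result = latlon_to_cells(
    pd.Series([1.0, None, 3.0]),
    pd.Series([2.0, 5.0, float("nan")]),
    cell_fn=stub,
)
print(result.tolist())  # ['1.0_2.0_7', None, None]
```

If this behaves as expected locally, the remaining failure is more likely in the cluster environment than in the conversion logic itself.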

After creating the new column with the pandas udf and trying to view it:

    trip_starts = trip_starts.withColumn('h3_res7', udf_convert_to_h3(f.col('latitude'), f.col('longitude')))

I get the following error:

21/07/15 20:05:22 WARN YarnSchedulerBackend$YarnSchedulerEndpoint: Requesting driver to remove executor 139 for reason Container marked as failed: container_1626376534301_0015_01_000158 on host: ip-xx-xxx-xx-xxx.aws.com. Exit status: -100. Diagnostics: Container released on a *lost* node.

I'm not sure what to do here, as I've tried scaling down the number of records to a more manageable number and am still running into this issue. Ideally, I would like to figure out how to use the PySpark environments as described in the Databricks blog post I linked, rather than running a bootstrap script when spinning up the cluster, since company policies make bootstrap scripts more difficult to run.