I have a tab-delimited file saved as a .txt, with double quotes (" ") around the string values. The file can be found here.

I am trying to read it into SparkR (version 3.1.2), but I cannot load it into the environment. I've tried variations of the `read.df` call, like these:

```r
df <- read.df(path = "FILE.txt", header = "True", inferSchema = "True", delimiter = "\t", encoding = "ISO-8859-15")

df <- read.df(path = "FILE.txt", source = "txt", header = "True", inferSchema = "True", delimiter = "\t", encoding = "ISO-8859-15")
```
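For comparison, here is a minimal sketch of the same call routed through Spark's built-in `csv` data source, which accepts a tab as its field separator. Note that when `source` is omitted, `read.df` falls back to `spark.sql.sources.default` (Parquet unless configured otherwise), and `"txt"` is not a data source name Spark recognizes. The sketch assumes a local SparkR session and writes a small hypothetical sample file in place of FILE.txt; `tsv_path` and the sample rows are illustrative only.

```r
library(SparkR)
sparkR.session(master = "local[1]")

# Hypothetical stand-in for FILE.txt: header row, tab-separated fields,
# string values wrapped in double quotes.
tsv_path <- tempfile(fileext = ".txt")
writeLines(c('"id"\t"name"\t"score"',
             '1\t"alpha"\t2.5',
             '2\t"beta, gamma"\t3.0'), tsv_path)

# Read the tab-delimited text through the "csv" source; sep sets the
# field separator and quote sets the string quote character.
df <- read.df(path = tsv_path, source = "csv",
              header = "true", inferSchema = "true",
              sep = "\t", quote = "\"",
              encoding = "ISO-8859-15")

printSchema(df)
local_df <- collect(df)  # small sample only; avoid collect() on 10 GB files
```

With a real multi-gigabyte file, only the `read.df` call would be kept; the data stays distributed in Spark rather than being pulled into R memory.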

I have had success bringing in CSVs with `read.csv`, but many of my files are over 10 GB, so it is not practical to convert them to CSV before bringing them into SparkR.

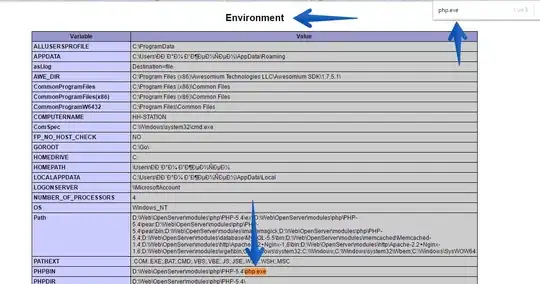

EDIT: When I run read.df I get a laundry list of errors, starting with this:

I am able to bring in CSV files from a previous project with both `read.df` and `read.csv`, so I don't think it's a Java issue.