We have a scheduled cleanup job that removes records that have a timestamp in the past. We select them in batches of 10’000 and delete them by primary key (composite key integer and timestamp). This job might delete up to 200’000 records within a few seconds.

We can consistently replicate that immediately after running this job the CPU load on all the readers goes to 100% (and therefore blocks the application). We can also consistently see that running VACUUM brings the load back to normal, immediately.

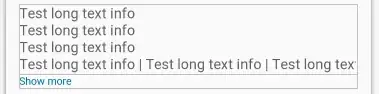

CPU load: batch-delete and VACUUM:

Is this a known issue? Anything we can do to avoid the 100% CPU spike?

Setup

- Aurora (AWS) postgreSQL 12.6 cluster

- 1 writer, 2 readers (db.r5.2xlarge)

- Database

- DB size: 50 GB

- Index size: 30 GB

- One table:

- Primary key is a composite key (integer, timestamp)

- Additional index on the timestamp column (in order to select records in the past)

- Load

- CPU on writer: 15%-25%

- CPU on readers: 5%

- Commit throughput

- 300 count / seconds on the writer

- 300 count / seconds on each reader

Auto-Vaccum settings:

| Name | Value |

|---|---|

| autovacuum | on |

| log_autovacuum_min_duration | 10s |

| autovacuum_max_workers | 3 |

| autovacuum_naptime | 5s |

| autovacuum_vacuum_threshold | 50 |

| autovacuum_analyze_threshold | 50 |

| autovacuum_vacuum_scale_factor | 0.1 |

| autovacuum_analyze_scale_factor | 0.05 |

| autovacuum_freeze_max_age | 200000000 |

| autovacuum_vacuum_cost_delay | 5ms |

| autovacuum_vacuum_cost_limit | 1200 |