I have a feedforward regression network (in Keras with the TensorFlow backend) with a single hidden layer (30 neurons) and an output layer with 2 neurons (for the imaginary and real parts of a complex signal). My question is: how exactly is the MSE loss calculated, given that I get only one number per epoch in the `History` object? Eventually I would like to extract a separate loss value per output neuron for each epoch. Is that possible in Keras?


1 Answer


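Losses are calculated for every batch, and those batch losses are then averaged into an epoch loss, which is the single number you are given. For a 2-neuron output, Keras' MSE first averages the squared errors of both neurons for each sample and then averages over the samples in the batch, so the two neurons never get separate values. Since the weights are updated after every batch, the epoch value is an average over the whole epoch of training rather than the loss of the final weights.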
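The reduction described above can be reproduced by hand. The sketch below uses NumPy with made-up numbers (one batch of 3 samples, column 0 = real part, column 1 = imaginary part) and assumes Keras' default MSE reduction; the extra batch losses and batch sizes in the epoch step are hypothetical as well.

```python
import numpy as np

# Made-up batch of 3 samples; column 0 = real part, column 1 = imaginary part
y_true = np.array([[1.0, 0.0],
                   [0.0, 1.0],
                   [2.0, 2.0]])
y_pred = np.array([[0.5, 0.0],
                   [0.0, 0.0],
                   [2.0, 1.0]])

sq_err = (y_true - y_pred) ** 2      # shape (3, 2)

# Keras MSE: mean over the last axis (both output neurons) for each sample...
per_sample = sq_err.mean(axis=-1)    # shape (3,)
# ...then mean over the samples to get the single loss of this batch
batch_loss = per_sample.mean()
print(batch_loss)                    # 0.375

# Per-neuron MSE, which a single 2-unit output does not report separately
per_neuron = sq_err.mean(axis=0)     # [MSE_real, MSE_imag]

# Epoch value: average of the batch losses. Assumption: recent tf.keras
# versions weight each batch by its size; these losses/sizes are made up.
batch_losses = np.array([batch_loss, 0.5, 0.25])
batch_sizes = np.array([3, 3, 2])
epoch_loss = np.average(batch_losses, weights=batch_sizes)
print(epoch_loss)                    # 0.390625
```

Averaging `per_neuron` gives back `batch_loss`, which is why the single reported number hides how each neuron performs on its own.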

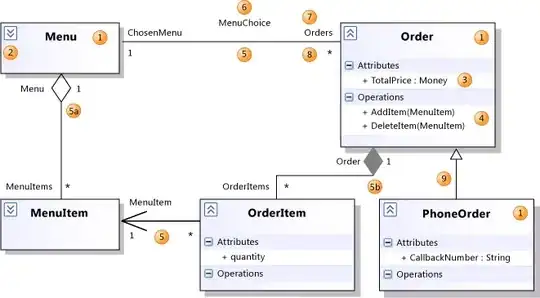

If you want the loss for each output neuron separately, I think you will have to split your output layer into two output layers with one neuron each (as illustrated in the image). You can then assign a loss function to each output, and Keras will report the loss values of both neurons. Note that you will also have to split your ground truth into two arrays, since the model now has two outputs instead of one.

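The code could look like this:

```python
import tensorflow as tf

input_shape = (10,)  # placeholder: number of input features

inputs = tf.keras.layers.Input(shape=input_shape)
x = tf.keras.layers.Dense(30)(inputs)  # single hidden layer, 30 neurons

# Two 1-neuron output layers instead of one 2-neuron layer (names are illustrative)
y1 = tf.keras.layers.Dense(1, name="real")(x)  # real part
y2 = tf.keras.layers.Dense(1, name="imag")(x)  # imaginary part

model = tf.keras.Model(inputs=inputs, outputs=[y1, y2])

# One MSE loss per output, so each output's loss is tracked separately
loss = [tf.keras.losses.MeanSquaredError(), tf.keras.losses.MeanSquaredError()]
model.compile(optimizer="adam", loss=loss)  # optimizer choice is arbitrary here
```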
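Splitting the ground truth to match the two outputs can look like this, assuming (hypothetically) that the targets used with the original 2-neuron model are an `(n_samples, 2)` NumPy array with the real part in column 0 and the imaginary part in column 1:

```python
import numpy as np

# Hypothetical targets for the original 2-neuron model:
# column 0 = real part, column 1 = imaginary part
y = np.array([[0.5, -1.0],
              [1.5, 2.0],
              [-0.5, 0.25]], dtype="float32")

# Slicing with 0:1 and 1:2 (instead of 0 and 1) keeps each array 2-D,
# shape (n_samples, 1), which matches a Dense(1) output layer
y_real = y[:, 0:1]
y_imag = y[:, 1:2]

print(y_real.shape, y_imag.shape)  # (3, 1) (3, 1)
```

The list passed to `fit` has to follow the order of the model's `outputs`, e.g. `model.fit(X, [y_real, y_imag], epochs=...)`. In tf.keras 2.x the resulting `History` should then hold the total `loss` plus one `<output layer name>_loss` entry per output.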

Dominik Ficek


Dominik, thanks for the advice. I already have a model with that topology, and it does give me separate losses as I want, but it is not suitable for chaining two models one after the other. For chaining models you need a single output layer with the same dimension as the next model's input layer. – igorek Jul 08 '21 at 09:06


You can concatenate the outputs afterwards, either with `numpy.concatenate` or, if you want an end-to-end model, with the `tf.keras.layers.Concatenate` layer. – Dominik Ficek Jul 08 '21 at 12:14
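For the `numpy.concatenate` route, the per-output predictions are joined after `predict`. A minimal sketch with made-up arrays standing in for the two outputs (assumed to arrive as a list of `(n_samples, 1)` arrays, real part first):

```python
import numpy as np

# Stand-ins for the list returned by predict() on a two-output model:
# one (n_samples, 1) array per output, real part first
pred_real = np.array([[0.1], [0.2], [0.3]])
pred_imag = np.array([[1.0], [2.0], [3.0]])

# Join along the last axis into the (n_samples, 2) layout that a
# downstream model with a 2-neuron input layer expects
combined = np.concatenate([pred_real, pred_imag], axis=-1)
print(combined.shape)  # (3, 2)
```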


But it still gives me only one loss value per epoch, since it is a single output layer. – igorek Jul 08 '21 at 14:50


You can keep them as output layers. – Dominik Ficek Jul 08 '21 at 15:00
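One way to read the two suggestions together: train with the two 1-neuron layers kept as outputs (so each gets its own loss), and build a second `tf.keras.Model` over the same layers whose output is the `Concatenate`d `(n, 2)` tensor used for chaining. This is a sketch under assumptions: the 8 input features, the random data, the layer names and the `next_model` downstream network are all hypothetical placeholders.

```python
import numpy as np
import tensorflow as tf

# Hypothetical toy data: 8 input features; targets with the real part
# in column 0 and the imaginary part in column 1
rng = np.random.default_rng(0)
X = rng.normal(size=(256, 8)).astype("float32")
y = rng.normal(size=(256, 2)).astype("float32")

inputs = tf.keras.layers.Input(shape=(8,))
h = tf.keras.layers.Dense(30)(inputs)                  # hidden layer
y_real = tf.keras.layers.Dense(1, name="real")(h)      # real-part head
y_imag = tf.keras.layers.Dense(1, name="imag")(h)      # imaginary-part head

# Training model: two separate outputs, so Keras tracks one loss per output
train_model = tf.keras.Model(inputs, [y_real, y_imag])
train_model.compile(optimizer="adam", loss=["mse", "mse"])
history = train_model.fit(X, [y[:, :1], y[:, 1:]],
                          epochs=3, batch_size=32, verbose=0)

# Chaining model: the same layer objects (shared weights), but a single
# concatenated (n, 2) output matching a 2-neuron input of the next model
concatenated = tf.keras.layers.Concatenate()([y_real, y_imag])
chain_model = tf.keras.Model(inputs, concatenated)

# Hypothetical downstream model that takes the 2-value signal as input
next_model = tf.keras.Sequential([
    tf.keras.layers.Input(shape=(2,)),
    tf.keras.layers.Dense(4),
])
end_to_end = tf.keras.Model(inputs, next_model(concatenated))

print(sorted(history.history))
```

Because `chain_model` and `end_to_end` reuse the trained layer objects, no weights have to be copied. In tf.keras 2.x, `history.history` should list `real_loss` and `imag_loss` next to the total `loss`; newer Keras 3 releases may report per-output losses differently.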