I have some fairly large CSV files (about 140 MB) and I'm trying to turn them into an array of structs.

I don't want to load the whole file into memory, so I'm using a stream reader.
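
For comparison with the `StreamReader` class used below, here is a minimal sketch of line-at-a-time reading built on the C `getline` function, which Foundation exposes on both macOS and Linux. The helper name `forEachLine` is hypothetical and is not part of `StreamReader`; the idea is the same, though: only one reusable line buffer is held in memory, never the whole file.

```swift
import Foundation

/// Hypothetical helper: calls `body` once per line of the file at `path`,
/// reusing a single C buffer so the whole file is never loaded at once.
/// Returns false if the file could not be opened.
@discardableResult
func forEachLine(path: String, _ body: (String) -> Void) -> Bool {
    guard let file = fopen(path, "r") else { return false }
    defer { fclose(file) }

    var linePtr: UnsafeMutablePointer<CChar>? = nil
    var capacity = 0
    // getline grows linePtr as needed; free it once when we are done.
    defer { free(linePtr) }

    while getline(&linePtr, &capacity, file) > 0, let cLine = linePtr {
        var line = String(cString: cLine)
        // Strip the trailing "\n" or "\r\n" (a single Character in Swift).
        while let last = line.last, last.isNewline { line.removeLast() }
        body(line)
    }
    return true
}
```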

For each line, I read the data, turn the line into my struct, and append the struct to the array. Because there are more than 5,000,000 lines in total, I used `reserveCapacity` to improve memory management:

```swift
var dataArray: [inputData] = []
dataArray.reserveCapacity(5_201_014)
```
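
For reference, a minimal sketch of what `reserveCapacity` does, assuming `inputData` is a struct with three `Float32` properties (as the initializer call in the code below suggests). Once enough capacity is reserved, appending up to that many elements does not reallocate the buffer. It only saves the intermediate copies made while the array grows; it does not reduce the memory the elements themselves need.

```swift
// Assumed shape of the question's element type (three Float32 fields).
struct inputData {
    var a: Float32
    var b: Float32
    var c: Float32
}

let expectedCount = 1_000  // stand-in for 5_201_014 to keep the sketch fast

var dataArray: [inputData] = []
dataArray.reserveCapacity(expectedCount)
let reservedCapacity = dataArray.capacity  // at least expectedCount

for i in 0..<expectedCount {
    let x = Float32(i)
    dataArray.append(inputData(a: x, b: x, c: x))
}

// The buffer was never reallocated, so the capacity is unchanged.
print(dataArray.count, dataArray.capacity == reservedCapacity)
```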

Unfortunately, that doesn't help at all; there is no performance difference. The memory graph in the debug session rises to 1.54 GB and then stays there. I'm wondering what I'm doing wrong, because I can't imagine that an array of structs built from a 140 MB file takes 1.54 GB of RAM.
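
A back-of-the-envelope check of that expectation, assuming `inputData` holds exactly three `Float32` values: each element then occupies 12 bytes in the array's buffer, so 5,201,014 elements should need roughly 62 MB. If that assumption holds, most of the 1.54 GB is presumably being spent somewhere other than the final array.

```swift
// Assumed layout of the question's element type.
struct inputData {
    var a: Float32
    var b: Float32
    var c: Float32
}

let rowCount = 5_201_014
// stride = bytes per element in an array, including any padding.
let bytesPerRow = MemoryLayout<inputData>.stride  // 12 for three Float32
let totalBytes = bytesPerRow * rowCount
print("\(totalBytes) bytes ≈ \(Double(totalBytes) / 1_000_000) MB")
```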

I use the following code to create the array:

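```swift
var dataArray: [inputData] = []
dataArray.reserveCapacity(5_201_014)

// StreamReader is a custom line-by-line reader; its initializer is failable.
guard let stream = StreamReader(path: "pathToDocument") else {
    fatalError("Could not open file")
}
defer { stream.close() }

while let line = stream.nextLine() {
    // Skip the header row (isHeader() is a custom String extension).
    if line.isHeader() { continue }

    let array = line.components(separatedBy: ",")
    dataArray.append(inputData(a: Float32(array[0])!, b: Float32(array[1])!, c: Float32(array[2])!))
}
```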
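
A variant of the per-line conversion that may be worth comparing: it splits with the standard library's `split(separator:)`, which returns `Substring` views into the line rather than new `String` copies, and it returns `nil` for malformed rows instead of crashing on the force unwraps. The helper name `parseLine` is hypothetical, `inputData` is again assumed to hold three `Float32` fields, and this sketch only removes some intermediate allocations; it does not by itself show where the 1.54 GB goes.

```swift
// Assumed shape of the question's element type (three Float32 fields).
struct inputData {
    var a: Float32
    var b: Float32
    var c: Float32
}

/// Hypothetical helper: parses "a,b,c" into an inputData,
/// returning nil if the row has too few fields or a non-numeric value.
func parseLine(_ line: String) -> inputData? {
    // Substrings share the line's storage; no per-field String is created.
    let fields = line.split(separator: ",", omittingEmptySubsequences: false)
    guard fields.count >= 3,
          let a = Float32(fields[0]),
          let b = Float32(fields[1]),
          let c = Float32(fields[2]) else {
        return nil
    }
    return inputData(a: a, b: b, c: c)
}
```

Inside the loop, the `append` call would then become `if let row = parseLine(line) { dataArray.append(row) }`.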

I know that there are several CSV reader packages on GitHub, but they are extremely slow.

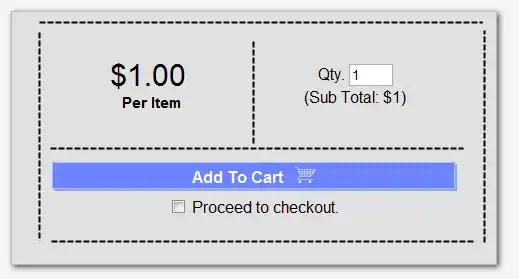

Here a screenshot of the debug session:

Thanks for any advice.