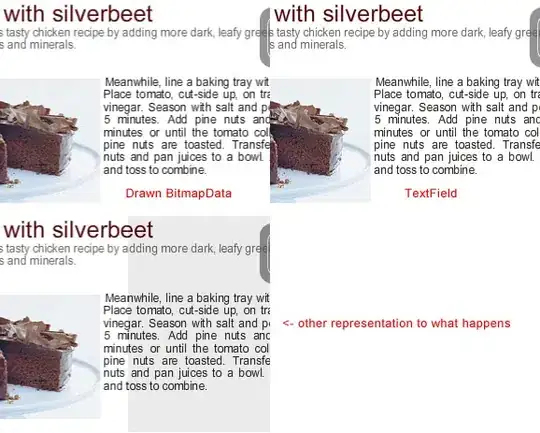

I am trying to read documents from various sources with Python, using OpenCV and Tesseract. To improve Tesseract's results, I do some preprocessing, but sadly the documents also vary a lot in quality. My current issue is documents that are only partially blurry or shaded due to bad scans.

I have no influence on the document quality, and manual feature detection is not feasible, because the code should eventually run over hundreds of thousands of documents, and the quality can vary strongly even within a single document.

To get rid of the shadow, I found a technique that dilates and blurs the image and then divides the original by the blurred, dilated version.

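    import cv2
    import numpy as np

    # img is a single-channel (grayscale) uint8 image
    h, w = img.shape
    kernel = np.ones((7, 7), np.uint8)
    # Dilation wipes out the dark text, leaving an estimate of the background
    dilation = cv2.dilate(img, kernel, iterations=1)
    blurred_dilation = cv2.GaussianBlur(dilation, (13, 13), 0)
    resized = cv2.resize(blurred_dilation, (w, h))
    # cv2.divide keeps the result as uint8, saturated to 0..255
    corrected = cv2.divide(img, resized, scale=255)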

That works very well.

But the blur is still there, and visually the text has become harder to read. I'd like to binarise the image next, but then nothing usable would be left of the blurred parts.

I found an example of a deconvolution that works for motion blur, but I can only apply it to the whole image, which blurs the rest of the text, and it requires knowing the direction of the motion blur. So I hope to get some help on how to optimize this kind of image so that Tesseract can read it properly.

I know that further optimizations are needed besides sharpening the blurred text, such as deskewing and removing the fragments of other pages. I am not sure in which order these additional steps should be performed.

I can hardly find any sources or tutorials on optimizing plain documents for OCR. The procedures I find are often applied globally to the whole image or are meant for non-OCR applications.