Have to ingest a file with new column into a existing table structure.

create table sch.test (

name string ,

address string

) USING DELTA

--OPTIONS ('mergeSchema' 'true')

PARTITIONED BY (name)

LOCATION '/mnt/loc/fold'

TBLPROPERTIES (delta.autoOptimize.optimizeWrite = true, delta.autoOptimize.autoCompact = true);

Code to read the file: val df = spark.read .format("com.databricks.spark.csv") .option("header", "true") .load("/mnt/loc/fold")

display(df)

File in path contains below data

name,address

raghu,india

raj,usa

On writing it to a table,

import org.apache.spark.sql.functions._

df.withColumn("az_insert_ts", current_timestamp())

.withColumn("exec_run_id",lit("233"))

.withColumn("az_inp_file_name",lit("24234filename"))

.coalesce(12)

.write

.mode("append")

.option("mergeSchema", "true")

.format("delta")

.saveAsTable("sch.test")

display(spark.read.table("sch.test"))

Adding a new column,

name,address,age

raghu,india,12

raj,usa,13

Read the file,

val df = spark.read

.format("com.databricks.spark.csv")

.option("header", "true")

.load("/mnt/loc/fold")

display(df)

While writing into the table using insertInto,

import org.apache.spark.sql.functions._

df.withColumn("az_insert_ts", current_timestamp())

.withColumn("exec_run_id",lit("233"))

.withColumn("az_inp_file_name",lit("24234filename"))

.coalesce(12)

.write

.mode("append")

.option("mergeSchema", "true")

.format("delta")

.insertInto("sch.test")

display(spark.read.table("sch.test"))

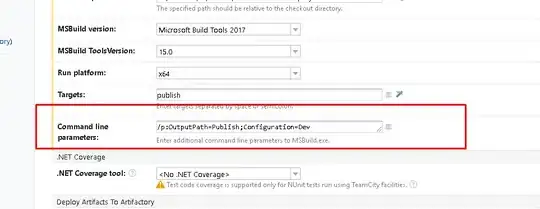

Getting the below error,