sklearn's train_test_split , StratifiedShuffleSplit and StratifiedKFold all stratify based on class labels (y-variable or target_column). What if we want to sample based on features columns (x-variables) and not on target column. If it was just one feature it would be easy to stratify based on that single column, but what if there are many feature columns and we want to preserve the population's proportions in the selected sample?

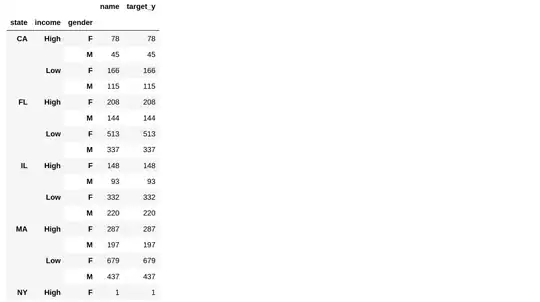

Below I created a df which has skewed population with more people of low income, more females, least people from CA and most people from MA. I want the selected sample to have these characteristics i.e. more people of low income, more females, least people from CA and most people from MA

import random

import string

import pandas as pd

N = 20000 # Total rows in data

names = [''.join(random.choices(string.ascii_uppercase, k = 5)) for _ in range(N)]

incomes = [random.choices(['High','Low'], weights=(30, 70))[0] for _ in range(N)]

genders = [random.choices(['M','F'], weights=(40, 60))[0] for _ in range(N)]

states = [random.choices(['CA','IL','FL','MA'], weights=(10,20,30,40))[0] for _ in range(N)]

targets_y= [random.choice([0,1]) for _ in range(N)]

df = pd.DataFrame(dict(

name = names,

income = incomes,

gender = genders,

state = states,

target_y = targets_y

))

One more complexity arises when for some of the characteristics, we have very few examples and we want to include atleast n examples in selected sample. Consider this example:

single_row = {'name' : 'ABC',

'income' : 'High',

'gender' : 'F',

'state' : 'NY',

'target_y' : 1}

df = df.append(single_row, ignore_index=True)

df

.

I want this single added row to be always included in test-split (n=1 here).