I have a list of lists (sub-lists) where each sub-list is a set of characters. The list came from a text fie of data with consecutive sample data aligned vertically. Here is a sample:

""

"Test Method","Generic Stuff.msm"

"Sample I. D.","sed do eiusmod tempor incididunt ut labore et.mss"

"Specimen Number","1"

"Load (lbf)","Time (s)","Extension (in)"

48.081,3.150,0.000

77.307,3.200,0.000

98.159,3.250,0.000

53.256,3.300,0.000

42.476,3.350,0.000

67.080,3.400,0.000

17.786,3.450,0.000

82.600,3.500,0.001

50.644,3.550,0.001

97.122,3.600,0.001

/n

/n

All samples are separated with a double quotation mark and end with a couple new lines.

Pandas does a great job of allowing me to convert those characters into floats or leave them as strings. So, I decided to put the sublists through a for-loop, adjust where the data actually starts (the same headers exist for each specimen), and try to add each sample to its own SHEET in a single Excel WORKBOOK.

Here is the code:

source_file = r'input.txt'

base = os.path.splitext(source_file)[0]

excel_file = base + ".xlsx"

with open(excel_file, 'w') as fp:

workbook = openpyxl.Workbook()

initial_work_sheet = workbook.active

initial_work_sheet.title = 'Create WorkBook'

initial_work_sheet['A1'] = "Do With This Sheet As You Please"

workbook.save(excel_file)

with open(source_file, 'r') as file:

data = file.read().split('""')

data = [i.split('\n') for i in data]

data.remove([''])

for i in np.arange(len(data)):

data[i] = list(filter(None, data[i]))

source_WB = openpyxl.load_workbook(excel_file)

for sub_data in data:

sub_data = [s.split(',') for s in sub_data][2:]

df = pd.DataFrame(sub_data[2:], columns=sub_data[1])

df['"Load (lbf)"'] = df['"Load (lbf)"'].astype(float)

df['"Time (s)"'] = df['"Time (s)"'].astype(float)

df['"Extension (in)"'] = df['"Extension (in)"'].astype(float)

source_WB.create_sheet(' '.join(sub_data[0]))

print("Writing ", ' '.join(sub_data[0]))

if ' '.join(sub_data[0]) in source_WB.sheetnames:

ws = source_WB[' '.join(sub_data[0])]

else:

ws = source_WB.active

for r in dataframe_to_rows(df, index=False, header=True):

ws.append(r)

source_WB.save(excel_file)

Initially, I tried to load the entire workbook every time it looped, but that prove to consume too much memory. I then ended with optimized modes of openpyxl, but even then it slows down significantly. The "data" list is about 30,000+ lines long with 3 columns.

Using the optimized mode of openpyxl, I was hoping to copy data from a "read_only" excel file to a "write_only" excel file as recommedend in the docs. I then save the "write_only" excel file over the "read_only" file, which acts as the source for the next loop around.

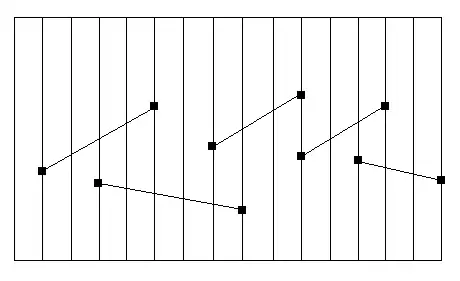

Aside from the significant slow down after the tenth sample, or so, all data is aligned in a single column for all samples except the last, which has all the data in 3 columns, as intended.

I've looked as much as I know how to ask search engines, but still can't find a good fit for what I am doing.

I have a lot of data, I need to get it into excel, how do I do that quickly? Thanks.