I have installed MySQL 8.0.25 on top of Ubuntu 20.04, running on the C5.2xlarge instance.

Then I ran a script that fills 10 tables with data. The test took exactly 2 hours, during which is has created 123146.5MB of data:

That means that on average, 17.1MB/s were written to the database.

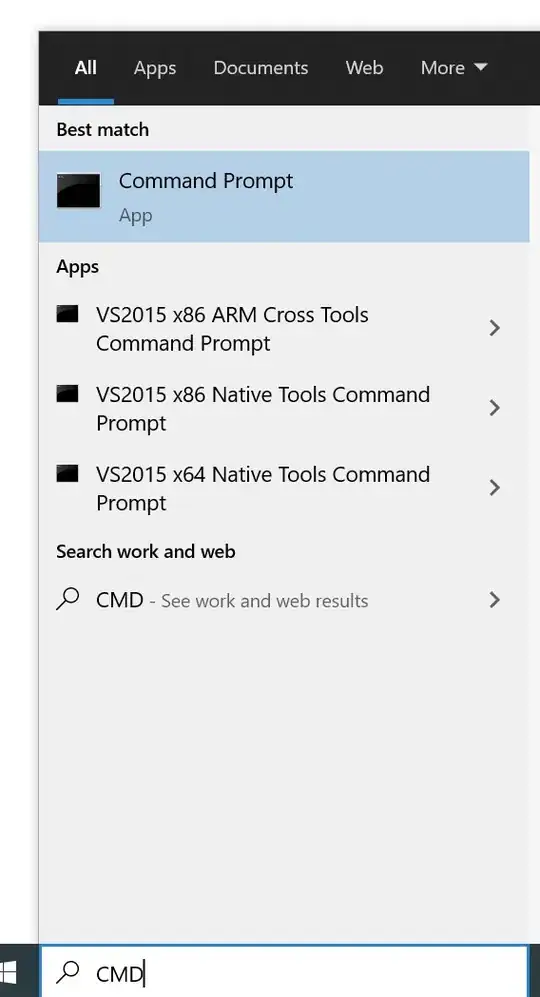

However, atop is reporting something weird: while it shows disk activity around 18-19MB/s, it also shows that the process mysqld wrote 864MB in the 10 second sample - which translates to 86.4MB/s, about 5 times as much as the amount of data actually committed to the database:

Why such disrepancy?

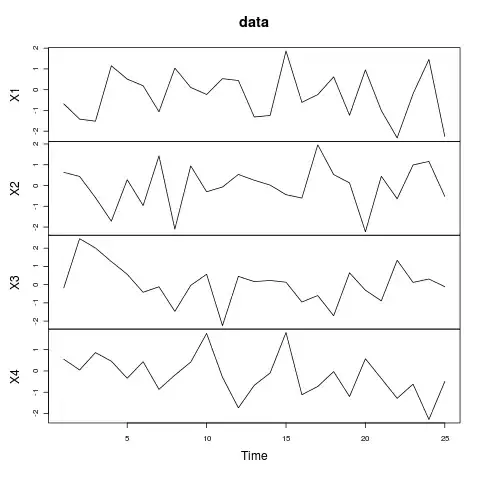

iotop is also typically showing that MySQL is writing 5x:

I also tried to use pt-diskstats from the Percona toolkit, but it didn't show anything...

I also reproduced the issue on RDS. In both cases (EC2 and RDS), the Cloudwatch statistics also show 5x writes...

The database has 10 tables that were filled. 5 of them have this definition:

CREATE TABLE `shark` (

`height` int DEFAULT NULL,

`weight` int DEFAULT NULL,

`name` mediumtext,

`shark_id` bigint NOT NULL,

`owner_id` bigint DEFAULT NULL,

PRIMARY KEY (`shark_id`),

KEY `owner_id` (`owner_id`),

CONSTRAINT `shark_ibfk_1` FOREIGN KEY (`owner_id`) REFERENCES `shark_owners` (`owner_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci

another 5 tables have this definition:

CREATE TABLE `shark_owners` (

`name` mediumtext,

`owner_id` bigint NOT NULL,

PRIMARY KEY (`owner_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci

I could understand if the difference was about 2x - data is first written to a transaction log, and then it is committed to the database, but 5x? Is this a normal behavior for MySQL, or is something in my tables triggering this? And why are there so many "cancelled writes" - about 12% ?