Try it this way.

# import necessary modules

import pandas as pd, numpy as np, matplotlib.pyplot as plt, time

from sklearn.cluster import DBSCAN

from sklearn import metrics

from geopy.distance import great_circle

from shapely.geometry import MultiPoint

# define the number of kilometers in one radian

kms_per_radian = 6371.0088

# load the data set

df = pd.read_csv('C:\\travel-gps-full.csv', encoding = "ISO-8859-1")

df.head()

# how many rows are in this data set?

len(df)

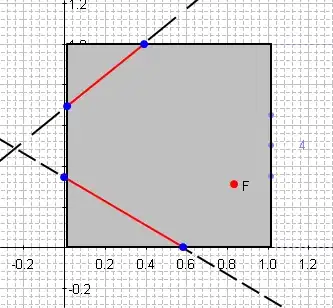

# scatterplot it to get a sense of what it looks like

df = df.sort_values(by=['lat', 'lon'])

ax = df.plot(kind='scatter', x='lon', y='lat', alpha=0.5, linewidth=0)

# represent points consistently as (lat, lon)

# coords = df.as_matrix(columns=['lat', 'lon'])

df_coords = df[['lat', 'lon']]

# coords = df.to_numpy(df_coords)

# define epsilon as 10 kilometers, converted to radians for use by haversine

epsilon = 10 / kms_per_radian

start_time = time.time()

db = DBSCAN(eps=epsilon, min_samples=10, algorithm='ball_tree', metric='haversine').fit(np.radians(df_coords))

cluster_labels = db.labels_

unique_labels = set(cluster_labels)

# get the number of clusters

num_clusters = len(set(cluster_labels))

# get colors and plot all the points, color-coded by cluster (or gray if not in any cluster, aka noise)

fig, ax = plt.subplots()

colors = plt.cm.rainbow(np.linspace(0, 1, len(unique_labels)))

# for each cluster label and color, plot the cluster's points

for cluster_label, color in zip(unique_labels, colors):

size = 150

if cluster_label == -1: #make the noise (which is labeled -1) appear as smaller gray points

color = 'gray'

size = 30

# plot the points that match the current cluster label

# X.iloc[:-1]

# df.iloc[:, 0]

x_coords = df_coords.iloc[:, 0]

y_coords = df_coords.iloc[:, 1]

ax.scatter(x=x_coords, y=y_coords, c=color, edgecolor='k', s=size, alpha=0.5)

ax.set_title('Number of clusters: {}'.format(num_clusters))

plt.show()

coefficient = metrics.silhouette_score(df_coords, cluster_labels)

print('Silhouette coefficient: {:0.03f}'.format(metrics.silhouette_score(df_coords, cluster_labels)))

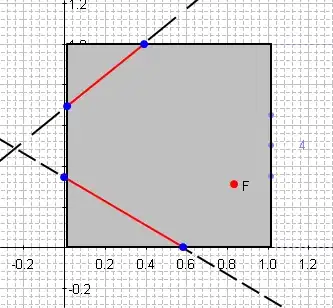

# set eps low (1.5km) so clusters are only formed by very close points

epsilon = 1.5 / kms_per_radian

# set min_samples to 1 so we get no noise - every point will be in a cluster even if it's a cluster of 1

start_time = time.time()

db = DBSCAN(eps=epsilon, min_samples=1, algorithm='ball_tree', metric='haversine').fit(np.radians(df_coords))

cluster_labels = db.labels_

unique_labels = set(cluster_labels)

# get the number of clusters

num_clusters = len(set(cluster_labels))

# all done, print the outcome

message = 'Clustered {:,} points down to {:,} clusters, for {:.1f}% compression in {:,.2f} seconds'

print(message.format(len(df), num_clusters, 100*(1 - float(num_clusters) / len(df)), time.time()-start_time))

# Result:

Silhouette coefficient: 0.854

Clustered 1,759 points down to 138 clusters, for 92.2% compression in 0.17 seconds

coefficient = metrics.silhouette_score(df_coords, cluster_labels)

print('Silhouette coefficient: {:0.03f}'.format(metrics.silhouette_score(df_coords, cluster_labels)))

# number of clusters, ignoring noise if present

num_clusters = len(set(cluster_labels)) #- (1 if -1 in labels else 0)

print('Number of clusters: {}'.format(num_clusters))

# Result:

Number of clusters: 138

# create a series to contain the clusters - each element in the series is the points that compose each cluster

clusters = pd.Series([df_coords[cluster_labels == n] for n in range(num_clusters)])

clusters.tail()

data:

https://github.com/gboeing/2014-summer-travels/tree/master/data

sample code:

https://geoffboeing.com/2014/08/clustering-to-reduce-spatial-data-set-size/