I have to read 10 files from a folder in a blob container. The files have different schemas (most of the columns match across files), and I need to merge them into a single SQL table.

file 1: let's say there are 25 such columns

file 2: some of the columns in file 2 match columns in file 1

file 3:

output: a SQL table

How do I set up a pipeline in Azure Data Factory to merge these columns into a single SQL table?

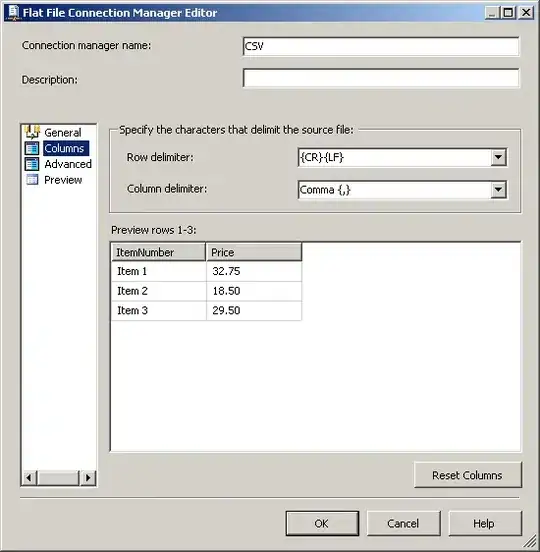

My approach: Get Metadata activity ---> ForEach over childItems ---> Copy activity

For the mapping, I constructed a JSON that contains all the source/sink columns from these files.
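
To make the mapping idea concrete, here is a minimal sketch of how a per-file mapping could be derived from one master source/sink dictionary. It assumes the Copy activity is given a `TabularTranslator` object as its mapping; the column names, `MASTER_MAPPING`, and `build_translator` are hypothetical and only for illustration.

```python
import json

# Hypothetical master mapping: every source column seen in any file -> sink column.
MASTER_MAPPING = {
    "cust_id": "CustomerId",
    "name": "CustomerName",
    "amt": "Amount",
}


def build_translator(file_columns, master=MASTER_MAPPING):
    """Build a TabularTranslator dict limited to the columns one file actually has."""
    mappings = [
        {"source": {"name": col}, "sink": {"name": master[col]}}
        for col in file_columns
        if col in master  # columns without a known sink column are skipped
    ]
    return {"type": "TabularTranslator", "mappings": mappings}


# Example: a file that has only two of the known columns plus one unknown column.
translator = build_translator(["cust_id", "amt", "unmapped_col"])
print(json.dumps(translator, indent=2))
```

The resulting JSON would then be supplied as dynamic content to the Copy activity's mapping inside the ForEach loop. Sink columns that a given file does not map would need to be nullable or have defaults in the SQL table.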