Question:

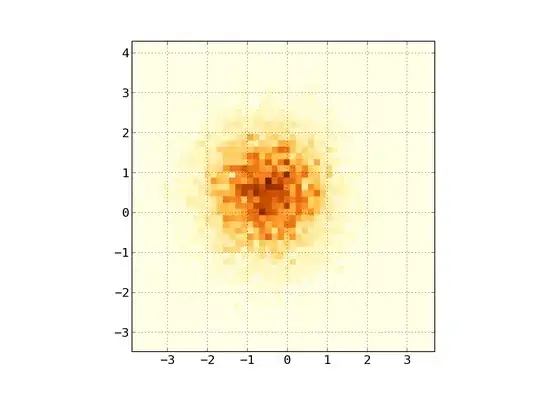

I am trying to do spectrum analysis on long time series data (see example for data structure, it is basically 1d data with a time index). To save time and memory etc. I want to do this parallel and lazily (using xarray and / or dask).

What would be the best (or a better) way to do this?

What I tried:

(see below for examples & code)

using scipy.signal.stft with xr.apply_ufunc:

Problem: ValueError, only works if input data is 1 chunk, which does not work with large data.using using scipy.signal.stft with dask.array.from_delayed:

Problem: Output data is always 1 chunk which makes it hard to further work with the data. (rechunking afterwards overloads RAM)Intermediate (lazily) 2d transformation using xr.Dataset.rolling.construct. Here 1 dimension is time and the rows are the short time windows over which I do the fft ("rolling window").

with data: [1, 2, 3, 4, 5] and a rolling window of 3 this would become:

time index rolling window 00:00:00 NaN, NaN, 1 00:00:01 NaN, 1, 2 00:00:02 1, 2, 3 00:00:03 2, 3, 4 00:00:04 3, 4, 5 Then using xr.apply ufunc to calculate the fft over each time point row (vectorized) over this new array, also lazily (see example below for specifics). This works and because it is lazily computed it works with big data sets too.

Issues:

- seems slower than scipy.signal.stft (see benchmarks below)

- overlap/stepsize can not be changed (as with noverlap in scipy stft).

- is the intermediate step really needed or is there a way to immediately calculate the rolling window fft?

- Still overloads RAM in some cases

Other: I also tried other methods such as optimizing the function with numba (but that doesn't work well with np.fft.fft) but to keep this already long post short I only included the most promising methods I tried.

What can I do to do this, and / or increase performance of method 3 or use method 1 or 2, or something else to lazily and parallel using xarray / Dask?

Thanks!

Code / Examples:

imports & test dataset:

import xarray as xr

import dask.array as da

import dask

import numpy as np

from scipy import signal as ss

# variables

fs= 128 # rec frequency

_l = int(1e3) # data length

window = fs * 10 # window over which to do stft

# Create data

data = np.random.random(_l * fs)

data = xr.DataArray(da.from_array(data, name="x")).assign_coords({"dim_0":np.arange(_l * fs)})

data = data.chunk(chunks=_l/10 * fs) # create multiple chunks to simulate bigger data

data = data.to_dataset()

Method 1:

def stft(data,):

f, t, Zxx = ss.stft(data,

fs=fs,

nperseg=window,

noverlap= window - 1

)

return np.abs(Zxx)

data['fft'] = xr.apply_ufunc(stft,

data.x,

input_core_dims=[['dim_0']],

output_core_dims=[["f", "t"]], # define newly created output dims

dask="parallelized",

output_dtypes=['f8'],

output_sizes={"f": window / 2 + 1,

"t": data.x.size - 1},

)

output:

ValueError: dimension 'dim_0' on 0th function argument to apply_ufunc with dask='parallelized' consists of multiple chunks, but is also a core dimension. To fix, rechunk into a single dask array chunk along this dimension, i.e., ``.chunk({'dim_0': -1})``, but beware that this may significantly increase memory usage.

Method 2:

def stft1(x):

f, t, Zxx = ss.stft(x,

fs=fs,

nperseg=window,

noverlap=window - 1

)

return np.abs(Zxx)

da.from_delayed(dask.delayed(stft1)(data.x), shape=(window / 2 + 1, data.x.size - 1), meta="f8")

Method 3: (working but not optimal?)

def xr_make_fft(ds, par):

ds['roll_window'] = np.arange(window) # create dimension for rolling window

# FFT function to apply vectorized:

def xr_fft(x):

fft = np.fft.fft(x)[xr_fft.idx]

return np.abs(fft)

# make new parameter with rolling windows stacked

ds['FFT_window'] = ds[par].rolling(dim_0=window).construct("roll_window",)

# Calc FFT Freq domain

fftfreq = np.fft.fftfreq(window, 1 / fs)

idx = (0 < fftfreq)

freq = fftfreq[idx]

ds['Frequency'] = freq

xr_fft.idx = idx

ds[f'FFT'] = xr.apply_ufunc(xr_fft,

ds[f"FFT_window"],

vectorize=True,

input_core_dims=[["roll_window"]], # define input dim over which to vectorize (this dim in inserted completely)

output_core_dims=[["Frequency"]], # define newly created output dims

dask="parallelized",

output_dtypes=['f8'],

output_sizes={"Frequency": len(freq)},

)

ds = ds.drop(f'FFT_window').drop_dims('roll_window')

return ds

data = xr_make_fft(data, "x")

performance test on small dataset: 128e3 length data (largest dataset which did not overload RAM):

Method 2:

%timeit da.from_delayed(dask.delayed(stft1)(data.x), shape=(window / 2 + 1, data.x.size - 1), meta="f8").compute()

3.53 s ± 28.8 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

Method 3:

%timeit data.FFT.compute()

6.04 s ± 219 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

scipy.signal.stft

%timeit ss.stft(data.x, fs, nperseg=window, noverlap=window-1)[2]

1.87 s ± 8.28 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)