This sounds like an easy task, but I have already spent hours on it. There are several posts with a similar headline, so let me describe my problem first.

I have H.264-encoded video files that show recordings of colonoscopies/gastroscopies.

During the examination, the examiner can take a kind of screenshot. You can see this in the video because the image stops moving for roughly one second, so a number of consecutive frames show the "same" picture. I'd like to know when those screenshots were taken.

So first I extracted the frames from the video:

    ffmpeg -hwaccel cuvid -c:v h264_cuvid -i '/myVideo.mkv' -vf "hwdownload,format=nv12" -start_number 0 -vsync vfr -q:v 1 '/myFrames/%05d.jpg'

This works just fine, and the result is a folder with all the frames in high quality. The idea now is to compare image x with image x+1 (or x+y) and check whether they are the "same"; if so, a screenshot was taken.
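Because a screenshot freezes the picture for roughly one second, a single matching pair is weaker evidence than a whole run of matching pairs. The following is only a minimal sketch of that grouping step, using nothing but the standard library. The function name `findStillRuns`, the `scores` vector (assumed to hold one difference value per pair of neighbouring frames, however it is computed), the tolerance `tol` and the minimum run length `minRun` are all hypothetical and not part of my actual code.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical helper: scores[k] is assumed to be some difference measure
// between frame k and frame k+1 (smaller means more similar).
// Returns the [first, last] score indices of every run in which at least
// minRun consecutive scores are <= tol.
std::vector<std::pair<std::size_t, std::size_t>>
findStillRuns(const std::vector<double>& scores, double tol, std::size_t minRun) {
    std::vector<std::pair<std::size_t, std::size_t>> runs;
    std::size_t runStart = 0;
    std::size_t runLen = 0;
    for (std::size_t k = 0; k < scores.size(); ++k) {
        if (scores[k] <= tol) {
            if (runLen == 0) runStart = k;  // a new run begins here
            ++runLen;
        } else {
            // the run (if any) ended at k - 1; keep it only if long enough
            if (runLen > 0 && runLen >= minRun) runs.emplace_back(runStart, k - 1);
            runLen = 0;
        }
    }
    // a run may still be open at the end of the sequence
    if (runLen > 0 && runLen >= minRun) runs.emplace_back(runStart, scores.size() - 1);
    return runs;
}
```

At an assumed frame rate of 25 fps, a freeze of about one second would correspond to a `minRun` of roughly 20 to 25. A single noisy pair in the middle of a freeze would split the run, so this sketch does not remove the need for a robust per-pair measure.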

When I look at those images, they look exactly the same to me. I cannot tell the difference, but the computer can.

Since those images have been compressed/encoded, they are lossy. I guess that, depending on where the key frames fall in the video encoding, the difference between those "identical" images is sometimes 0 and sometimes "huge". So much for the problem; time for a little code:

    // init mPrev with the last image in the list
    cv::Mat mPrev = cv::imread(imagePaths[imagePaths.size() - 1])(*rect).clone();
    cv::cvtColor(mPrev, mPrev, cv::COLOR_BGR2GRAY);

    // remove smaller noise
    cv::medianBlur(mPrev, mPrev, 5);

    // create a binary image: only light reflections (landmarks) remain, everything else is too dark
    mPrev.setTo(0, mPrev < max);
    mPrev.setTo(255, mPrev >= max);

    cv::Mat diff;
    std::vector<int> screenShotVec;

    for (int k = start; k < end; k++) {
        cv::Mat mat = cv::imread(imagePaths[k])(*rect).clone();
        cv::cvtColor(mat, mat, cv::COLOR_BGR2GRAY);

        // remove smaller noise
        cv::medianBlur(mat, mat, medSize);

        // create a binary image: only light reflections (landmarks) remain, everything else is too dark
        mat.setTo(0, mat < max);
        mat.setTo(255, mat >= max);

        double d = cv::sum(mat)[0];

        // a totally black image is not a screenshot, since parts of interest always have light reflections
        if (d > 0) {
            // get the difference of the binary images
            cv::absdiff(mPrev, mat, diff);

            // differences should be very small and easy to remove with a median blur
            cv::medianBlur(diff, diff, 9);
            d = cv::sum(diff)[0];

            // no difference, so it is a screenshot
            if (d == 0) {
                screenShotVec.push_back(k);
            }
        }

        // clone the mat for the next round
        mPrev = mat.clone();
    }
    } // closes the enclosing scope, whose opening is not shown here

This is the code that has worked best so far, but it is not very stable. I have videos from many different endoscope processors, cameras and grabbers.

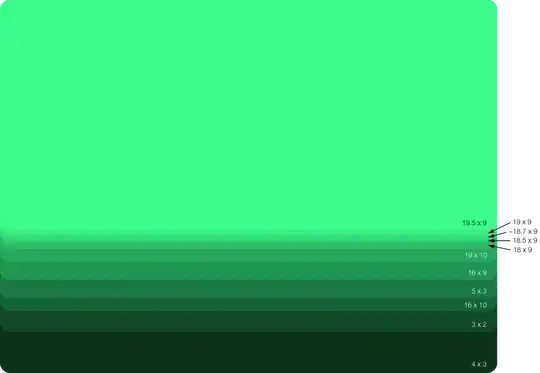

So I have to adjust it every time. For example, this is the result if I "cv::subtract" two pseudo-identical frames:

while this is the result if I "cv::subtract" two frames with very small movement of the camera:

Most of the time the camera is moving very fast, and since I compare frame x with frame x+y (y >= 5), the differences are obvious. The problem is when the camera is not moving fast. In addition to cv::subtract, I tried several kernel sizes for the median blur, I tried detecting only edges with Canny and comparing those, and I tried the cv::norm function.
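To make "pseudo-identical" a bit more concrete, here is a minimal sketch of the kind of measure I have in mind: it ignores small per-pixel deviations (such as compression noise) and reports the fraction of pixels that changed by more than a tolerance, so the result does not depend on the image size. It works on plain grey-value buffers and uses only the standard library. The name `changedFraction` and the parameter `pixelTol` are hypothetical, and I am not claiming that this separates frozen frames from slow camera movement.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Hypothetical helper: a and b are assumed to be two greyscale frames of the
// same size, stored as flat 8-bit buffers. Returns the fraction (0.0 to 1.0)
// of pixels whose grey values differ by more than pixelTol.
double changedFraction(const std::vector<std::uint8_t>& a,
                       const std::vector<std::uint8_t>& b,
                       int pixelTol) {
    assert(a.size() == b.size());  // both frames must have the same pixel count
    if (a.empty()) return 0.0;
    std::size_t changed = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        // widen to int so the subtraction cannot wrap around
        const int d = std::abs(static_cast<int>(a[i]) - static_cast<int>(b[i]));
        if (d > pixelTol) ++changed;
    }
    return static_cast<double>(changed) / static_cast<double>(a.size());
}
```

With cv::Mat input, the same idea could presumably be written with cv::absdiff, cv::threshold and cv::countNonZero. Choosing `pixelTol` and the acceptable fraction would still have to be done per device, which is exactly the adjustment I would like to avoid.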

Does anyone have a recommendation for how I can transform or measure those pseudo-identical frames so that they become identical, while frames that show real changes remain distinguishable?

P.S. I am sorry I can't post the real images, since this is medical data.