I'm creating a dataframe like so:

concatdatafile = pd.concat(datafile, axis=0, ignore_index=True, sort=False)

Then I check the dtypes of some of the fields before publishing:

logger.info(" *** concatdatafile['FS Seal Time (sec)'].dtypes={}".format(concatdatafile['FS Seal Time (sec)'].dtypes))

logger.info(" *** concatdatafile['FS Cool Time (sec)'].dtypes={}".format(concatdatafile['FS Cool Time (sec)'].dtypes))
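A further check that might help here is a minimal sketch, not part of the original code: `.dtypes` only reports the pandas dtype, and an `object` column can hold floats, strings, or a mix. This may matter if the writer infers the Glue type from the values rather than from the pandas dtype. The `sample` frame and the `describe_column` helper are hypothetical stand-ins with made-up values.

```python
import pandas as pd

# Hypothetical stand-in for concatdatafile; the values are invented for illustration.
sample = pd.DataFrame(
    {
        "FS Seal Time (sec)": pd.Series([1.5, 2.0, 3.25], dtype="object"),
        "FS Cool Time (sec)": pd.Series(["4.0", "5.5", "n/a"], dtype="object"),
    }
)


def describe_column(df, name):
    """Return the pandas dtype and the sorted Python value types found in a column."""
    column = df[name]
    # Collect the type name of every non-null value in the column.
    value_types = sorted({type(v).__name__ for v in column.dropna()})
    return str(column.dtype), value_types


for name in sample.columns:
    print(name, describe_column(sample, name))
```

Both columns report the same pandas dtype, but the value types inside them differ, which the dtype check alone would not show.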

The next statement I have is a write:

response_wr = wr.s3.to_parquet(df=concatdatafile, path=s3_outputpath + 'full_data/', dataset=True,
                               partition_cols=["MachineId", "year_num", "month_num", "day_num"], database='myDB',
                               table='myDBTable', mode='append')

When I run this code in Glue, I get:

(Note: I cleared out the Glue table definition before running, so it would have fresh metadata.)

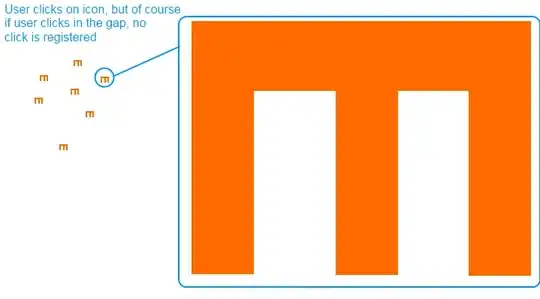

But in the Glue table, I'm seeing the fields change types like so:

Question: Why is it not respecting the data types I'm publishing? It sees that the data looks like doubles (for now), but that's irrelevant. Later data will be strings, so I don't want it to override the types I'm sending.