The project: for a list of meta-data of wordpress-plugins: - approx 50 plugins are of interest! but the challenge is: i want to fetch meta-data of all the existing plugins. What i subsequently want to filter out after the fetch is - those plugins that have the newest timestamp - that are updated (most) recently. It is all aobut acutality... so the base-url to start is this:

url = "https://wordpress.org/plugins/browse/popular/

aim: i want to fetch all the metadata of the plugins that we find on the first 50 pages of the popular-plugins.... for example...:

https://wordpress.org/plugins/wp-job-manager

https://wordpress.org/plugins/ninja-forms

https://wordpress.org/plugins/participants-database ....and so on and so forth.

here we go:

import requests

from bs4 import BeautifulSoup

from concurrent.futures.thread import ThreadPoolExecutor

url = "https://wordpress.org/plugins/browse/popular/{}"

def main(url, num):

with requests.Session() as req:

print(f"Collecting Page# {num}")

r = req.get(url.format(num))

soup = BeautifulSoup(r.content, 'html.parser')

link = [item.get("href")

for item in soup.findAll("a", rel="bookmark")]

return set(link)

with ThreadPoolExecutor(max_workers=20) as executor:

futures = [executor.submit(main, url, num)

for num in [""]+[f"page/{x}/" for x in range(2, 50)]]

allin = []

for future in futures:

allin.extend(future.result())

def parser(url):

with requests.Session() as req:

print(f"Extracting {url}")

r = req.get(url)

soup = BeautifulSoup(r.content, 'html.parser')

target = [item.get_text(strip=True, separator=" ") for item in soup.find(

"h3", class_="screen-reader-text").find_next("ul").findAll("li")[:8]]

head = [soup.find("h1", class_="plugin-title").text]

new = [x for x in target if x.startswith(

("V", "Las", "Ac", "W", "T", "P"))]

return head + new

with ThreadPoolExecutor(max_workers=50) as executor1:

futures1 = [executor1.submit(parser, url) for url in allin]

for future in futures1:

print(future.result())

that runs like so - but gives back some errors..(see below)

Extracting https://wordpress.org/plugins/use-google-libraries/

Extracting https://wordpress.org/plugins/blocksy-companion/

Extracting https://wordpress.org/plugins/cherry-sidebars/

Extracting https://wordpress.org/plugins/accesspress-social-share/Extracting https://wordpress.org/plugins/goodbye-captcha/

Extracting https://wordpress.org/plugins/wp-whatsapp/

here the traceback of the errors:

Some characters could not be decoded, and were replaced with REPLACEMENT CHARACTER.

Traceback (most recent call last):

File "C:\Users\rob\.spyder-py3\dev\untitled0.py", line 51, in <module>

print(future.result())

File "C:\Users\rob\devel\IDE\lib\concurrent\futures\_base.py", line 432, in result

return self.__get_result()

File "C:\Users\rob\devel\IDE\lib\concurrent\futures\_base.py", line 388, in __get_result

raise self._exception

File "C:\Users\rob\devel\IDE\lib\concurrent\futures\thread.py", line 57, in run

result = self.fn(*self.args, **self.kwargs)

File "C:\Users\rob\.spyder-py3\dev\untitled0.py", line 39, in parser

target = [item.get_text(strip=True, separator=" ") for item in soup.find(

AttributeError: 'NoneType' object has no attribute 'find_next'

Update: as mentioned above i am getting this AttributeError which says that NoneType has no attribute find_next. Below is the line that's giving the nasty problems.

target = [item.get_text(strip=True, separator=" ") for item in soup.find("h3", class_="screen-reader-text").find_next("ul").findAll("li")]

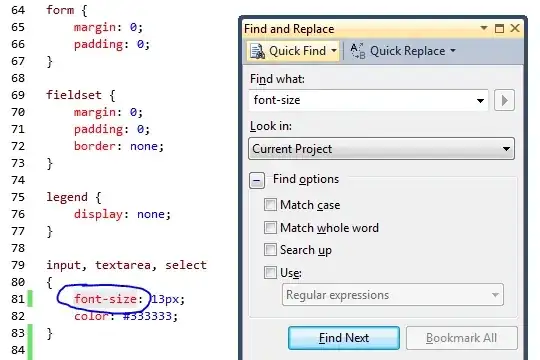

Specifically, the issue is in the soup.find() method, which can return either a Tag (when it finds something), which has a .find_next() method (i.e. attribute) or None (when it doesn't find anything), which doesn't. We can try extracting this whole call to its own variable, which we can then test.

tag = soup.find("h3", class_="screen-reader-text")

target = []

if tag:

lis = tag.find_next("ul").findAll("li")

target = [item.get_text(strip=True, separator=" ") for item in lis[:8]]

btw; we can use CSS selectors instead to get this running:

target = [item.get_text(strip=True, separator=" ") for item in soup.select("h3.screen-reader-text + ul li")[:8]]

This gets "all li anywhere under ul that's right next to h3 with the screen-reader-text class". If we want li directly under ul (which they would usually be anyway, but that's not always the case for other elements), we could use ul > li instead (the > means "direct child").

note: the best thing would be to dump all the results into a csv-file or - to print it out on screen.

look forward to hear from you