I am using Spark NLP from John Snow Labs to extract embeddings from my text data. The pipeline is shown below. The model is 1.8g after saving it to HDFS.

embeddings = BertSentenceEmbeddings.pretrained("labse", "xx") \
    .setInputCols("sentence") \
    .setOutputCol("sentence_embeddings")

nlp_pipeline = Pipeline(stages=[document_assembler, sentence_detector, embeddings])

pipeline_model = nlp_pipeline.fit(spark.createDataFrame([[""]]).toDF("text"))

I saved the pipeline_model into HDFS using pipeline_model.save("hdfs:///<path>").

The code above was executed only once.

In another script, I load the stored pipeline from HDFS using pipeline_model = PretrainedPipeline.from_disk("hdfs:///<path>").

This loads the model, but it takes too long. I first tested it in Spark local mode (no cluster), on a machine with plenty of resources: 94g RAM and 32 cores.

Later, I deployed the script on YARN with 12 executors, each with 3 cores and 7g RAM. I assigned 10g of driver memory.

The script again takes too much time just to load the saved model from HDFS.

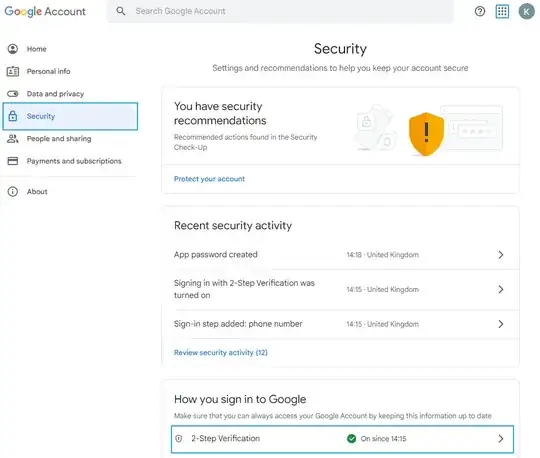

When Spark reaches this point (see the screenshot above), it takes a very long time to proceed.

I thought of one approach:

Preloading

My idea is to pre-load the model into memory once, so that when the script needs to apply the transformation to a dataframe, it can use a reference to the already-loaded pipeline without any disk I/O. I searched for a way to do this but found nothing.

Please let me know what you think of this approach and what the best way to achieve it would be.
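To make the idea concrete, here is a minimal sketch of what I have in mind. It assumes a single long-running driver process, and it assumes the saved pipeline can be read back with pyspark's PipelineModel.load. The names get_pipeline, _default_loader and _PIPELINE_CACHE are hypothetical and are mine, not part of Spark NLP.

```python
from typing import Any, Callable, Dict

# Hypothetical module-level cache: saved-pipeline path -> loaded pipeline object.
_PIPELINE_CACHE: Dict[str, Any] = {}


def _default_loader(path: str) -> Any:
    # Assumption: the pipeline was written with pipeline_model.save(path),
    # so pyspark's PipelineModel.load can read it back.
    # Imported lazily so this module imports even without pyspark installed.
    from pyspark.ml import PipelineModel
    return PipelineModel.load(path)


def get_pipeline(path: str, loader: Callable[[str], Any] = _default_loader) -> Any:
    """Return the pipeline stored at `path`, loading it at most once per process."""
    if path not in _PIPELINE_CACHE:
        _PIPELINE_CACHE[path] = loader(path)  # the slow disk read happens only here
    return _PIPELINE_CACHE[path]
```

With this, every call after the first, such as get_pipeline("hdfs:///<path>").transform(df), would reuse the same object. The cache lives only inside one Python process, so a fresh spark-submit would still pay the full load cost once.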

Resources on YARN

| Node Name | Count | RAM (each) | Cores (each) |
|---|---|---|---|
| Master Node | 1 | 38g | 8 |
| Secondary Node | 1 | 38g | 8 |
| Worker Nodes | 4 | 24g | 4 |
| Total | 6 | 172g | 32 |
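For reference, this is a rough calculation of what my YARN submission requests compared with what the worker nodes offer. It is only a sketch: it assumes Spark's default executor memory overhead (the larger of 384 MiB and 10% of executor memory), and it assumes that only the four worker nodes host executors, which I have not verified.

```python
# Figures copied from the post.
NUM_EXECUTORS = 12
EXECUTOR_CORES = 3
EXECUTOR_MEMORY_GB = 7
WORKER_NODES = 4
WORKER_RAM_GB = 24
WORKER_CORES = 4

# Assumption: default overhead is max(384 MiB, 10% of executor memory).
OVERHEAD_FACTOR = 0.10
MIN_OVERHEAD_GB = 384 / 1024


def container_memory_gb(heap_gb: float) -> float:
    """Approximate YARN container size for one executor: heap plus overhead."""
    return heap_gb + max(MIN_OVERHEAD_GB, OVERHEAD_FACTOR * heap_gb)


requested_cores = NUM_EXECUTORS * EXECUTOR_CORES
requested_memory_gb = NUM_EXECUTORS * container_memory_gb(EXECUTOR_MEMORY_GB)

# Assumption: executors run only on the worker nodes.
worker_cores = WORKER_NODES * WORKER_CORES
worker_memory_gb = WORKER_NODES * WORKER_RAM_GB

print(f"cores:  requested {requested_cores}, on workers {worker_cores}")
print(f"memory: requested {requested_memory_gb:.1f}g, on workers {worker_memory_gb}g")
```

Under these assumptions the executors ask for 36 cores in total, while the worker nodes have 16 between them.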

Thanks