I want to train on my local GPU, but training runs only on the CPU, even though `torch.cuda.is_available()` returns `True` and I can see my GPU. How can I fix this?

My CNN model:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from PIL import ImageFile

ImageFile.LOAD_TRUNCATED_IMAGES = True

# check whether a CUDA device is available (prints "TRUE" below)
use_cuda = torch.cuda.is_available()

# define the CNN architecture
class Net(nn.Module):
    ### TODO: choose an architecture, and complete the class
    def __init__(self):
        super(Net, self).__init__()
        ## Define layers of a CNN
        # convolutional layer (sees 224x224x3 image tensor)
        self.conv1 = nn.Conv2d(3, 16, 3, padding=1)
        # convolutional layer (sees 112x112x16 tensor)
        self.conv2 = nn.Conv2d(16, 32, 3, padding=1)
        # convolutional layer (sees 56x56x32 tensor)
        self.conv3 = nn.Conv2d(32, 64, 3, padding=1)
        # max pooling layer (halves height and width)
        self.pool = nn.MaxPool2d(2, 2)
        # linear layer (64 * 28 * 28 -> 500)
        self.fc1 = nn.Linear(64 * 28 * 28, 500)
        # linear layer (500 -> 133)
        self.fc2 = nn.Linear(500, 133)
        # dropout layer (p=0.25)
        self.dropout = nn.Dropout(0.25)

    def forward(self, x):
        ## Define forward behavior
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = self.pool(F.relu(self.conv3(x)))
        # flatten image input
        x = x.view(-1, 64 * 28 * 28)
        # add dropout layer
        x = self.dropout(x)
        # add 1st hidden layer, with relu activation function
        x = F.relu(self.fc1(x))
        # add dropout layer
        x = self.dropout(x)
        # add 2nd layer (output logits, no activation)
        x = self.fc2(x)
        return x

#-#-# You do NOT have to modify the code below this line. #-#-#

# instantiate the CNN
model_scratch = Net()

# move the model's parameters to the GPU if CUDA is available
if use_cuda:
    print("TRUE")
    model_scratch = model_scratch.cuda()
```
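The `64 * 28 * 28` input size of `fc1` follows from the layer settings: each 3x3 convolution with `padding=1` keeps height and width unchanged, and each 2x2 max-pooling layer halves them. A minimal sketch of that arithmetic, assuming square 224x224 input images (the image size is not stated in the question; it is inferred from `64 * 28 * 28`). `conv_out`, `pool_out`, and `flattened_size` are hypothetical helpers, not PyTorch functions:

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Output height/width of a Conv2d layer (dilation=1)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Output height/width of a MaxPool2d layer with no padding."""
    return (size - kernel) // stride + 1


def flattened_size(input_size, channels=(16, 32, 64)):
    """Features fed to fc1 after each conv -> relu -> pool stage.

    Hypothetical helper; assumes square inputs and the layer settings of Net.
    Returns (number of features, final spatial size).
    """
    size = input_size
    for _ in channels:
        size = pool_out(conv_out(size))
    return channels[-1] * size * size, size


features, spatial = flattened_size(224)
print(spatial, features)  # 28 50176
```

If the images were resized to a different size (for example 256x256, which gives 32x32 after three pools), the numbers in `fc1` and in `x.view(-1, 64 * 28 * 28)` would no longer match the tensor coming out of `conv3`.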

Train function:

```python
import numpy as np
import torch

def train(n_epochs, loaders, model, optimizer, criterion, use_cuda, save_path):
    """returns trained model"""
    # initialize tracker for minimum validation loss
    valid_loss_min = np.inf

    for epoch in range(1, n_epochs + 1):
        # initialize variables to monitor training and validation loss
        train_loss = 0.0
        valid_loss = 0.0

        ###################
        # train the model #
        ###################
        model.train()
        for batch_idx, (data, target) in enumerate(loaders['train']):
            # move to GPU
            if use_cuda:
                data, target = data.cuda(), target.cuda()
            ## find the loss and update the model parameters accordingly
            ## record the average training loss, using something like
            ## train_loss = train_loss + ((1 / (batch_idx + 1)) * (loss.data - train_loss))
            # clear the gradients of all optimized variables
            optimizer.zero_grad()
            # forward pass: compute predicted outputs by passing inputs to the model
            output = model(data)
            # calculate the batch loss
            loss = criterion(output, target)
            # backward pass: compute gradient of the loss with respect to model parameters
            loss.backward()
            # perform a single optimization step (parameter update)
            optimizer.step()
            # update training loss (sum of per-sample losses)
            train_loss += loss.item() * data.size(0)

        ######################
        # validate the model #
        ######################
        model.eval()
        with torch.no_grad():  # no gradients are needed for validation
            for batch_idx, (data, target) in enumerate(loaders['valid']):
                # move to GPU
                if use_cuda:
                    data, target = data.cuda(), target.cuda()
                ## update the average validation loss
                output = model(data)
                # calculate the batch loss
                loss = criterion(output, target)
                # update validation loss (sum of per-sample losses)
                valid_loss += loss.item() * data.size(0)

        # calculate average losses
        train_loss = train_loss / len(loaders['train'].dataset)
        valid_loss = valid_loss / len(loaders['valid'].dataset)

        # print training/validation statistics
        print('Epoch: {} \tTraining Loss: {:.6f} \tValidation Loss: {:.6f}'.format(
            epoch, train_loss, valid_loss))

        ## TODO: save the model if validation loss has decreased
        if valid_loss <= valid_loss_min:
            print('Validation loss decreased ({:.6f} --> {:.6f}). Saving model ...'.format(
                valid_loss_min, valid_loss))
            torch.save(model.state_dict(), save_path)
            valid_loss_min = valid_loss

    # return trained model
    return model

# train the model
loaders_scratch = {'train': train_loader, 'valid': valid_loader, 'test': test_loader}
model_scratch = train(100, loaders_scratch, model_scratch, optimizer_scratch,
                      criterion_scratch, use_cuda, 'model_scratch.pt')

# load the model that got the lowest validation loss
model_scratch.load_state_dict(torch.load('model_scratch.pt'))
```
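The commented hint in the training loop (`train_loss = train_loss + ((1 / (batch_idx + 1)) * (loss.data - train_loss))`) and the code actually used compute slightly different averages. The hint is an incremental mean of the per-batch losses, while summing `loss.item() * data.size(0)` and dividing by the dataset length gives a per-sample mean, assuming `criterion` returns the mean loss over the batch (the default `reduction='mean'` of losses such as `nn.CrossEntropyLoss`). A minimal pure-Python sketch with made-up batch losses and sizes (illustrative values only, not results from this model):

```python
def running_batch_mean(batch_losses):
    """Incremental mean over batches, as in the commented hint in train()."""
    avg = 0.0
    for batch_idx, loss in enumerate(batch_losses):
        avg = avg + (1 / (batch_idx + 1)) * (loss - avg)
    return avg


def per_sample_mean(batch_losses, batch_sizes):
    """Sum of (mean batch loss * batch size), divided by the number of samples."""
    total = 0.0
    for loss, size in zip(batch_losses, batch_sizes):
        total += loss * size
    return total / sum(batch_sizes)


# Illustrative values only: two full batches of 20 and a final batch of 5.
losses = [1.0, 2.0, 4.0]
sizes = [20, 20, 5]

print(running_batch_mean(losses))      # equals (1 + 2 + 4) / 3
print(per_sample_mean(losses, sizes))  # equals (20 + 40 + 20) / 45
```

With equal batch sizes the two agree; when the last batch is smaller, only the per-sample version used in `train()` equals the mean loss over the whole dataset.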

Even though `torch.cuda.is_available()` returns `True` (the script prints "TRUE"), training still does not run on the GPU; it runs only on the CPU.

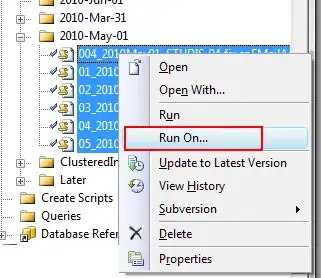

The screenshot above shows the CPU running at 62% during training.