I am trying to replicate the results from the "Enhancing performance" guide in the pandas documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/enhancingperf.html

The numba implementation of the example should be very fast, even faster than the Cython implementation. I successfully implemented the Cython code. Numba should be even easier to use (just add a decorator, right?), but my numba version is extremely slow, slower even than the plain Python implementation. Does anyone have an idea why?

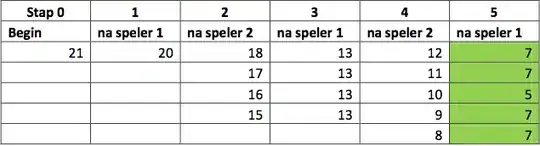

Results:

Code:

    import time

    import numba
    import numpy as np
    import pandas as pd

    import cpythonfunctions  # my compiled Cython module (not shown)


    def f(x):
        return x * (x - 1)


    def integrate_f(a, b, N):
        s = 0
        dx = (b - a) / N
        for i in range(N):
            s += f(a + i * dx)
        return s * dx


    @numba.jit
    def f_plain_numba(x):
        return x * (x - 1)


    @numba.jit(nopython=True)
    def integrate_f_numba(a, b, N):
        s = 0
        dx = (b - a) / N
        for i in range(N):
            s += f_plain_numba(a + i * dx)
        return s * dx


    @numba.jit(nopython=True)
    def apply_integrate_f_numba(col_a, col_b, col_N):
        n = len(col_N)
        result = np.empty(n, dtype="float64")
        assert len(col_a) == len(col_b) == n
        for i in range(n):
            result[i] = integrate_f_numba(col_a[i], col_b[i], col_N[i])
        return result


    if __name__ == "__main__":
        df = pd.DataFrame(
            {
                "a": np.random.randn(1000),
                "b": np.random.randn(1000),
                "N": np.random.randint(100, 1000, (1000)),
                "x": "x",
            }
        )

        # --- Pure Python ---
        start = time.perf_counter()
        df.apply(lambda x: integrate_f(x["a"], x["b"], x["N"]), axis=1)
        print('pure python takes {}'.format(time.perf_counter() - start))

        # --- Cython ---
        # Cythonize the functions (plain Python functions compiled to C)
        start = time.perf_counter()
        df.apply(lambda x: cpythonfunctions.integrate_f_plain(x["a"], x["b"], x["N"]), axis=1)
        print('cython takes {}'.format(time.perf_counter() - start))

        # Create cdef and cpdef functions (typed functions, with int, double, etc.)
        start = time.perf_counter()
        df.apply(lambda x: cpythonfunctions.integrate_f_typed(x["a"], x["b"], x["N"]), axis=1)
        print('cython typed takes {}'.format(time.perf_counter() - start))

        # In the above function, most time was spent calling series.__getitem__ and series.__get_value
        # ---> This stems from the apply part. Cythonize this!
        start = time.perf_counter()
        cpythonfunctions.apply_integrate_f(df["a"].to_numpy(), df["b"].to_numpy(), df["N"].to_numpy())
        print('cython integrated takes {}'.format(time.perf_counter() - start))

        # Remove memory checks
        start = time.perf_counter()
        cpythonfunctions.apply_integrate_f_wrap(df["a"].to_numpy(), df["b"].to_numpy(), df["N"].to_numpy())
        print('cython integrated without memory checks takes {}'.format(time.perf_counter() - start))

        # --- Including JIT (numba) ---
        start = time.perf_counter()
        apply_integrate_f_numba(df["a"].to_numpy(), df["b"].to_numpy(), df["N"].to_numpy())
        print('numba.jit apply integrate takes {}'.format(time.perf_counter() - start))

Dependencies: