I'm trying to train a Haar cascade classifier with OpenCV 2.4 to detect the heads of squash rackets. Unfortunately, the accuracy is fairly poor, and I'd like to understand which part of my process is flawed. At this point I'm not worried about speed, as I won't be using it as a real-time detector.

Negative samples

- I used an online image database to obtain random pictures (of varying widths and heights).

- I also added a few squash-related negative images, such as empty courts or pictures of players on court where no racket head is visible (fewer than 20 in total).
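The -bg option of opencv_createsamples and opencv_traincascade takes a plain-text background description file with one image path per line (img/neg.txt above). A minimal sketch for generating it, assuming the negatives live in a hypothetical img/neg/ folder and that relative paths are resolved against the directory containing the background file (my understanding of how the OpenCV 2.4 tools read it; worth verifying on your setup). `write_background_file` is a hypothetical helper name.

```python
import os
from pathlib import Path

# Extensions treated as images (an assumption; adjust to your data).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def write_background_file(image_dir: Path, bg_file: Path) -> int:
    """Write one negative-image path per line and return how many were listed.

    Paths are written relative to bg_file's directory (assumed to be how the
    OpenCV 2.4 tools resolve them). No blank lines are emitted.
    """
    base = bg_file.parent.resolve()
    images = sorted(
        p.resolve() for p in image_dir.rglob("*")
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
    lines = [Path(os.path.relpath(p, base)).as_posix() for p in images]
    bg_file.write_text("".join(line + "\n" for line in lines))
    return len(lines)
```

For example, `write_background_file(Path("img/neg"), Path("img/neg.txt"))` would list every image under img/neg/ as `neg/<name>` in img/neg.txt.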

Positive samples

I created a total of 4168 positive samples, of which

- 168 are manually annotated frames from game recordings

- 4000 are samples created using opencv_createsamples

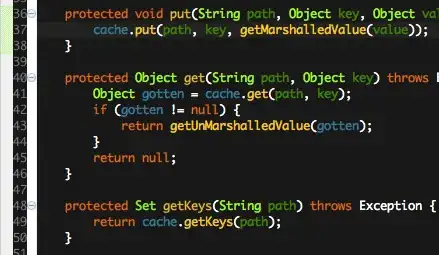

```shell
opencv_createsamples -img img/sample/r2_white.png -bg img/neg.txt -info img/generated/info.txt -pngoutput img/generated -maxxangle 0.85 -maxyangle -0.85 -maxzangle 0.85 -num 4000
```

I used relatively large maximum angles because I felt this would better represent how squash rackets appear in match recordings.
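The opencv_createsamples documentation specifies -maxxangle, -maxyangle and -maxzangle in radians, so a limit of 0.85 corresponds to about 48.7°. A quick conversion of the values used in the command above:

```python
import math


def radians_to_degrees(angle_rad: float) -> float:
    """Convert a createsamples rotation limit (given in radians) to degrees."""
    return math.degrees(angle_rad)


# The limits passed to opencv_createsamples above.
for flag, value in [("-maxxangle", 0.85), ("-maxyangle", -0.85), ("-maxzangle", 0.85)]:
    print(f"{flag} {value} rad = {radians_to_degrees(value):.1f} deg")
```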

Vector

After consolidating the annotations of the manually annotated and the generated samples into one file, I created the vector file with the following parameters:

```shell
opencv_createsamples -info img/pos_all.txt -num 4168 -w 25 -h 25 -vec model/vector/positives_all.vec -maxxangle 0.85 -maxyangle -0.85 -maxzangle 0.85
```
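For the consolidation step, each line of a createsamples-style info file is `<image path> <object count>` followed by one `x y w h` group per object. A minimal sketch of the merge, assuming (as I understand OpenCV 2.4's tools) that relative image paths are resolved against the directory of the info file they appear in, so they must be rewritten when files from different folders are merged into img/pos_all.txt. `merge_info_files` and the img/manual/info.txt path are hypothetical, and paths containing spaces are not supported. The returned object count is the most that -num can usefully be set to.

```python
import os
from pathlib import Path


def merge_info_files(inputs: list[Path], output: Path) -> int:
    """Merge createsamples-style info files and return the total object count.

    Line format: <image path> <count> followed by count groups of x y w h.
    Image paths are rewritten relative to the output file's directory.
    """
    out_dir = output.parent.resolve()
    merged, total = [], 0
    for info in inputs:
        src_dir = info.parent.resolve()
        for raw in info.read_text().splitlines():
            parts = raw.split()
            if not parts:
                continue  # skip blank lines
            path, count, boxes = parts[0], int(parts[1]), parts[2:]
            if len(boxes) != 4 * count:
                raise ValueError(f"{info}: expected {count} boxes in {raw!r}")
            rel = Path(os.path.relpath(src_dir / path, out_dir)).as_posix()
            merged.append(" ".join([rel, str(count), *boxes]))
            total += count
    output.write_text("".join(line + "\n" for line in merged))
    return total
```

Usage, with the hypothetical manual file: `merge_info_files([Path("img/manual/info.txt"), Path("img/generated/info.txt")], Path("img/pos_all.txt"))`.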

Training

I trained the model with the following parameters. Here too I added -mode ALL, as I felt that including rotated Haar features would better represent real-world squash games.

```shell
opencv_traincascade -data ../model -vec ../model/vector/positives_all.vec -bg neg.txt -numPos 3900 -numNeg 7000 -numStages 10 -w 25 -h 25 -numThreads 12 -maxFalseAlarmRate 0.3 -mode ALL -precalcValBufSize 3072 -precalcIdxBufSize 3072
```
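A commonly quoted rule of thumb from the OpenCV community (not part of the official tutorial) for how many positives the .vec file must hold is `numPos + (numStages - 1) * (1 - minHitRate) * numPos + S`, where S is the number of samples the cascade rejects outright and is unknown in advance. The same parameters also fix the overall targets the cascade aims for if each stage just meets its limits. A sketch of both calculations, assuming the default -minHitRate of 0.995 since the command does not set it; the function names are hypothetical.

```python
def min_vec_samples(num_pos: int, num_stages: int,
                    min_hit_rate: float = 0.995, skipped: int = 0) -> float:
    """Rule-of-thumb lower bound on the number of positives the .vec must hold.

    Each stage after the first may reject up to (1 - min_hit_rate) * num_pos
    positives, which are replaced from the .vec file; `skipped` stands for
    samples that the cascade rejects outright (unknown before training).
    """
    return num_pos + (num_stages - 1) * (1 - min_hit_rate) * num_pos + skipped


def cascade_targets(num_stages: int, min_hit_rate: float,
                    max_false_alarm: float) -> tuple[float, float]:
    """Overall (hit rate, false-alarm rate) if every stage just meets its limits."""
    return min_hit_rate ** num_stages, max_false_alarm ** num_stages


needed = min_vec_samples(num_pos=3900, num_stages=10)  # assumes default -minHitRate 0.995
hit, fa = cascade_targets(10, 0.995, 0.3)
print(f"positives needed in .vec: about {needed:.1f} (the .vec holds 4168)")
print(f"overall target hit rate: {hit:.3f}, false-alarm rate: {fa:.2e}")
```

With the parameters above this gives roughly 4075.5 positives (below the 4168 in the .vec), an overall hit-rate target of about 0.951 and a false-alarm target of about 5.9e-6. As I read the log, the second number in "consumed 3900 : 4095" is how many .vec samples had been read by the last stage.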

Training took about 10 hours in total, but even at the 100th weak classifier (N = 100) of the last stage, the false alarm rate (FA) was still 0.84, if I'm interpreting the training output correctly. The lowest value was 0.74, at the end of stage 5.

```
===== TRAINING 9-stage =====
<BEGIN
POS count : consumed 3900 : 4095
NEG count : acceptanceRatio 7000 : 0.0304295
Precalculation time: 16
| N | HR | FA |
|---|---|---|
| 1 | 1 | 1 |
| 2 | 1 | 1 |
| 3 | 1 | 1 |
| 4 | 1 | 1 |
| 5 | 1 | 1 |
| 6 | 1 | 1 |
| 7 | 1 | 0.998857 |
| ... | ... | ... |
| 98 | 0.995128 | 0.840857 |
| 99 | 0.995128 | 0.850571 |
| 100 | 0.995128 | 0.842714 |
END>
```
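In the stage table, N is the number of weak classifiers added to the current stage so far, HR the hit rate and FA the false alarm rate on the training samples. As far as I know, a stage keeps adding weak classifiers until FA drops to -maxFalseAlarmRate (0.3 here) or until -maxWeakCount is reached (default 100), which would be consistent with the table ending at N = 100. A small sketch, with hypothetical helper names, that extracts the rows from a pasted log so FA can be tracked across stages:

```python
import re

# Numeric table rows such as "| 98 | 0.995128 | 0.840857 |" or "|  98| 0.995128| 0.840857|".
ROW = re.compile(r"^\|\s*(\d+)\s*\|\s*([\d.]+)\s*\|\s*([\d.]+)\s*\|")


def parse_stage_table(log: str) -> list[tuple[int, float, float]]:
    """Return (N, HR, FA) for each numeric row of a traincascade stage table.

    Header, separator and '...' rows do not match and are skipped.
    """
    rows = []
    for line in log.splitlines():
        match = ROW.match(line.strip())
        if match:
            n, hr, fa = match.groups()
            rows.append((int(n), float(hr), float(fa)))
    return rows


def reached_target(rows: list[tuple[int, float, float]], max_false_alarm: float) -> bool:
    """True if FA fell to the per-stage -maxFalseAlarmRate at any point."""
    return any(fa <= max_false_alarm for _, _, fa in rows)


# Rows copied from the stage 9 output above.
stage_9 = """
| N | HR | FA |
|---|---|---|
| 1 | 1 | 1 |
| 7 | 1 | 0.998857 |
| ... | ... | ... |
| 98 | 0.995128 | 0.840857 |
| 99 | 0.995128 | 0.850571 |
| 100 | 0.995128 | 0.842714 |
"""

rows = parse_stage_table(stage_9)
print(rows[-1], "target reached:", reached_target(rows, 0.3))
```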

Outcome

The classifier performs poorly: it produces many false positives and also misses many racket heads (false negatives).

I experimented with the minNeighbors and scaleFactor parameters, to no avail. In the example result I'm using detectMultiScale(gray, 2, 75), i.e. scaleFactor = 2 and minNeighbors = 75.
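scaleFactor controls how coarsely detectMultiScale searches over object sizes: the 25×25 training window is enlarged by scaleFactor at each step until it no longer fits the image. The sketch below is a simplified model of that loop (an assumption, not OpenCV's exact implementation, which also applies minSize/maxSize and may round differently), evaluated for a hypothetical 1280×720 video frame.

```python
def detection_window_sizes(img_w: int, img_h: int, win_w: int = 25, win_h: int = 25,
                           scale_factor: float = 1.1) -> list[tuple[int, int]]:
    """Window sizes scanned by a simplified multi-scale sliding-window search.

    Simplified model (assumption): the training window is scaled by
    scale_factor ** k for k = 0, 1, 2, ... until it no longer fits the image.
    """
    if scale_factor <= 1.0:
        raise ValueError("scale_factor must be greater than 1")
    sizes = []
    factor = 1.0
    while True:
        w, h = round(win_w * factor), round(win_h * factor)
        if w > img_w or h > img_h:
            break
        sizes.append((w, h))
        factor *= scale_factor
    return sizes


# Hypothetical 1280x720 frame, 25x25 training window.
for sf in (2.0, 1.1):
    sizes = detection_window_sizes(1280, 720, scale_factor=sf)
    print(f"scaleFactor={sf}: {len(sizes)} window sizes, smallest {sizes[:3]}, largest {sizes[-1]}")
```

Under this model, scaleFactor = 2 tries only 5 window sizes (25, 50, 100, 200 and 400 pixels), while 1.1 tries 36, so with a factor of 2 a racket head whose apparent size falls between two levels is only found if the cascade tolerates that size mismatch.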

Questions

- Is my use case realistic? Is there anything that makes rackets particularly hard to detect?

- Are my positive samples sufficient?

- Could the rotation angles, or the lack of a transparent background in the generated samples, be a problem?

- Or is the ratio of manually annotated to generated samples (168:4000) too low?

- Is the ratio of positive to negative samples used for training (3900:7000) appropriate?

- Is my approach to training appropriate?

- Is there anything wrong with my training parameters (e.g. the sample window width/height, -w/-h, given the shape of a racket head)?

- What could be the reason for my false alarm rate to stagnate during training?
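On the window size question: whether a square 25×25 window suits the shape of an annotated racket head can be checked against the annotations themselves. A minimal sketch that reads the createsamples info-file format (image path, object count, then x y w h per object) and reports width/height ratios; `window_for_ratio` is a hypothetical helper that keeps the height fixed, and the sample lines are made up for illustration, not taken from the real annotations. Because rackets appear at many orientations, the spread of ratios may be as informative as the median.

```python
from statistics import median


def box_aspect_ratios(info_text: str) -> list[float]:
    """Width/height ratio of every box in a createsamples-style info file."""
    ratios = []
    for line in info_text.splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        count = int(parts[1])
        nums = [int(v) for v in parts[2:2 + 4 * count]]
        for i in range(count):
            _, _, w, h = nums[4 * i:4 * i + 4]
            ratios.append(w / h)
    return ratios


def window_for_ratio(ratio: float, height: int = 25) -> tuple[int, int]:
    """Hypothetical helper: a (-w, -h) pair with fixed height and the given ratio."""
    return max(1, round(ratio * height)), height


# Made-up annotation lines, for illustration only.
sample = "shot1.png 1 10 20 30 40\nshot2.png 2 0 0 24 36 50 50 20 30\n"
ratios = box_aspect_ratios(sample)
print(f"median w/h = {median(ratios):.2f}, window = {window_for_ratio(median(ratios))}")
```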