I installed Airflow 2.0 on Docker Swarm with the Celery Executor.

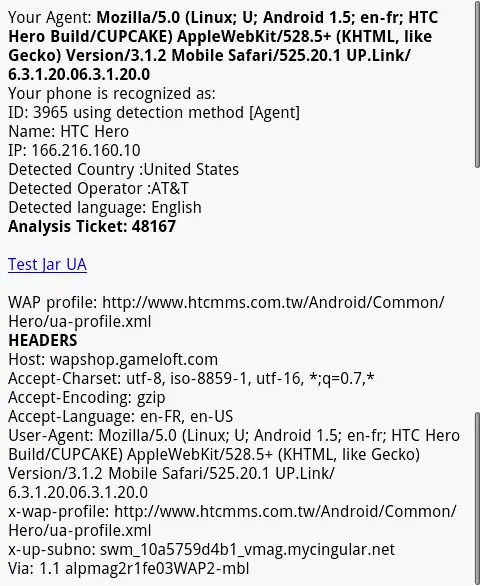

After one week, memory on the Celery workers fills up with airflow task supervisor processes (see the screenshot above).

Has anyone faced this issue? Any suggestions?


Ganesh


- There is a Slack channel and a mailing list for Apache Airflow; perhaps you could ask there as well. – griffin_cosgrove May 20 '21 at 13:33

- I solved it by setting `execute_tasks_new_python_interpreter = True`. – Ganesh May 21 '21 at 13:13

- Post that as an answer and accept it, so others can see it. – griffin_cosgrove May 21 '21 at 13:18

1 Answer


In Airflow 2.0, there are two ways of creating the child process that runs a task:

1. forking the parent process (fast)

2. spawning a new Python process using Python's subprocess module (slow)
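To make the difference between the two approaches concrete, here is a minimal standalone sketch. It is not Airflow's actual code: `run_in_fork` and `run_in_new_interpreter` are hypothetical helper names, and the sketch assumes a Unix-like system where `os.fork` is available.

```python
import os
import subprocess
import sys


def run_in_fork(func):
    """Run func in a forked child and return the child's exit code.

    Hypothetical helper for illustration only.
    """
    pid = os.fork()
    if pid == 0:
        # Child: starts as a copy of the parent, so already-imported
        # modules are available immediately. That is why forking is fast.
        try:
            func()
        except BaseException:
            os._exit(1)
        os._exit(0)
    # Parent: wait for the child so it is reaped and does not linger.
    _, status = os.waitpid(pid, 0)
    return os.WEXITSTATUS(status) if os.WIFEXITED(status) else -1


def run_in_new_interpreter(code):
    """Run a code string in a fresh Python interpreter and return its exit code.

    Hypothetical helper for illustration only.
    """
    # A brand-new process: slower to start, but it shares no state
    # with the parent and is gone once it exits.
    result = subprocess.run([sys.executable, "-c", code], check=False)
    return result.returncode


if __name__ == "__main__":
    print(run_in_fork(lambda: None))
    print(run_in_new_interpreter("pass"))
```

In both variants the parent has to wait for the child; a forked child that is never waited on, or never exits, keeps occupying resources on the worker.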

By default, Airflow 2.0 uses method (1). Forking the parent process is faster, but in my setup the child processes were not killed after the task completed. The number of child processes kept increasing until memory was exhausted.

I switched to the subprocess method (2) by setting `execute_tasks_new_python_interpreter = True`. With this setting, a new Python process is created for every task and is killed when the task finishes. This might be slower, but memory is used effectively.
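In a Docker Swarm deployment, it is often easier to pass this option as an environment variable than to edit `airflow.cfg`. Airflow reads options from variables named `AIRFLOW__{SECTION}__{KEY}`, and this option lives in the `[core]` section. The small sketch below builds that variable name; `airflow_env_var` is a hypothetical helper for illustration, not part of Airflow.

```python
def airflow_env_var(section: str, key: str) -> str:
    """Build the environment variable name for an Airflow config option.

    Hypothetical helper; it follows the AIRFLOW__{SECTION}__{KEY} naming
    convention (note the double underscores).
    """
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


if __name__ == "__main__":
    name = airflow_env_var("core", "execute_tasks_new_python_interpreter")
    # Line to add to the environment of the worker service.
    print(f"{name}=True")
    # prints: AIRFLOW__CORE__EXECUTE_TASKS_NEW_PYTHON_INTERPRETER=True
```

The variable most likely needs to be set on the Celery worker containers, since that is where the task processes are started.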

Ganesh


- You say "On the other hand, the child process is not killed after the task completion". Why does the child process have to be killed on success? On success it exits with exit code 0. The parent process waits for its completion and then exits too. What is the difference from the subprocess method? – Anton Bryzgalov May 03 '22 at 20:06