When I fit a classification model with ranger and extract a tree with treeInfo(), I sometimes see a split whose two terminal nodes have the same prediction.

Is this expected behaviour? Why does it make sense to introduce a split where the final nodes are the same?

From this question, I gather that the prediction column could be the majority class (although that question concerns Python and a different random forest implementation). The ranger ?treeInfo documentation says it should be the predicted class.

MWE

    library(ranger)

    data <- iris
    data$is_versicolor <- factor(data$Species == "versicolor")
    data$Species <- NULL

    rf <- ranger(is_versicolor ~ ., data = data,
                 num.trees = 1, # no need for many trees in this example
                 max.depth = 3, # keep depth at an understandable level
                 seed = 1351, replace = FALSE)

    treeInfo(rf, 1)
    #>   nodeID leftChild rightChild splitvarID splitvarName splitval terminal prediction
    #> 1      0         1          2          2 Petal.Length     2.60    FALSE       <NA>
    #> 2      1        NA         NA         NA         <NA>       NA     TRUE      FALSE
    #> 3      2         3          4          3  Petal.Width     1.75    FALSE       <NA>
    #> 4      3         5          6          2 Petal.Length     4.95    FALSE       <NA>
    #> 5      4         7          8          0 Sepal.Length     5.95    FALSE       <NA>
    #> 6      5        NA         NA         NA         <NA>       NA     TRUE       TRUE
    #> 7      6        NA         NA         NA         <NA>       NA     TRUE       TRUE
    #> 8      7        NA         NA         NA         <NA>       NA     TRUE      FALSE
    #> 9      8        NA         NA         NA         <NA>       NA     TRUE      FALSE

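In this example, the last four rows are terminal nodes that form two sibling pairs with identical predictions: nodeID 5 and 6 (children of node 3) both predict TRUE, and nodeID 7 and 8 (children of node 4) both predict FALSE.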
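To find such pairs without reading the table by eye, something like the following might work. This is only a rough sketch: the data frame is typed in by hand from the treeInfo() output above (only the columns needed here), and find_redundant_splits() is a hypothetical helper of my own, not a ranger function.

```r
# Tree table copied by hand from the treeInfo(rf, 1) output above.
# With the fitted model at hand, `tree <- treeInfo(rf, 1)` should work instead.
tree <- data.frame(
  nodeID     = 0:8,
  leftChild  = c(1, NA, 3, 5, 7, NA, NA, NA, NA),
  rightChild = c(2, NA, 4, 6, 8, NA, NA, NA, NA),
  terminal   = c(FALSE, TRUE, FALSE, FALSE, FALSE, TRUE, TRUE, TRUE, TRUE),
  prediction = factor(c(NA, "FALSE", NA, NA, NA, "TRUE", "TRUE", "FALSE", "FALSE"))
)

# Hypothetical helper (not part of ranger): return the split nodes whose
# two children are both terminal and carry the same prediction.
find_redundant_splits <- function(tree) {
  splits <- tree[!tree$terminal, ]
  # nodeID is 0-based, so look rows up by matching IDs, not by row position
  left  <- tree[match(splits$leftChild,  tree$nodeID), ]
  right <- tree[match(splits$rightChild, tree$nodeID), ]
  same <- left$terminal & right$terminal &
    as.character(left$prediction) == as.character(right$prediction)
  data.frame(
    nodeID     = splits$nodeID,
    leftChild  = splits$leftChild,
    rightChild = splits$rightChild,
    prediction = as.character(left$prediction)
  )[which(same), ]  # which() drops the NA comparisons from non-terminal children
}

redundant <- find_redundant_splits(tree)
print(redundant)
```

For the tree above, this flags the splits at nodeID 3 and nodeID 4, which are exactly the two splits I am asking about.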

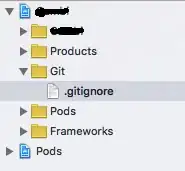

Graphically this would look like this