I'm running JupyterHub with the PySpark3 kernel on an AWS EMR cluster. As you may know, the PySpark3 kernel in JupyterHub on EMR runs workloads through a Livy session, which submits them to the EMR cluster's YARN scheduler. My question is about the Spark configuration: executor memory/cores, driver memory/cores, etc.

There is already a default configuration in the `session_configs` section of Jupyter's sparkmagic `config.json` file:

```json
...
"session_configs": {
    "executorMemory": "4096M",
    "executorCores": 2,
    "driverCores": 2,
    "driverMemory": "4096M",
    "numExecutors": 2
},
...
```
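For context, sparkmagic uses `session_configs` as the body of the Livy `POST /sessions` request that creates the session. The sketch below only builds (and does not send) such a request with the standard library. The host name `emr-master` is hypothetical, 8998 is Livy's default port, and the `kind` value `"pyspark"` is an assumption: the exact kind the PySpark3 kernel sends depends on the sparkmagic and Livy versions.

```python
import json
import urllib.request

# Hypothetical Livy endpoint: the host name is made up, 8998 is Livy's default port.
LIVY_URL = "http://emr-master:8998"

# Values copied from the session_configs block in config.json.
session_configs = {
    "executorMemory": "4096M",
    "executorCores": 2,
    "driverCores": 2,
    "driverMemory": "4096M",
    "numExecutors": 2,
}


def build_session_request(livy_url: str, configs: dict, kind: str = "pyspark") -> urllib.request.Request:
    """Build, but do not send, a Livy POST /sessions request from session_configs."""
    body = dict(configs)
    body["kind"] = kind  # assumption: "pyspark"; older setups used a separate "pyspark3" kind
    return urllib.request.Request(
        url=f"{livy_url}/sessions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_session_request(LIVY_URL, session_configs)
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

The point of the sketch is that these values reach Spark as session settings, not as anything read from `spark-defaults.conf`.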

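We can override this configuration with sparkmagic's `%%configure` magic (`-f` forces the current session to be dropped and recreated with the new settings):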

```
%%configure -f
{"conf": {"spark.pyspark.python": "python3",
          "spark.pyspark.virtualenv.enabled": "true",
          "spark.pyspark.virtualenv.type": "native",
          "spark.pyspark.virtualenv.bin.path": "/usr/bin/virtualenv",
          "spark.executor.memory": "2g",
          "spark.driver.memory": "2g",
          "spark.executor.cores": "1",
          "spark.num.executors": "1",
          "spark.driver.maxResultSize": "2g",
          "spark.yarn.executor.memoryOverhead": "2g",
          "spark.yarn.driver.memoryOverhead": "2g",
          "spark.yarn.queue": "default"
         }
}
```
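As I understand it, `%%configure` replaces `session_configs` with its JSON, so Livy receives only a `conf` map here. Livy's top-level fields (`executorMemory`, `numExecutors`, ...) are translated into Spark properties roughly as in the hedged sketch below; the mapping table and the rule that a top-level field wins over the same key in `conf` are assumptions, and the helper name is hypothetical. It also highlights that Spark documents the executor count as `spark.executor.instances`; a key such as `spark.num.executors` is, as far as I know, not read by Spark.

```python
# Hedged sketch: approximate mapping from Livy's top-level session fields to Spark
# properties. Both the table and the override rule below are assumptions.
LIVY_FIELD_TO_SPARK_PROP = {
    "driverMemory": "spark.driver.memory",
    "driverCores": "spark.driver.cores",
    "executorMemory": "spark.executor.memory",
    "executorCores": "spark.executor.cores",
    "numExecutors": "spark.executor.instances",
    "queue": "spark.yarn.queue",
}


def session_body_to_spark_conf(body: dict) -> dict:
    """Hypothetical helper: flatten a Livy POST /sessions body into Spark properties."""
    conf = {k: str(v) for k, v in body.get("conf", {}).items()}
    for field, prop in LIVY_FIELD_TO_SPARK_PROP.items():
        if field in body:
            # Assumption: an explicit top-level field overrides the same key in "conf".
            conf[prop] = str(body[field])
    return conf


# A subset of the %%configure body from the question: only "conf", no top-level fields.
configure_body = {
    "conf": {
        "spark.executor.memory": "2g",
        "spark.driver.memory": "2g",
        "spark.executor.cores": "1",
        "spark.num.executors": "1",
    }
}

resolved = session_body_to_spark_conf(configure_body)
print(resolved.get("spark.executor.instances", "<not set>"))  # the executor-count key Spark reads
print(resolved["spark.num.executors"])  # passed through, but not a documented Spark property
```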

There is also a configuration in the `spark-defaults.conf` file on the master node of the EMR cluster:

```
spark.executor.memory 2048M
spark.driver.memory 2048M
spark.yarn.driver.memoryOverhead 409M
spark.executor.cores 2
...
```
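The Spark configuration docs describe a precedence order: properties set directly on a `SparkConf` win, then flags passed to `spark-submit`, then `spark-defaults.conf`. Livy launches the session through `spark-submit`, so the session settings sit in the middle layer. The minimal sketch below models that layering with plain dictionaries; the helper names are hypothetical, and the parser is simplified (it only handles whitespace-separated `key value` lines and ignores the `=`/`:` separators that Java properties files also allow).

```python
def parse_spark_defaults(text: str) -> dict:
    """Parse 'key value' lines as found in spark-defaults.conf (simplified)."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split(None, 1)  # key, then the rest of the line as the value
        if len(parts) == 2:
            props[parts[0]] = parts[1].strip()
    return props


def resolve_spark_conf(defaults: dict, submit_conf: dict, code_conf: dict) -> dict:
    """Merge configuration layers from lowest to highest precedence."""
    merged = dict(defaults)     # lowest: spark-defaults.conf
    merged.update(submit_conf)  # next: --conf/flags passed to spark-submit (Livy session settings)
    merged.update(code_conf)    # highest: values set directly on SparkConf in the application
    return merged


defaults_text = """
spark.executor.memory 2048M
spark.driver.memory 2048M
spark.yarn.driver.memoryOverhead 409M
spark.executor.cores 2
"""

# Subset of the %%configure settings from the question, as the Livy session would pass them.
session_conf = {
    "spark.executor.memory": "2g",
    "spark.driver.memory": "2g",
    "spark.executor.cores": "1",
}

effective = resolve_spark_conf(parse_spark_defaults(defaults_text), session_conf, {})
for key in sorted(effective):
    print(key, "=", effective[key])
```

The code layer is passed empty on purpose: in a Livy session the SparkContext already exists before any notebook code runs, so resource settings such as memory and cores cannot be changed from there.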

Which configuration will be used when I initiate a SparkSession to run a Spark application on the YARN cluster?
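One way to check empirically is to read the values back from the running session. `SparkContext.getConf()` returns a copy of the `SparkConf` the context was started with, and `SparkConf.get(key, defaultValue)` looks up a key; the helper name `effective_conf` and the key list are illustrative choices, not part of any API. The Environment tab of the Spark UI shows the same properties.

```python
# Keys worth checking; spark.executor.instances may be unset, e.g. when dynamic
# allocation decides the executor count.
KEYS = [
    "spark.executor.memory",
    "spark.executor.cores",
    "spark.executor.instances",
    "spark.driver.memory",
    "spark.driver.cores",
    "spark.yarn.queue",
]


def effective_conf(conf, keys):
    """Return {key: value} from a SparkConf-like object, marking missing keys."""
    return {key: conf.get(key, "<not set>") for key in keys}


# In a PySpark3 notebook cell, where Livy has already created `spark`:
# for key, value in effective_conf(spark.sparkContext.getConf(), KEYS).items():
#     print(key, "=", value)
```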

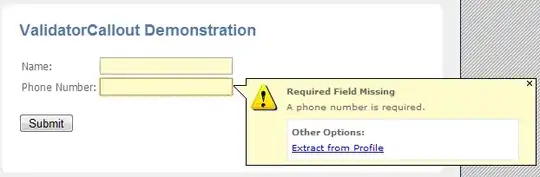

Please find the image of a running spark application on the YARN Scheduler: