Problem

I am trying to use a model which requires me to transform, very large rastersStacks (around 10 million cells) to a data.frame, I am doing this on a shared server, and because of that, I am trying to optimize to reduce the RAM used, and hopefully not reduce the speed enormously. For that, I have written 2 functions, but I have not been successful. an RPUBS with the Results of my attempts is in this link, and a github with the rmd for that is here including the rds files for the profvis profiling.

First function

First lets load the packages we will need:

# For spaital manipulation

library(raster)

# For benchmarking speed and memory

library(profvis)

# To parallelize operations

library(doParallel)

# To parallelize operations

library(foreach)

# For combination and looping

library(purrr)

# Data wranggling

library(dplyr)

library(data.table)

Tiling

The main way to reduce the RAM usage is instead of processing one big raster is to transform it into tiled rasters, for that I developed the following function:

SplitRas <- function(Raster,ppside, nclus = 1){

TempRast <- paste0(getwd(), "/Temp")

h <- ceiling(ncol(Raster)/ppside)

v <- ceiling(nrow(Raster)/ppside)

agg <- aggregate(Raster,fact=c(h,v))

agg[] <- 1:ncell(agg)

agg_poly <- rasterToPolygons(agg)

names(agg_poly) <- "polis"

r_list <- list()

if(nclus == 1){

for(i in 1:ncell(agg)){

dir.create(TempRast)

rasterOptions(tmpdir=TempRast)

e1 <- extent(agg_poly[agg_poly$polis==i,])

r_list[[i]] <- crop(Raster,e1)

if((freq(r_list[[i]], value=NA)/ncell(r_list[[i]])) != 1){

writeRaster(r_list[[i]],filename=paste("SplitRas",i,sep=""),

format="GTiff",datatype="FLT4S",overwrite=TRUE)

}

unlink(TempRast, recursive = T, force = T)

}

} else if(nclus != 1){

cl <- parallel::makeCluster(nclus)

doParallel::registerDoParallel(cl)

r_list <- foreach(i = 1:ncell(agg), .packages = c("raster")) %dopar% {

dir.create(TempRast)

rasterOptions(tmpdir=TempRast)

e1 <- extent(agg_poly[agg_poly$polis==i,])

Temp <- crop(Raster,e1)

if((raster::freq(Temp, value=NA)/ncell(Temp)) != 1){

writeRaster(Temp,filename=paste("SplitRas",i,sep=""),

format="GTiff",datatype="FLT4S",overwrite=TRUE)

}

unlink(TempRast, recursive = T, force = T)

Temp

}

parallel::stopCluster(cl)

}

}

My thought is that if you process each tile separately, you can transform into dataframes, one by one, and take out rows with NAs and thus reducing the RAM usage.

This function has 3 arguments:

- Raster: The stack you want to divide into tiles

- ppside: The number of horizontal and vertical tiles you will end up, the final number of tiles will be ppside*ppside, so if ppside is 3, you will have 9 tiles

- nclus: The number of clusters you will use for the parallelizing and speeding up your processing.

At the end of this function, you will have ppside*ppside number of tiles, saved in your working directory all called SplitRasN.tif where N is the number of the tile. Just as an example we will use the bioclimatic variables available in the raster package:

Bios <- getData('worldclim', var='bio', res=10)

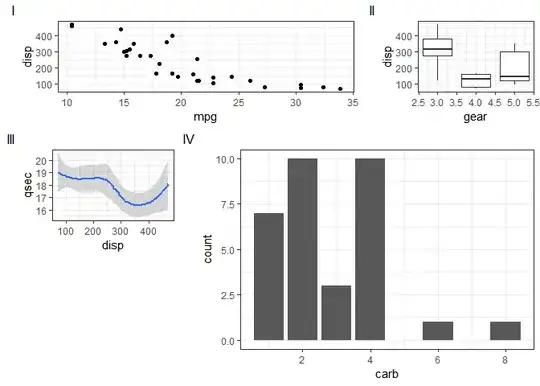

I will test this transforming this into a different number of tiles as shown in the following figure

Transformation from raster to tiles and then from tiles to data frame (Example)

so first we will use SplitRas function to get the 16 tiles using 4 cores:

SplitRas(Raster = Bios, ppside = 4, nclus = 4)

This will return the following files: r list.files(pattern = "SplitRas")

In order to get these tiles into one data frame with all the non-NA cells we need a list of the tiles, which we get with:

Files <- list.files(pattern = "SplitRas", full.names = T)

Which we can use then in the following function:

SplitsToDataFrame <- function(Splits, ncores = 1){

TempRast <- paste0(getwd(), "/Temp")

if(ncores == 1){

Temps <- list()

for(i in 1:length(Splits)){

dir.create(TempRast)

rasterOptions(tmpdir=TempRast)

Temp <- stack(Splits[i])

Temp <- as.data.frame(Temp, row.names = NULL, col.names = NULL, xy =TRUE)

colnames(Temp)[3:ncol(Temp)] <- paste0("Var", 1:length(3:ncol(Temp)))

Temps[[i]] <- Temp[complete.cases(Temp),]

gc()

unlink(TempRast, recursive = T, force = T)

message(i)

}

Temps <- data.table::rbindlist(Temps)

} else if(ncores > 1){

cl <- parallel::makeCluster(ncores)

doParallel::registerDoParallel(cl)

Temps <- foreach(i = 1:length(Splits), .packages = c("raster", "data.table")) %dopar%{

dir.create(TempRast)

rasterOptions(tmpdir=TempRast)

Temp <- stack(Splits[i])

Temp <- as.data.frame(Temp, row.names = NULL, col.names = NULL, xy =TRUE)

colnames(Temp)[3:ncol(Temp)] <- paste0("Var", 1:length(3:ncol(Temp)))

gc()

unlink(TempRast, recursive = T, force = T)

Temp[complete.cases(Temp),]

}

Temps <- data.table::rbindlist(Temps)

parallel::stopCluster(cl)

}

return(Temps)

}

Where Splits is a character vectors with the paths to the tiles, and ncores is the number of cores used for parallelization. This will result in the Data frame with the non-empty cells.

DF <- SplitsToDataFrame(Splits = Files, ncores = 4)

Memory benchmarking (What I have tried)

First I generated Tiles for 1, 2, 4, 8, 10 and 12 ppsides

sides <- c(1,2,4,8,10, 12)

Home <- getwd()

AllFiles <- list()

for(i in 1:length(sides)){

dir.create(path = paste0(Home, "/Sides_", sides[i]))

setwd(paste0(Home, "/Sides_", sides[i]))

SplitRas(Raster = Bios, ppside = sides[i], nclus = ifelse(sides[i] < 4, sides[i], 4))

AllFiles[[i]] <- list.files(pattern = "SplitRas", full.names = T) %>% stringr::str_replace_all("\\./", paste0(getwd(), "/"))

}

setwd(Home)

And then profiled the function using only the sequential option of the function

library(profvis)

P <- profvis({

P1 <- SplitsToDataFrame(Splits = AllFiles[[1]])

P2 <- SplitsToDataFrame(Splits = AllFiles[[2]])

P3 <- SplitsToDataFrame(Splits = AllFiles[[3]])

P4 <- SplitsToDataFrame(Splits = AllFiles[[4]])

P5 <- SplitsToDataFrame(Splits = AllFiles[[5]])

})

P

htmlwidgets::saveWidget(P, "profile.html")

saveRDS(P, "P.rds")

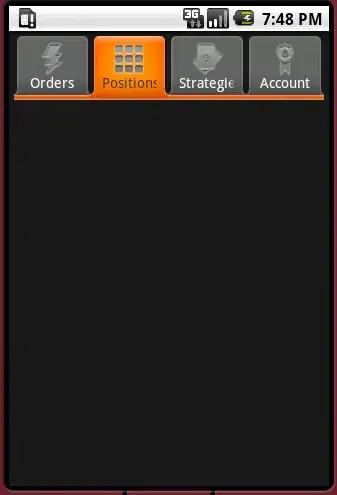

However as seen in the following figure (more detail can be found in the Rpubs linked above), RAM is more or less the same as before, but time went way up (That last part was expected).

Some extra stuff

Finally when I tried benchmarking the RAM usage in parallel it seems as if profvis does not capture this. Any idea on how to check that out?

library(profvis)

PPar <- profvis({

P1 <- SplitsToDataFrame(Splits = AllFiles[[3]], ncores = 1)

P2 <- SplitsToDataFrame(Splits = AllFiles[[3]], ncores = 2)

P3 <- SplitsToDataFrame(Splits = AllFiles[[3]], ncores = 4)

P4 <- SplitsToDataFrame(Splits = AllFiles[[3]], ncores = 7)

})

PPar

htmlwidgets::saveWidget(PPar, "profileParallel.html")

saveRDS(PPar, "PPar.rds")