I am trying to ensemble the classifiers Random Forest, SVM and KNN. To combine them, I'm using `VotingClassifier` with `GridSearchCV`. The code works fine when I try it with Logistic Regression, Random Forest and Gaussian Naive Bayes:

```python
clf11 = LogisticRegression(random_state=1)
clf12 = RandomForestClassifier(random_state=1)
clf13 = GaussianNB()
```
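In this working combination, each model is registered under a name (the first element of each tuple passed to `VotingClassifier`), and `GridSearchCV` refers to a sub-model's parameter as `<name>__<parameter>`. A minimal sketch, assuming the same names `lr`, `rf` and `gnb` that appear in the `VotingClassifier` call below, that lists which grid keys are valid for this ensemble:

```python
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.naive_bayes import GaussianNB

# The working combination: each tuple name becomes a parameter prefix.
eclf = VotingClassifier(
    estimators=[
        ("lr", LogisticRegression(random_state=1)),
        ("rf", RandomForestClassifier(random_state=1)),
        ("gnb", GaussianNB()),
    ],
    voting="hard",
)

# Every key that may appear in param_grid for this ensemble.
valid_keys = eclf.get_params().keys()
print("lr__C" in valid_keys)             # True: LogisticRegression has C
print("rf__n_estimators" in valid_keys)  # True: RandomForestClassifier has n_estimators
```

Printing `sorted(valid_keys)` shows the full list of names a grid can use.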

I'm a beginner, and I can't figure out what is wrong with the code below. Here is my attempt with Random Forest, KNN and SVM:

```python
from sklearn.model_selection import GridSearchCV
from sklearn.linear_model import LogisticRegression
from sklearn.naive_bayes import GaussianNB
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.metrics import accuracy_score

# best_k, X_train, X_test, y_train, y_test and scores_dict are defined earlier (not shown)
clf11 = RandomForestClassifier(n_estimators=100, criterion="entropy")
clf12 = KNeighborsClassifier(n_neighbors=best_k)
clf13 = SVC(kernel='rbf', probability=True)

# the first element of each tuple is the name used as the prefix in param_grid keys
eclf1 = VotingClassifier(estimators=[('lr', clf11), ('rf', clf12), ('gnb', clf13)], voting='hard')

params = {'lr__C': [1.0, 100.0], 'rf__n_estimators': [20, 200]}

grid1 = GridSearchCV(estimator=eclf1, param_grid=params, cv=30)
grid1.fit(X_train, y_train)

grid1_predicted = grid1.predict(X_test)
print('Accuracy score : {}%'.format(accuracy_score(y_test, grid1_predicted) * 100))
scores_dict['Logistic-Random-Gaussian'] = accuracy_score(y_test, grid1_predicted) * 100
```

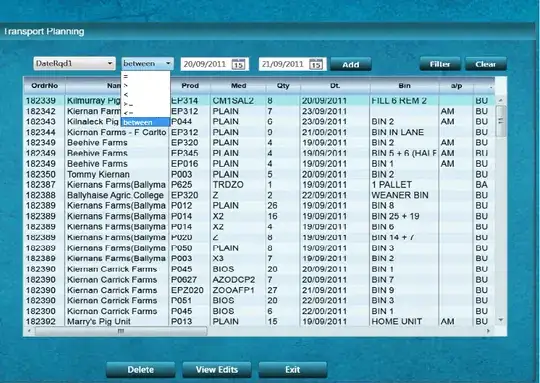

Whenever I run this, I get the following error (the full output is in the screenshot above):

`Invalid parameter estimator VotingClassifier.`
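The `param_grid` prefixes are matched by name, not by model type. In the failing code the name `lr` is attached to the `RandomForestClassifier` and `rf` to the `KNeighborsClassifier`, so `lr__C` and `rf__n_estimators` ask for parameters those models do not have. A minimal check that rebuilds the same ensemble, assuming a fixed `n_neighbors=5` in place of the post's `best_k` (the exact error wording varies between scikit-learn versions):

```python
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

# Same layout as the question: the old names are reused for different models.
eclf1 = VotingClassifier(
    estimators=[
        ("lr", RandomForestClassifier(n_estimators=100, criterion="entropy")),
        ("rf", KNeighborsClassifier(n_neighbors=5)),  # 5 stands in for best_k
        ("gnb", SVC(kernel="rbf", probability=True)),
    ],
    voting="hard",
)

keys = eclf1.get_params().keys()
print("lr__C" in keys)             # False: RandomForestClassifier has no C
print("rf__n_estimators" in keys)  # False: KNeighborsClassifier has no n_estimators

# GridSearchCV calls set_params like this for every candidate, so it fails here.
raised = False
try:
    eclf1.set_params(lr__C=1.0)
except ValueError as exc:
    raised = True
    print("ValueError:", exc)
```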

Is it possible to ensemble Random Forest, SVM and KNN?

If not, is there another way to do it?
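`VotingClassifier` itself accepts any mix of classifiers; the grid keys just have to use each model's own name and its real parameters. A minimal sketch, assuming synthetic data from `make_classification` in place of the post's `X_train`/`y_train`, descriptive names `rf`, `knn` and `svc`, and a small illustrative grid with `cv=5` (none of these values come from the original post):

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

# Synthetic stand-in for the real dataset (assumption).
X, y = make_classification(n_samples=300, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Name each estimator after the model it actually holds.
eclf = VotingClassifier(
    estimators=[
        ("rf", RandomForestClassifier(criterion="entropy", random_state=1)),
        ("knn", KNeighborsClassifier()),
        ("svc", SVC(kernel="rbf")),  # probability=True is only needed for voting="soft"
    ],
    voting="hard",
)

# Every key is <name>__<parameter of that model>.
params = {
    "rf__n_estimators": [20, 100],
    "knn__n_neighbors": [3, 5, 7],
    "svc__C": [1.0, 100.0],
}

grid = GridSearchCV(estimator=eclf, param_grid=params, cv=5)
grid.fit(X_train, y_train)

predicted = grid.predict(X_test)
print(grid.best_params_)
print("Accuracy score : {}%".format(accuracy_score(y_test, predicted) * 100))
```

Switching to `voting="soft"` requires every model to implement `predict_proba`, which for `SVC` means `probability=True`. If KNN or SVC is wrapped in a `Pipeline` with a scaler, the key gains one more level, e.g. `knn__<step name>__n_neighbors`.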