I have a list of Objects that is around 300k. And I'm sending this by batch on a Web API. Since this object is a multi level I decided to send the items in batch of 1000 which converted to JSON string. At the moment, I'm seeing about 50 records inserted per second which my estimation will take around 1+ hour to complete.

In my SQL Server, I have a stored procedure that accepts JSON string and use OPENJSON. The data is inserted into a TempTable which I then reiterate using while as shown (not sure if there's a better way)

While (Select Count(*) From #temporganisations Where process_ind = 0) > 0

Begin

select top 1

@orgid=torg.orgid

from

#temporganisations torg

where torg.process_ind=0

exec AddOrganisation... retvalid out

exec AddLocation...retvalid

exec AddContact...retvalid

etc

.

.

.

delete from #temporganisations

where orgid= @orgid

end

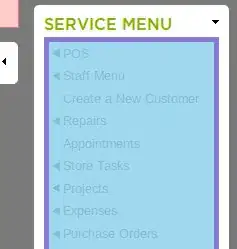

As shown, there are multiple stored procedures called within this stored procedure. Each stored procedure has duplicate checks before it performs the INSERT statements. I'm trying to speedup the process. and it's really taking very long to completed the process. So I discovered the Activity Monitor, and noticed the 'Recent Expensive Queries' as shown below shows the INSERT statements that gets called

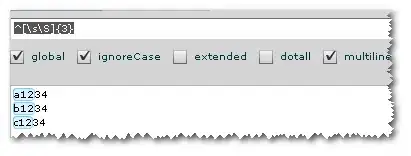

I'm just wondering if there's something I can do increase the performance on these INSERTS, here's the Execution Plan for one of the INSERT

I read somewhere that I could do the INSERT directly but the stored procedure will be HUGE since I need to add the duplicate checks. I also read about using a variable table instead of temp table but I also read an article that variable tables are meant for small datasets. Thanks in advance.

Below is the XML structure. As you can see I need to link each of the child nodes into the parent node which is the organization.

<Organisations>

<Organisation orgRecordClass="R43">

<Name>ORGANIZATION X</Name>

<Date>

<Type value="Operational" />

<Start value="1991-04-01" />

<End value="1994-03-31" />

</Date>

<OrgId root="5161f" assigningAuthorityName="H51" extension="R34" />

<Status value="Inactive" />

<LastChangeDate value="2013-05-08" />

<GeoLoc>

<Location>

<AddrLn1>458 HOMER ROAD</AddrLn1>

<Town>LONDON</Town>

<PostCode>E1 8PL</PostCode>

<Country>ENGLAND</Country>

<UPRN>21521</UPRN>

</Location>

</GeoLoc>

<Contacts>

<Contact type="tel" value=" 233344" />

<Contact type="fax" value=" 233355" />

</Contacts>

<Roles>

<Role id="R12" uniqueRoleId="1" primaryRole="true">

<Date>

<Type value="Operational" />

<Start value="1991-04-01" />

<End value="1994-03-31" />

</Date>

</Role>

</Roles>

<Rels>

<Rel id="RE6" uniqueRelId="58005">

<Date>

<Type value="Operational" />

<Start value="1991-04-01" />

<End value="1994-03-31" />

</Date>

<Status value="Inactive" />

</Rel>

</Rels>

<Succs>

<Succ uniqueSuccId="12">

<Date>

<Type value="Legal" />

<Start value="1993-04-01" />

</Date>

<Type>Successor</Type>

<Target>

<OrgId root="5161f" assigningAuthorityName="H51" extension="R561" />

<PrimaryRoleId id="R12" uniqueRoleId="37607" />

</Target>

</Succ>

<Succ uniqueSuccId="12">

<Date>

<Type value="Legal" />

<Start value="1993-04-01" />

</Date>

<Type>Successor</Type>

<Target>

<OrgId root="5161f" assigningAuthorityName="H51" extension="R561" />

<PrimaryRoleId id="R12" uniqueRoleId="37607" />

</Target>

</Succ>

</Succs>

</Organisation>