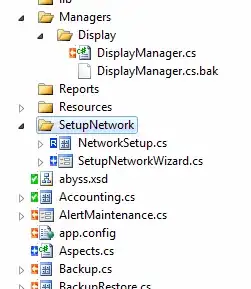

I'm using a pre-trained model from Pytorch ( Resnet 18,34,50) in order to classify images. During the training, a weird periodicity appears in the training as you can see in the image below. Did somebody already have a similar issue?In order to deal with the overfitting, I'm using Data augmentation in the preprocessing. When using SGD as an optimizer with the following parameters, we obtain this sort of graph:

- criterion: `NLLLoss()`
- learning rate: 0.0001
- epochs: 40
- print every 40 iterations
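Since the criterion is `NLLLoss()`, it may help to recall what it computes: with the default `reduction='mean'` and no class weights, it averages `-input[i, target[i]]` over the batch, which is only a meaningful loss if `input` holds log-probabilities. torchvision's ResNet models output raw class scores from `model.fc`, so this setup assumes the classifier head (not shown in the post) ends with `nn.LogSoftmax(dim=1)`; otherwise `nn.CrossEntropyLoss`, which combines LogSoftmax and NLLLoss, is the usual choice. A minimal pure-Python sketch of that arithmetic, where the helper names `log_softmax` and `nll_loss` are illustrative and not PyTorch functions:

```python
import math


def log_softmax(scores):
    """Numerically stable log-softmax of one row of raw class scores."""
    m = max(scores)
    log_sum_exp = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - log_sum_exp for s in scores]


def nll_loss(log_probs, targets):
    """Mean of -log_probs[i][targets[i]], like NLLLoss with reduction='mean' and no weights."""
    return -sum(row[t] for row, t in zip(log_probs, targets)) / len(targets)


# Two samples, three classes (made-up numbers)
scores = [[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]]
targets = [0, 2]

# Intended use: log-probabilities in, positive loss out
print(nll_loss([log_softmax(row) for row in scores], targets))

# Raw scores in: the "loss" is just the negated mean target score
print(nll_loss(scores, targets))  # -2.5
```

Feeding raw scores straight into the NLL step gives a negative value here, which is why the log-softmax step has to come first.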

We also tried Adam and AdaBound as optimizers, but observed the same periodicity.

Thanks in advance for your answers!

Here is the code:

    import timeit

    import torch

    # model, optimizer, criterion, train_loader, val_loader and validation()
    # are defined elsewhere in the script (not shown in this post).

    def train_classifier():
        start = timeit.default_timer()

        epochs = 40
        steps = 0          # global step counter, never reset between epochs
        print_every = 40

        model.to('cuda')

        epo = []
        train = []
        valid = []
        acc_valid = []

        for e in range(epochs):
            print('Currently running epoch', e, ':')
            model.train()
            running_loss = 0   # reset at the start of every epoch

            for images, labels in train_loader:
                steps += 1
                images, labels = images.to('cuda'), labels.to('cuda')

                # Forward pass, loss, backward pass, parameter update
                optimizer.zero_grad()
                output = model(images)
                loss = criterion(output, labels)
                loss.backward()
                optimizer.step()

                running_loss += loss.item()

                if steps % print_every == 0:
                    model.eval()

                    # Turn off gradients for validation, saves memory and computations
                    with torch.no_grad():
                        validation_loss, accuracy = validation(model, val_loader, criterion)

                    print("Epoch: {}/{}.. ".format(e + 1, epochs),
                          "Training Loss: {:.3f}.. ".format(running_loss / print_every),
                          "Validation Loss: {:.3f}.. ".format(validation_loss / len(val_loader)),
                          "Validation Accuracy: {:.3f}".format(accuracy / len(val_loader)))

                    stop = timeit.default_timer()
                    print('Time: ', stop - start)

                    acc_valid.append(accuracy / len(val_loader))
                    train.append(running_loss / print_every)
                    valid.append(validation_loss / len(val_loader))
                    epo.append(e + 1)

                    running_loss = 0
                    model.train()

        return train, epo, valid, acc_valid
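One detail of the logging arithmetic in this loop that may be worth checking: `steps` is global, but `running_loss` is reset to 0 at the start of each epoch, while the printed training loss is always divided by `print_every`. If `len(train_loader)` is not a multiple of `print_every`, the first print of an epoch can sum fewer than `print_every` batches yet still divide by `print_every`, so the logged training loss can dip at epoch boundaries even when the true loss is flat. The pure-Python sketch below replays that logic on made-up per-batch losses and compares it with an average over the batches actually summed. The function name `logged_training_losses`, the `n_batches` counter and the constant-loss input are hypothetical, not part of the original code, and this does not rule out other causes of the periodicity.

```python
def logged_training_losses(batch_losses_per_epoch, print_every):
    """Replay the logging logic of train_classifier() on precomputed losses.

    batch_losses_per_epoch: list of epochs, each a list of per-batch losses
    (hypothetical stand-ins for loss.item()). Returns the value the original
    loop logs (running_loss / print_every) and the mean over the batches
    actually summed into running_loss.
    """
    steps = 0
    as_logged, true_mean = [], []
    for epoch_losses in batch_losses_per_epoch:
        running_loss = 0.0   # reset at every epoch start, as in the original loop
        n_batches = 0        # extra counter: batches summed into running_loss
        for loss in epoch_losses:
            steps += 1       # global counter, never reset between epochs
            running_loss += loss
            n_batches += 1
            if steps % print_every == 0:
                as_logged.append(running_loss / print_every)
                true_mean.append(running_loss / n_batches)
                running_loss = 0.0
                n_batches = 0
    return as_logged, true_mean


# 2 epochs of 100 batches each, constant loss of 1.0 (made-up data)
logged, mean = logged_training_losses([[1.0] * 100, [1.0] * 100], print_every=40)
print(logged)  # [1.0, 1.0, 0.5, 1.0, 1.0]
print(mean)    # [1.0, 1.0, 1.0, 1.0, 1.0]
```

With 100 batches per epoch, the 20 batches after step 80 are dropped when the second epoch resets `running_loss`, so the print at step 120 covers only 20 batches and shows 0.5. Dividing by the number of batches actually accumulated removes that artifact.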