I am trying to understand Gaussian process regression (GPR), and I am testing it by predicting some values. The response is the first component of a PCA, so the data are relatively clean, with no outliers. The predictors also come from a PCA (n=2), and both predictor columns have been standardized with StandardScaler().fit_transform, since previous posts suggested this works better. Because the predictors are standardized, I am using an RBF kernel multiplied by 1**2 and letting the hyperparameters be fitted. The problem is that the model fits the training data perfectly but gives almost constant values for the test data. The data set has 463 points, and no matter whether I randomly select 20-100 or 200 of them for training, or add WhiteKernel() or alpha values, I get the same result. I am almost certain that I am doing something wrong, but I can't find what. Any help? Here is the relevant chunk of code and the output:

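from sklearn.gaussian_process import GaussianProcessRegressor

# cKrnl is assumed to be an alias for ConstantKernel
from sklearn.gaussian_process.kernels import ConstantKernel as cKrnl, RBF

k1 = cKrnl(1**2, (1e-40, 1e40)) * RBF(2, (1e-40, 1e40))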

k2 = cKrnl(1**2,(1e-40, 1e40)) * RBF(2, (1e-40, 1e40))

kernel = k1 + k2

gp = GaussianProcessRegressor(kernel=kernel, n_restarts_optimizer=10, normalize_y=True)

gp.fit(x_train, y_train)

print("GPML kernel: %s" % gp.kernel_)

Output:

GPML kernel: 1**2 * RBF(length_scale=0.000388) + 8.01e-18**2 * RBF(length_scale=2.85e-18)
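
One possible way to read this output: a fitted length_scale of 0.000388 is tiny compared with standardized inputs (which have unit variance), so the RBF correlation between a test point and the training points may be essentially zero, in which case the prediction falls back to the mean. The sketch below checks this on synthetic stand-in data. The array `X`, the random seed, and the 100/363 split are assumptions for illustration, not the real data set.

```python
import numpy as np
from sklearn.gaussian_process.kernels import RBF
from sklearn.metrics import pairwise_distances

# Synthetic stand-in for two standardized predictors (assumption, not the real data).
rng = np.random.default_rng(0)
X = rng.standard_normal((463, 2))
x_train, x_test = X[:100], X[100:]

# Distance from each test point to its nearest training point.
nearest = pairwise_distances(x_test, x_train).min(axis=1)

# Largest RBF correlation between each test point and any training point,
# once for the fitted length scale and once for a length scale of order one.
k_fitted = RBF(length_scale=0.000388)(x_test, x_train).max(axis=1)
k_unit = RBF(length_scale=1.0)(x_test, x_train).max(axis=1)

print("median nearest-neighbour distance:", np.median(nearest))
print("median max correlation, length_scale=0.000388:", np.median(k_fitted))
print("median max correlation, length_scale=1.0:", np.median(k_unit))
```

If the correlations under the fitted length scale come out as essentially zero, almost constant test predictions are what such a fitted model would be expected to produce. Hyperparameter bounds narrower than (1e-40, 1e40) might then be worth trying, although that is only a guess.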

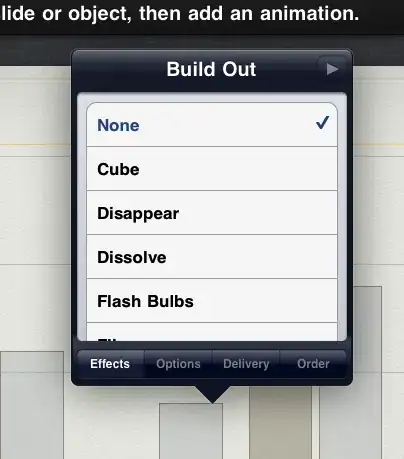

Training data:

Thanks to all!!!