I have an application where I load raster data into a Dask array and only need to process the chunks that overlap some region of interest (ROI). I know that I can create a Dask masked array, but I am looking for a way to prevent certain chunks from being processed at all: some of the ROIs contain multiple polygons that are very far apart, so 90% of the chunks will be discarded in the end.
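A first step toward skipping chunks is knowing which chunks an ROI touches at all. Here is a minimal sketch of that step. It assumes each polygon has already been reduced to a pixel-space bounding box; converting geographic coordinates to pixels is outside this sketch. The function `overlapping_blocks` and the `(row_start, row_stop, col_start, col_stop)` box format are hypothetical. The sketch compares each box against the chunk boundaries that the real `Array.chunks` attribute exposes.

```python
import numpy as np
import dask.array as da


def overlapping_blocks(arr, boxes):
    """Return sorted (row_block, col_block) indices of the chunks of a 2-D
    dask array that intersect at least one box.

    `boxes` holds hypothetical ROI bounding boxes in pixel coordinates,
    half-open like Python slices: (row_start, row_stop, col_start, col_stop).
    """
    # Chunk boundaries along each axis, e.g. chunks (5, 5) -> edges [0, 5, 10].
    row_edges = np.cumsum((0,) + arr.chunks[0])
    col_edges = np.cumsum((0,) + arr.chunks[1])
    hits = set()
    for r0, r1, c0, c1 in boxes:
        for i in range(len(arr.chunks[0])):
            # Half-open intervals [a, b) and [c, d) overlap iff a < d and c < b.
            if not (row_edges[i] < r1 and r0 < row_edges[i + 1]):
                continue
            for j in range(len(arr.chunks[1])):
                if col_edges[j] < c1 and c0 < col_edges[j + 1]:
                    hits.add((i, j))
    return sorted(hits)


raster = da.zeros((10, 10), chunks=(5, 5))
print(overlapping_blocks(raster, [(0, 3, 0, 3), (7, 9, 6, 9)]))  # [(0, 0), (1, 1)]
```

Bounding boxes are conservative. A chunk can overlap a polygon's box without overlapping the polygon itself, so this may keep a few extra chunks, but it never drops a chunk the polygon touches. The resulting indices can be passed to `arr.blocks[i, j]`.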

A simple example is shown below. `arr2` contains no information at all, but it is needed to keep the other chunks aligned.

import numpy as np

import dask.array as da

arr0 = da.from_array(np.arange(1, 26).reshape(5,5), chunks=(5, 5))

arr1 = da.from_array(np.arange(25, 50).reshape(5,5), chunks=(5, 5))

arr2 = da.from_array(np.zeros((5,5)), chunks=(5, 5))

arr3 = da.from_array(np.arange(75, 100).reshape(5,5), chunks=(5, 5))

a = da.block([[arr0, arr1],[arr2, arr3]])

b = da.ma.masked_equal(a, 0)

c = da.min(b)

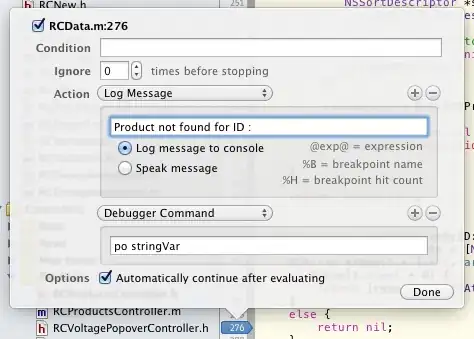

c.visualize()

Plotting the graph shows that `arr2` is still part of the computational graph. It also takes up memory, because it is still evaluated even though it is masked. What I'd like is a way to mask an entire chunk/block so that it is ignored in the computation altogether.
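One possible direction is sketched below under stated assumptions; it is not a definitive answer. Instead of masking, select only the wanted blocks with `Array.blocks` and reduce over those. When a result is computed, Dask's default graph optimization culls tasks that the requested output does not depend on, so a block that is never selected should never be loaded.

To make this observable, the sketch replaces `arr2` with a hypothetical loader, `load_arr2`, wrapped in `dask.delayed`. The loader counts how many times it runs. The `keep` list of block indices is hardcoded here. In practice it would come from an ROI overlap test.

```python
import numpy as np
import dask
import dask.array as da

# Counts how often the (hypothetical) loader for the empty block actually runs.
calls = {"arr2": 0}


def load_arr2():
    """Hypothetical stand-in for reading an empty raster tile from disk."""
    calls["arr2"] += 1
    return np.zeros((5, 5))


arr0 = da.from_array(np.arange(1, 26).reshape(5, 5), chunks=(5, 5))
arr1 = da.from_array(np.arange(25, 50).reshape(5, 5), chunks=(5, 5))
arr2 = da.from_delayed(dask.delayed(load_arr2)(), shape=(5, 5), dtype=float)
arr3 = da.from_array(np.arange(75, 100).reshape(5, 5), chunks=(5, 5))
a = da.block([[arr0, arr1], [arr2, arr3]])

# Masking approach from the question: every block, including arr2, is computed.
masked_min = da.min(da.ma.masked_equal(a, 0)).compute(scheduler="synchronous")
calls_after_mask = calls["arr2"]

# Block-selection approach: only the listed (row_block, col_block) indices
# feed the output, so arr2's loader task is culled before execution.
keep = [(0, 0), (0, 1), (1, 1)]
selected = da.concatenate([a.blocks[i, j].ravel() for i, j in keep])
selected_min = selected.min().compute(scheduler="synchronous")

print(float(masked_min), calls_after_mask)
print(float(selected_min), calls["arr2"] - calls_after_mask)
```

Flattening and concatenating the selected blocks only makes sense for layout-independent reductions such as `min`. For spatially aware processing, each `a.blocks[i, j]` can be handled separately instead. Note that `visualize()` draws the unoptimized graph by default. Passing `optimize_graph=True` should show the culled graph without `arr2`.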