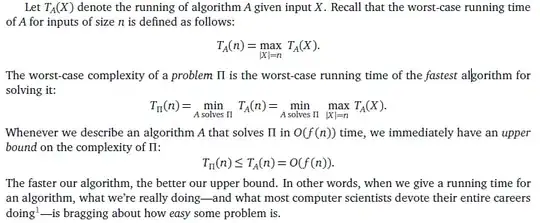

The currently posted answers either address a different topic or do not seem to be fully correct.

Adding the `all_failed` trigger rule to Task-C won't work for the OP's example DAG, `A >> B >> C`, unless Task-A ends in a failed state, which is most probably not desirable.

The OP was, in fact, very close, because the expected behavior can be achieved with a mix of `AirflowSkipException` and the `none_failed` trigger rule:

```python
from datetime import datetime

from airflow.exceptions import AirflowSkipException
from airflow.models import DAG
from airflow.operators.dummy import DummyOperator
from airflow.operators.python import PythonOperator

with DAG(
    dag_id="mydag",
    start_date=datetime(2022, 1, 18),
    schedule_interval="@once",
) as dag:

    def task_b():
        # Raising AirflowSkipException ends the task as "skipped" instead of "failed"
        raise AirflowSkipException

    A = DummyOperator(task_id="A")
    B = PythonOperator(task_id="B", python_callable=task_b)
    # none_failed: run C as long as no upstream task failed (skipped upstreams are fine)
    C = DummyOperator(task_id="C", trigger_rule="none_failed")

    A >> B >> C
```

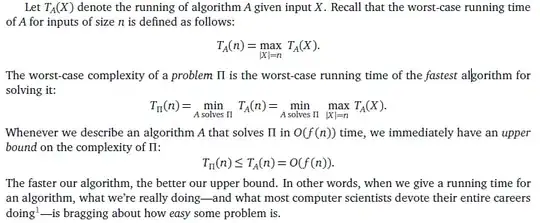

which Airflow executes as follows:

What does this rule mean?

> Trigger Rules
>
> none_failed: All upstream tasks have not failed or upstream_failed - that is, all upstream tasks have succeeded or been skipped

So, basically, we can catch the actual exception in our code and raise the aforementioned Airflow exception, which "forces" the task state to change from failed to skipped.
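A minimal sketch of that catch-and-skip pattern, assuming the task's real work lives in a function that can raise an error you consider "expected". `load_data` and the choice of `ConnectionError` are hypothetical placeholders, and the fallback class is only a stand-in so the snippet runs without Airflow installed; inside a real DAG you would use the import from `airflow.exceptions` directly.

```python
try:
    from airflow.exceptions import AirflowSkipException
except ImportError:
    # Stand-in for running this sketch without Airflow (assumption, not Airflow's class)
    class AirflowSkipException(Exception):
        pass


def load_data():
    """Hypothetical business logic that may fail."""
    raise ConnectionError("source unavailable")


def task_b():
    try:
        load_data()
    except ConnectionError as exc:
        # Convert the expected failure into a skip, so Task-B ends as "skipped", not "failed"
        raise AirflowSkipException(f"Skipping Task-B: {exc}") from exc
```

Catching only the specific exception you expect keeps genuine bugs surfacing as real task failures instead of silently turning every error into a skip.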

However, without the `trigger_rule` argument on Task-C, its default rule (`all_success`) would propagate the skip, and we would end up with Task-B's downstream task marked as skipped as well.
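To make the difference between the two rules concrete, here is a deliberately simplified model of how a task's state is decided once all of its direct upstream tasks have finished. The `resolve` helper is hypothetical and covers only `all_success` (the default) and `none_failed`; Airflow's own scheduling logic handles many more rules and upstream tasks that are still running.

```python
from typing import Sequence

# Upstream states that count as a failure for both rules modelled here
FAILED_STATES = {"failed", "upstream_failed"}


def resolve(trigger_rule: str, upstream_states: Sequence[str]) -> str:
    """Simplified model: what happens to a task once all its upstream tasks are done.

    Returns "run" (the task gets scheduled), "skipped" or "upstream_failed".
    Hypothetical helper for illustration only; not Airflow's implementation.
    """
    failed = sum(state in FAILED_STATES for state in upstream_states)
    skipped = sum(state == "skipped" for state in upstream_states)

    if trigger_rule == "all_success":
        # Default rule: any failure fails downstream, any skip propagates the skip
        if failed:
            return "upstream_failed"
        if skipped:
            return "skipped"
        return "run"

    if trigger_rule == "none_failed":
        # Only failures block the task; succeeded and skipped upstreams are both fine
        if failed:
            return "upstream_failed"
        return "run"

    raise ValueError(f"trigger rule not modelled here: {trigger_rule}")


# Task-C's only upstream is Task-B, which raised AirflowSkipException
print(resolve("all_success", ["skipped"]))  # skipped
print(resolve("none_failed", ["skipped"]))  # run
print(resolve("none_failed", ["failed"]))   # upstream_failed
```

Under this model, switching Task-C from the default rule to `none_failed` is exactly what turns "skipped" into "run" when Task-B is skipped, while a real failure in Task-B still stops Task-C.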