I am training PyTorch models in a notebook on an Azure Databricks cluster, using PyTorch Lightning, and tracking the runs with MLflow.

I would like to store training metrics + artifacts on the Databricks-hosted tracking server.

To enable that, my code is as follows:

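    import mlflow
    import pytorch_lightning as pl
    from pytorch_lightning.callbacks import EarlyStopping

    # Enable automatic MLflow logging for the Lightning training run
    mlflow.pytorch.autolog()

    trainer = pl.Trainer(gpus=1, max_epochs=30, callbacks=[EarlyStopping(monitor='val_loss', patience=6)], progress_bar_refresh_rate=0)
    trainer.fit(classifier, train_dl, valid_dl)
    print("Done")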

However, the notebook cell gets stuck in a "Running command..." state for way too long:

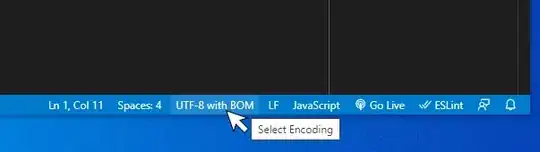

even though in the driver logs execution seems to have ended:

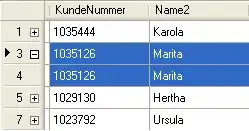

and the experiment is marked as FINISHED in the MLflow UI.

Stopping execution manually doesn't help either: the cell then stays in a "Cancelling..." state forever, so the only option left is to clear the cluster state.

This is a problem because I can't execute further commands that would be useful for artifact logging:

    mapping.to_json("/tmp/mapping.json", orient="records")
    mlflow.log_artifact("/tmp/mapping.json", "mapping")

    torch.save(classifier.state_dict(), "/tmp/model.pt")
    mlflow.log_artifact("/tmp/model.pt", "model.pt")

The hang seems to correlate with GC activity in the driver logs:

    2021-04-22T07:58:58.025+0000: [GC (Allocation Failure) [PSYoungGen: 28399104K->100290K(28499456K)] 28696488K->397698K(85935104K), 0.0755720 secs] [Times: user=0.15 sys=0.06, real=0.08 secs]
    2021-04-22T08:01:01.645+0000: [GC (System.gc()) [PSYoungGen: 4522724K->54360K(28561920K)] 4820132K->351776K(85997568K), 0.0237712 secs] [Times: user=0.09 sys=0.01, real=0.02 secs]
    2021-04-22T08:01:01.669+0000: [Full GC (System.gc()) [PSYoungGen: 54360K->0K(28561920K)] [ParOldGen: 297416K->123173K(57435648K)] 351776K->123173K(85997568K), [Metaspace: 203356K->203325K(219136K)], 0.3513905 secs] [Times: user=0.99 sys=0.00, real=0.36 secs]
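To put numbers on the GC entries quoted above, here is a minimal sketch that extracts the pause time of each collection. It uses only the Python standard library. The names `GC_LOG`, `ENTRY_RE` and `parse_gc_log` are my own, and the regular expression is written only against these three lines, so it is an assumption that other JVM log formats would need a different pattern.

```python
import re

# The three GC entries from the driver logs, one per line.
GC_LOG = """\
2021-04-22T07:58:58.025+0000: [GC (Allocation Failure) [PSYoungGen: 28399104K->100290K(28499456K)] 28696488K->397698K(85935104K), 0.0755720 secs] [Times: user=0.15 sys=0.06, real=0.08 secs]
2021-04-22T08:01:01.645+0000: [GC (System.gc()) [PSYoungGen: 4522724K->54360K(28561920K)] 4820132K->351776K(85997568K), 0.0237712 secs] [Times: user=0.09 sys=0.01, real=0.02 secs]
2021-04-22T08:01:01.669+0000: [Full GC (System.gc()) [PSYoungGen: 54360K->0K(28561920K)] [ParOldGen: 297416K->123173K(57435648K)] 351776K->123173K(85997568K), [Metaspace: 203356K->203325K(219136K)], 0.3513905 secs] [Times: user=0.99 sys=0.00, real=0.36 secs]
"""

# Captures: timestamp, collection kind, cause, and the pause duration
# that precedes the "[Times: ...]" summary (hypothetical pattern, fitted
# to the lines above only).
ENTRY_RE = re.compile(
    r"^(?P<ts>\S+): \[(?P<kind>Full GC|GC) \((?P<cause>.+?)\) "
    r".*?, (?P<secs>\d+\.\d+) secs\] \[Times:"
)


def parse_gc_log(text):
    """Return one dict per GC entry that matches ENTRY_RE."""
    entries = []
    for line in text.splitlines():
        match = ENTRY_RE.match(line.strip())
        if match:
            entries.append(
                {
                    "timestamp": match.group("ts"),
                    "kind": match.group("kind"),
                    "cause": match.group("cause"),
                    "pause_secs": float(match.group("secs")),
                }
            )
    return entries


entries = parse_gc_log(GC_LOG)
total_pause = sum(e["pause_secs"] for e in entries)

for e in entries:
    print(f'{e["timestamp"]}  {e["kind"]:<7}  {e["cause"]:<18}  {e["pause_secs"]:.4f} s')
print(f"total pause: {total_pause:.4f} s")
```

The three pauses add up to roughly 0.45 s, which is tiny compared with how long the cell hangs, so the GC entries may be a symptom rather than the cause.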

Am I doing something wrong? Should I track experiments + artifacts in another way? The problem occurs on both single-node and multi-node clusters, and reducing the size of the training set doesn't help either.