I have a project that uses Spark, in which we calculate some averages on a `Dataset` as follows:

```java
import org.apache.spark.sql.Dataset;
import org.apache.spark.sql.RelationalGroupedDataset;
import org.apache.spark.sql.Row;
import org.apache.spark.sql.functions;

// Method of a class that holds `data` and `column_names` (defined elsewhere, not shown).
public void calculateAverages() {
    this.data.show();

    String format = "HH";

    // Get the dataset such that the Time column only contains the hour.
    Dataset<Row> df = this.data.withColumn("Time",
            functions.from_unixtime(functions.col("Time").divide(1000), format));
    df.show();

    // Group rows by the hour (HH).
    RelationalGroupedDataset df_grouped = df.groupBy("Time");

    // Calculate averages for each column.
    Dataset<Row> df_averages = df_grouped.agg(
            functions.avg(column_names[0]),
            functions.avg(column_names[1]),
            functions.avg(column_names[2]),
            functions.avg(column_names[3]),
            functions.avg(column_names[4]),
            functions.avg(column_names[5]),
            functions.avg(column_names[6])
    );

    // Order the rows from 00 to 23.
    Dataset<Row> df_ordered = df_averages.orderBy(functions.asc("Time"));

    // Show in console.
    df_ordered.show();
}
```

Here, `this.data` is a `Dataset<PowerConsumptionRow>`, where `PowerConsumptionRow` is a custom class.
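To make the expected result concrete, here is a Spark-free sketch in plain Java of roughly what the pipeline computes for a single value column: bucket each row by hour, average per bucket, and sort by hour. It assumes `Time` holds epoch milliseconds (as the `divide(1000)` suggests) and that the Spark session time zone is UTC, since `from_unixtime` formats in the session time zone. The `Reading` class and the `hourOf`/`hourlyAverages` helpers are hypothetical stand-ins, not part of the project.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.util.Locale;
import java.util.Map;
import java.util.TreeMap;

public class HourlyAverages {

    // Hypothetical stand-in for one column of PowerConsumptionRow:
    // an epoch timestamp in milliseconds plus one numeric reading.
    static final class Reading {
        final long timeMillis;
        final double value;

        Reading(long timeMillis, double value) {
            this.timeMillis = timeMillis;
            this.value = value;
        }
    }

    // Roughly mirrors from_unixtime(Time / 1000, "HH"), assuming a UTC session time zone.
    static String hourOf(long timeMillis) {
        int hour = Instant.ofEpochMilli(timeMillis).atZone(ZoneOffset.UTC).getHour();
        return String.format(Locale.ROOT, "%02d", hour);
    }

    // Roughly mirrors groupBy("Time").agg(avg(...)).orderBy(asc("Time")) for one column.
    // TreeMap keeps the "00".."23" keys in ascending order.
    static Map<String, Double> hourlyAverages(Reading[] readings) {
        Map<String, double[]> sums = new TreeMap<>(); // hour -> {sum, count}
        for (Reading r : readings) {
            double[] acc = sums.computeIfAbsent(hourOf(r.timeMillis), k -> new double[2]);
            acc[0] += r.value;
            acc[1] += 1;
        }
        Map<String, Double> averages = new TreeMap<>();
        for (Map.Entry<String, double[]> e : sums.entrySet()) {
            averages.put(e.getKey(), e.getValue()[0] / e.getValue()[1]);
        }
        return averages;
    }

    public static void main(String[] args) {
        Reading[] readings = {
            new Reading(0L, 2.0),          // 1970-01-01 00:00 UTC
            new Reading(1_800_000L, 4.0),  // 00:30 UTC
            new Reading(3_600_000L, 10.0), // 01:00 UTC
        };
        System.out.println(hourlyAverages(readings)); // {00=3.0, 01=10.0}
    }
}
```

Comparing this against the final `show()` output on a small sample is one way to confirm the grouped averages are what you expect.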

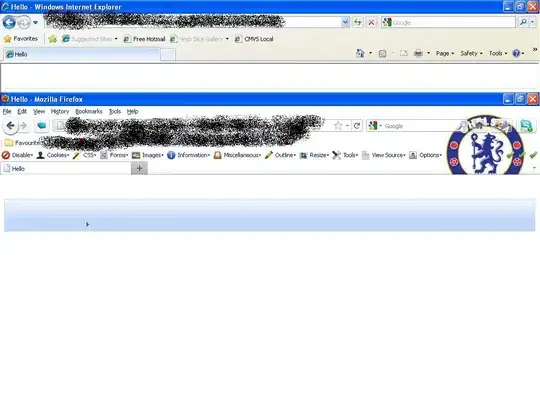

For this code I was expecting the operations groupBy, agg and orderBy to show up as stages in the spark user interface. However, as can be seen below, only the show() operations are showing:

Is there a reason why these operations don't show up? They are all performed successfully, since the output of `show()` is correct.