I have a SQL Server database, in which I'm migrating to AWS S3 in the parquet format to build a data lake. I'm using Apache Airflow to automate this task using DAGS. Each table on schema, in this case, becomes a .parquet file, this serves for the S3 to become a Data Lake and thus be able to use AWS Athena a posteriori and/or to be further indexed in ElasticSearch.

There are some very large tables and the migration task for these tables obviously I hope it to take more time. For python, the only library that I found that connects with Microsoft SQL Server is pyodbc, which is officially developed and maintained by Microsoft.

For such large tables (order of 60 million registers) using cursor.fetchall() was taking too long and resulting in error because the task seems to being killed by Airlfow by a SIGNALKILL.

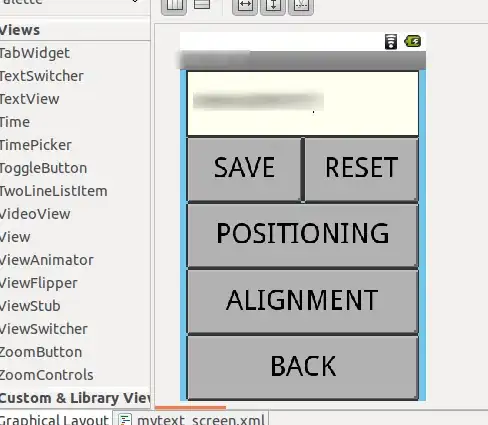

Bellow is an example of how big is the DAG (only part of it):

For fetching all tables in a given schema I'm using the following SQL Server query:

SELECT TABLE_NAME

FROM INFORMATION_SCHEMA.TABLES

WHERE TABLE_TYPE = 'BASE TABLE' AND TABLE_CATALOG='{}';

on the brackets, I use the .format() function in Python to insert the schema name and retrieve the tables for dynamically build the DAG structure. I have changed my python code to fetch the data in such large tables in batches, in order to minimize any potential data overflow:

def stream(cursor, batch_size=50000):

while True:

row = cursor.fetchmany(batch_size)

if row is None or not row:

break

yield row

def fetch_data(query, schema, filename, remote_path, save_locally=False):

cnxn = pyodbc.connect(driver='Here I Put the ODBC Driver Name',

host='Host for de SQL Server DB',

database='Nameof the DB Schema',

user='User for Auth in the DB',

password='Pass for Auth in the DB')

print('Connetciton stabilished with {} ..'.format(schema))

cursor = cnxn.cursor()

print('Initializing cursor ...')

print('Requestin query {} ..'.format(query))

cursor.execute(query)

print('Query fetched for {} ..'.format(schema))

row_batch = stream(cursor)

print('Getting Iterator ...')

cols = cursor.description

cols = [col[0] for col in cols]

print('Creating batch data_frame ..')

data_frame = pd.DataFrame(columns=cols)

start_time = time.time()

for rows in row_batch:

batch_df = pd.DataFrame.from_records(rows, columns=cols)

data_frame = data_frame.append(batch_df, ignore_index=True)

batch_df = None

print("-- Batch inserted in %s seconds --" % (time.time() - start_time))

start_time = time.time()

cnxn.close()

print('Connetciton closed ..')

// other code to convert to .parquet and send to S3

save_to_bucket(data_frame, remote_path)

return 'FETCHING DATA'

That strategy seems to work quite all for 96% of the entire tables of the schema, the thing is, as I said before, when the table is very large, around 60 million records, the task runs for a while, about 30 minutes, but normally after that, Airflow kills the task, just like that. No connections error, neither the python exception or nothing. The only thing that show in terminal of the scheduler is:

[2021-04-17 23:03:59,719] {scheduler_job.py:1199} INFO - Executor reports execution of ORTOCLIN_TO_S3.FETCHING_HISTORICORESUMO_DATA execution_date=2021-04-17 20:00:17.426578+00:00 exited with status success for try_number 1

[2021-04-17 23:05:02,050] {scheduler_job.py:1834} INFO - Resetting orphaned tasks for active dag runs

[2021-04-17 23:10:02,314] {scheduler_job.py:1834} INFO - Resetting orphaned tasks for active dag runs

[2021-04-17 23:15:02,666] {scheduler_job.py:1834} INFO - Resetting orphaned tasks for active dag runs

[2021-04-17 23:20:03,226] {scheduler_job.py:1834} INFO - Resetting orphaned tasks for active dag runs

[2021-04-17 23:25:03,868] {scheduler_job.py:1834} INFO - Resetting orphaned tasks for active dag runs

[2021-04-17 23:30:04,346] {scheduler_job.py:1834} INFO - Resetting orphaned tasks for active dag runs

[2021-04-17 23:35:04,853] {scheduler_job.py:1834} INFO - Resetting orphaned tasks for active dag runs

[2021-04-17 23:40:05,324] {scheduler_job.py:1834} INFO - Resetting orphaned tasks for active dag runs

And on the Airflow logs for the task we have simply:

Is there any workaround for that? Please help me!