I have a Python package (created in PyCharm) that I want to run on Azure Databricks. The Python code runs with Databricks from the command line of my laptop in both Windows and Linux environments, so I don't think the code itself is the problem.

I've also successfully built a Python wheel from the package, and I'm able to run the wheel from the command line locally.

Finally, I've uploaded the wheel as a library to my Spark cluster and created the Databricks Python object in Data Factory, pointing to the wheel in DBFS.

When I try to run the Data Factory pipeline, it fails with an error saying it can't find the module named in the very first import statement of the main.py script. This module (GlobalVariables) is one of the other scripts in my package. It is in the same folder as main.py, although I have other scripts in sub-folders as well. I've tried installing the package into the cluster head and still get the same error:

ModuleNotFoundError: No module named 'GlobalVariables'

Tue Apr 13 21:02:40 2021 py4j imported
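A minimal diagnostic sketch for narrowing this down, assuming the wheel may install the code under a top-level package rather than as loose top-level modules. The package name `mypackage` is a placeholder (not the real package name), and `describe_module` is a hypothetical helper. It reports where a module name would be imported from on the interpreter that runs it, using only the standard library:

```python
import importlib.util
import sys


def describe_module(name):
    """Return the file a module would be loaded from, or None if it is not importable."""
    try:
        spec = importlib.util.find_spec(name)
    except (ImportError, ValueError):
        # Raised when a parent package is missing or a loaded module has no spec.
        return None
    if spec is None:
        return None
    return spec.origin


if __name__ == "__main__":
    # "mypackage" is a placeholder for whatever top-level package the wheel installs.
    for name in ("GlobalVariables", "mypackage.GlobalVariables"):
        print(name, "->", describe_module(name))
    # The search path shows which directories the interpreter actually looks in.
    print("\n".join(sys.path))
```

If the first name resolves to nothing while the package-qualified name resolves to a file, that would suggest the import only worked locally because main.py's own folder was on `sys.path`; this is one possible explanation, not a confirmed cause.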

Has anyone managed to run a wheel distribution as a Databricks Python object successfully, and did you have to do any trickery to have the package find the rest of the contained files/modules?

Your help is greatly appreciated!

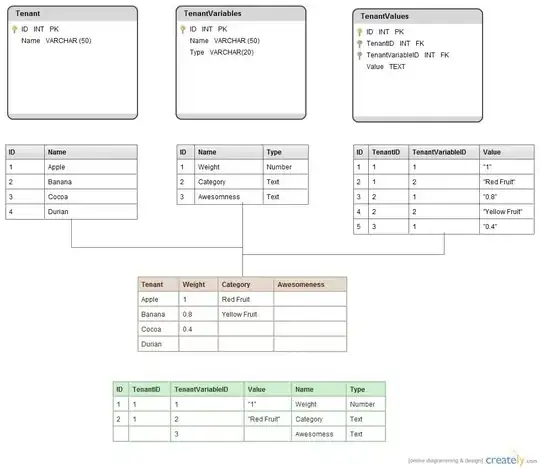

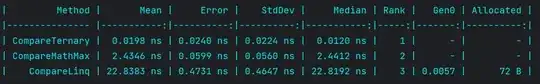

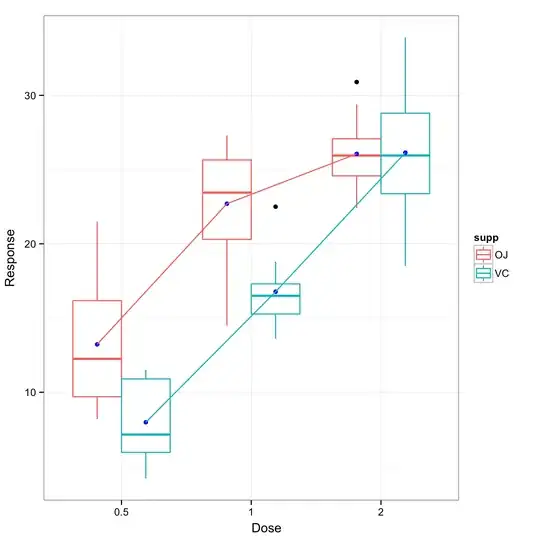

Configuration screen grabs: